HBM Options Increase As AI Demand Soars

High-bandwidth memory (HBM) sales are spiking as the amount of data that needs to be processed quickly by state-of-the-art AI accelerators, graphics processing units, and high-performance computing applications continues to explode.

HBM inventories are sold out, driven by massive efforts and investments in developing and improving large language models such as those behind ChatGPT. HBM is the memory of choice to store much of the data needed to create those models, and the changes being made to increase density by adding more layers, along with the limits of SRAM scaling, are adding fuel to the fire.

“With Large Language Models (LLMs) now exceeding a trillion parameters and continuing to grow, overcoming bottlenecks in memory bandwidth and capacity is mission critical to meeting the real-time performance requirements of AI training and inference,” said Neeraj Paliwal, senior vice president and general manager of silicon IP at Rambus.

At least some of that momentum is a result of advanced packaging, which in many cases can provide shorter, faster, and more robust data paths than a planar SoC. “Leading-edge [packaging] is going gangbusters,” said Ken Hsiang, the head of Investor Relations for ASE, in a recent earnings call. “Whether it’s AI, networking, or other products in the pipeline, the need for our advanced interconnect technologies and all its forms looks extremely promising.”

And this is where HBM fits squarely into the picture. “There is a big wave coming in HBM architectures — custom HBM,” said Indong Kim, vice president and head of DRAM product planning at Samsung Semiconductor, in a recent presentation. “The proliferation of AI infrastructure requires extreme efficiency with a scale-out capability, and we are in solid agreement with our key customers that customization of HBM-based AI will be a critical step. PPA — power, performance, and area are the keys for AI solutions, and customization will provide a significant value when it comes to PPA.”

Economics have seriously limited widespread adoption of HBM in the past. Silicon interposers are pricey, and so is processing the massive numbers of through-silicon vias (TSVs) amongst memory cells in FEOL fabs. “With the demands of HPC, AI and machine learning, the interposer size increases significantly,” said Lihong Cao, senior director of engineering and technical marketing at ASE. “High cost is the key drawback for 2.5D silicon interposer TSV technology.”

While that limits its mass market appeal, demand remains robust for less cost-sensitive applications, such as in data centers. HBM’s bandwidth is unmatched by any other memory technology, and 2.5D integration using silicon interposers with microbumps and TSVs has become the de facto standard.

But customers want even better performance, which is why HBM makers are considering modifications to bump, under-bump and molding materials, amid leaps from 8- to 12- to 16-layer DRAM modules capable of processing data at lightning speeds. The HBM3E (extended) modules process at 4.8 terabytes per second (HBM3) and are poised to hit 1 TB/s at HBM4. One way HBM4 achieves this is by doubling the number of data lines from 1,024 in HBM3 to 2,048.
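To first order, peak per-stack bandwidth is the number of data lines times the per-pin transfer rate, divided by 8 bits per byte. The Python sketch below holds the per-pin rate fixed at 6.4 Gbps (the HBM3 rate Samsung cites; the article gives no HBM4 per-pin rate, so this value is purely illustrative) to isolate the effect of widening the interface from 1,024 to 2,048 lines.

```python
def stack_bandwidth_gbytes(data_lines: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: data lines x per-pin rate (Gb/s) / 8 bits per byte."""
    return data_lines * gbps_per_pin / 8


# Illustrative per-pin rate only; held constant so that width is the only variable.
PIN_RATE_GBPS = 6.4

narrow = stack_bandwidth_gbytes(1024, PIN_RATE_GBPS)  # HBM3-width interface
wide = stack_bandwidth_gbytes(2048, PIN_RATE_GBPS)    # HBM4-width interface

print(f"1,024 lines: {narrow:.1f} GB/s")
print(f"2,048 lines: {wide:.1f} GB/s")
print(f"ratio: {wide / narrow:.1f}x")
```

Doubling the width doubles peak bandwidth at any fixed per-pin rate; any per-pin rate increase in HBM4 would multiply on top of that.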

Today, three companies manufacture HBM modules: Micron, Samsung and SK hynix. Though all of them use through-silicon vias and microbumps to reliably deliver their DRAM stacks and accompanying devices for integration into advanced packages, each is taking a slightly different approach to get there. Samsung and Micron are incorporating a non-conductive film (NCF) and bonding with thermocompression (TCB) at each bump level. SK hynix, meanwhile, is continuing with a flip-chip mass reflow molded underfill (MR-MUF) process that seals the stack in a high-conductivity molding material in a single step.
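The throughput difference between the two flows can be illustrated by counting bonding operations per stack. The Python below is a deliberately simplified toy model, not either vendor's actual process: it assumes NCF-TCB performs one thermocompression bond per bump level (one per stacked DRAM die), while MR-MUF reflows all levels together and then molds the stack once. The function names are hypothetical.

```python
def tcb_bond_operations(dram_layers: int) -> int:
    """Toy NCF-TCB model (assumption): one thermocompression bond per bump level,
    i.e. one per stacked DRAM die."""
    if dram_layers < 1:
        raise ValueError("need at least one DRAM layer")
    return dram_layers


def mr_muf_operations(dram_layers: int) -> int:
    """Toy MR-MUF model (assumption): a single mass reflow joins every bump level,
    followed by a single molding step, regardless of stack height."""
    if dram_layers < 1:
        raise ValueError("need at least one DRAM layer")
    return 2


for layers in (8, 12, 16):
    print(f"{layers:>2}-high: TCB {tcb_bond_operations(layers):>2} bonds, "
          f"MR-MUF {mr_muf_operations(layers)} steps")
```

Only the scaling matters here: in this model, TCB operations grow linearly with stack height while the MR-MUF count stays flat, consistent with the article's point that TCB is a low-throughput process. Real flows include many more steps on both sides.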

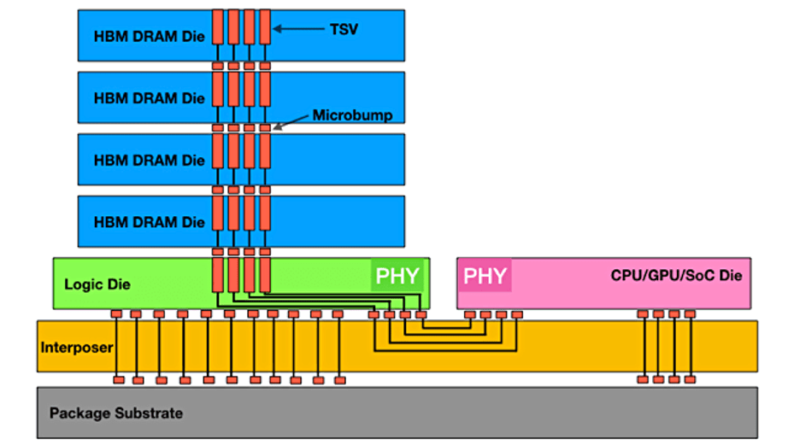

Vertical connections in HBM are made using copper TSVs and scaled microbumps between the stacked DRAM chips. A lower buffer/logic chip provides data paths to each DRAM. Reliability issues largely stem from thermo-mechanical stresses during reflow, bonding, and mold back-grinding. Identifying potential problems requires testing for high-temperature operating lifetime (HTOL), temperature and humidity bias (THB), and temperature cycling. Pre-conditioning and unbiased highly accelerated stress tests (uHAST) are incorporated to determine adhesion between layers. In addition, other testing is needed to ensure long-term operation without microbump failures, such as shorts, metal bridging, or interface delamination between chips and microbumps. Hybrid bonding is an option to replace microbumps in HBM4-generation products, but only if yield targets cannot be met with microbumps.

Fig. 1: HBM stack for maximum data throughput. Source: Rambus

Another advancement under development involves 3D DRAM devices that, like 3D NAND, turn the memory cells on their side. “3D DRAM stacking will dramatically reduce the power and area, while removing the performance barrier coming from the interposer,” said Samsung’s Kim. “Relocating a memory controller from the SoC to a base die will enable more logic spaces designated for AI functions. We strongly believe custom HBM will open up a new level of performance and efficiency. And tightly integrated memory and foundry capability will provide faster time to market and the highest quality for large-scale deployments.”

Fig. 2: Samsung’s DRAM roadmap and innovations. Source: Semiconductor Engineering/MemCon 2024

The overall trend here is moving logic closer to memory to do more processing in or close to memory, rather than moving data to one or more processing elements. But from a system design standpoint, this is more complicated than it sounds.

“This is an exciting time. With AI so hot, HBM is everything. The various memory makers are racing against time to be the first to produce next-generation HBM,” said CheePing Lee, technical director of advanced packaging at Lam Research.

That next generation is HBM4, and JEDEC is busy working out the standard for these modules. In the meantime, JEDEC expanded the maximum memory module thickness from 720µm to 775µm for the HBM3E standard, which still allows for 40µm-thick chiplets. HBM standards specify a per-pin transfer rate, maximum dies per stack, maximum package capacity (in GB), and bandwidth. The design and process simplification that goes along with such standards helps bring HBM products to market at a faster pace, now roughly every two years. The upcoming HBM4 standard will define 24 Gb and 32 Gb layers, as well as 4-high, 8-high, 12-high, and 16-high TSV stacks.
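The layer densities and stack heights in the HBM4 standard translate directly into per-stack capacity. This Python sketch assumes raw capacity is simply dies × per-die density / 8 bits per byte, with no allowance for any overhead.

```python
from itertools import product


def stack_capacity_gb(die_density_gbit: int, stack_height: int) -> float:
    """Raw stack capacity in GB: per-die density (Gb) x number of dies / 8 bits per byte."""
    return die_density_gbit * stack_height / 8


DIE_DENSITIES_GBIT = (24, 32)   # layer densities named for HBM4
STACK_HEIGHTS = (4, 8, 12, 16)  # TSV stack heights named for HBM4

# Print every density/height combination.
for density, height in product(DIE_DENSITIES_GBIT, STACK_HEIGHTS):
    print(f"{density} Gb x {height:>2}-high = {stack_capacity_gb(density, height):g} GB")
```

A 12-high stack of 24 Gb dies, for example, works out to 36 GB, the same capacity Samsung quotes for its 12-layer HBM3E, while a 16-high stack of 32 Gb dies reaches 64 GB.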

HBM’s evolution

The development of high-bandwidth memory traces back to 2008 R&D to solve the problems of increasing power consumption and footprint associated with compute memory. “At that time, GDDR5, as the highest band DRAM, was limited to 28 GB/s, (7 Gbps/pin x 32 I/Os),” said Sungmock Ha and colleagues at Samsung. [1] “On the other hand, HBM Gen2 increased the number of I/Os to 1,024 instead of lowering the frequency to 2.4Gbps to achieve 307.2 GB/s. From HBM2E, 17nm high-k metal gate technology was adopted to reach 3.6Gbps per pin and 460.8 GB/s bandwidth. Now, HBM3 is newly introducing 6.4Gbps per pin transfer rate with 8 to 12 dies stack, which is about 2X bandwidth improvement compared with the previous generation.”
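Each figure in the quote follows from per-pin rate × I/O count / 8 bits per byte. A short Python check using only the quoted rates and I/O counts (with 1,024 I/Os assumed for HBM3, matching the HBM3 interface width cited earlier) reproduces them:

```python
def peak_bandwidth_gbytes(gbps_per_pin: float, io_count: int) -> float:
    """Peak bandwidth in GB/s: per-pin rate (Gb/s) x I/O count / 8 bits per byte."""
    return gbps_per_pin * io_count / 8


# (per-pin rate in Gb/s, I/O count); HBM3's 1,024 I/Os is taken from the HBM3 width.
generations = {
    "GDDR5": (7.0, 32),
    "HBM2": (2.4, 1024),
    "HBM2E": (3.6, 1024),
    "HBM3": (6.4, 1024),
}

for name, (rate, ios) in generations.items():
    print(f"{name:<6} {peak_bandwidth_gbytes(rate, ios):7.1f} GB/s")

gain = peak_bandwidth_gbytes(6.4, 1024) / peak_bandwidth_gbytes(3.6, 1024)
print(f"HBM3 vs HBM2E: {gain:.2f}x")
```

HBM3 comes out at 819.2 GB/s, about 1.78x HBM2E's 460.8 GB/s, consistent with the "about 2X" in the quote.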

That’s only part of the story. HBM has been moving closer to processing to improve performance, and that has opened the door to several processing options.

Mass reflow is the most mature and the least expensive solder option. “In general, mass reflow is used whenever possible, as the installed CapEx is large, and costs are relatively low,” said Curtis Zwenger, vice president of engineering and technical marketing at Amkor. “Mass reflow continues to provide a cost-effective method for joining die and advanced modules to package substrates. However, with increasing performance expectations, and solution spaces with HI modules and advanced substrates, one of the net effects is HI (heterogeneous integration) modules and substrates with increased amount of warpage. Thermocompression and R-LAB (reverse laser assisted bonding) are both process enhancements to conventional MR to better handle higher warpage, both at the HI module level and the packaging level.”

The microbump metallization is optimized to improve reliability. When microbumps are joined to pads using a conventional reflow process with flux and underfill, fine-pitch applications are prone to voids trapped in the underfill and flux residues left between the bumps. To solve these problems, pre-applied non-conductive films (NCFs) can replace the separate flux, underfill, and bonding steps with a one-step bonding process that avoids trapped underfill voids and leftover flux residues.

Samsung is using a thin NCF with thermocompression bonding in its 12-layer HBM3E, which it says has the same height specification as 8-layer stacks, a bandwidth of up to 1,280 GB/s, and a capacity of 36 GB. NCFs are essentially epoxy resins with curing agents and other additives. The technology promises added benefits, especially with higher stacks, as the industry seeks to mitigate the die warpage that comes with thinner dies. Samsung scales the thickness of its NCF material with each generation. The trick is to completely fill the area around the bumps (cushioning them), flow the solder, and leave no voids behind.

SK hynix switched from NCF-TCB to mass reflow molded underfill starting with its HBM2E generation. The conductive mold material was developed in concert with its material supplier and may use a proprietary injection method. The company has demonstrated lower transistor junction temperatures using its mass reflow process.

The DRAM stack in an HBM sits on a buffer die, which is gaining functionality as companies strive to move more logic onto this base die to reduce power consumption, while it also links each DRAM core to the processor. Each die is picked and placed on a carrier wafer, the solder is reflowed, and the final stack is molded, back-ground, cleaned, and diced. TSMC and SK hynix have announced that the foundry will supply base dies to the memory maker going forward.

“There’s a lot of interest in memory on logic,” said Sutirtha Kabir, R&D director at Synopsys. “Logic on memory was something that was looked at in the past, and that can’t be ruled out, either. But each of those will have a challenge in terms of power and thermal, which go hand-in-hand. The immediate effect will be thermal-induced stress, not just assembly-level stress. And you’re most likely going to be using hybrid bonds, or very fine-pitch bonds, so what is the impact of thermal problems on mechanical stress there?”

Heat from that base logic also can induce thermomechanical stresses at the interface between the logic die and the first DRAM die. And because HBM modules are positioned close to the processor, heat from the logic inevitably dissipates into the memory. “Our figure shows that when host chip temperature increases by 2°C, the result is at least a 5°C to 10°C increase on the HBM side,” said Younsoo Kim, senior technical manager of SK hynix.
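The SK hynix figure can be read as a coupling ratio between host and HBM temperature rise. The Python sketch below computes that ratio from the quoted numbers and, as a loudly labeled assumption, scales it linearly to another host temperature rise. Real thermal behavior depends on the package, cooling and workload and need not be linear, and the quoted range is a lower bound ("at least").

```python
# Quoted data point: a 2 °C host rise produces at least a 5-10 °C rise on the HBM side.
HOST_RISE_C = 2.0
HBM_RISE_RANGE_C = (5.0, 10.0)


def coupling_ratio_range() -> tuple[float, float]:
    """HBM temperature rise per degree of host temperature rise, from the quoted figures."""
    low, high = HBM_RISE_RANGE_C
    return (low / HOST_RISE_C, high / HOST_RISE_C)


def hbm_rise_estimate(host_rise_c: float) -> tuple[float, float]:
    """ASSUMPTION: linear scaling of the quoted ratio; a rough illustration, not measured data."""
    low_ratio, high_ratio = coupling_ratio_range()
    return (host_rise_c * low_ratio, host_rise_c * high_ratio)


print(coupling_ratio_range())  # HBM degrees per host degree
print(hbm_rise_estimate(4.0))  # hypothetical 4 °C host rise, linear extrapolation
```

The ratio of 2.5x to 5x is simply the quoted figures restated; the extrapolated value is only as good as the linearity assumption behind it.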

NCF-TCB processes have other issues that need to be solved. Thermocompression bonding, which occurs at high temperature and pressure, can induce 2.5D assembly problems, such as metal bridging or interface delamination between the bumps and the underlying nickel pads. TCB is also a low-throughput process.

For any multi-chiplet stack, warpage issues are tied to mismatches in the thermal expansion coefficients (TCE) of adjoining materials, which create stress as temperatures cycle during processing and use. Stress tends to concentrate at pain points: between the base die and the first memory die, and at the microbump levels. Simulation of product models can help address such issues, but sometimes their full extent only shows up in actual product.
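To first order, the strain from a TCE mismatch is the difference in expansion coefficients multiplied by the temperature swing, ε ≈ |α_a − α_b| · ΔT. The Python sketch below applies this to silicon and copper using approximate handbook values (about 2.6 ppm/K and 17 ppm/K) and an illustrative 225 K swing. The material pairing and the swing are assumptions chosen for illustration; a real stress analysis also needs elastic moduli, geometry and temperature-dependent properties, which is where the simulation mentioned above comes in.

```python
def mismatch_strain(cte_a_ppm_per_k: float, cte_b_ppm_per_k: float, delta_t_k: float) -> float:
    """First-order thermal mismatch strain |alpha_a - alpha_b| * delta_T (dimensionless).

    CTEs are given in ppm/K, so the difference is scaled by 1e-6.
    """
    return abs(cte_a_ppm_per_k - cte_b_ppm_per_k) * 1e-6 * delta_t_k


# Approximate room-temperature CTEs; real values vary with temperature and material grade.
CTE_SILICON = 2.6  # ppm/K
CTE_COPPER = 17.0  # ppm/K

# Illustrative swing, e.g. cooling from a reflow-like temperature to room temperature.
DELTA_T = 225.0    # K

strain = mismatch_strain(CTE_SILICON, CTE_COPPER, DELTA_T)
print(f"mismatch strain: {strain:.2e} ({strain * 100:.3f}%)")
```

With these inputs the mismatch strain comes out to roughly 3.2 × 10⁻³, or about 0.3%, before any constraint from the surrounding stack is considered.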

Conclusion

AI applications depend on the successful assembly and packaging of multiple DRAM chips, TSVs, a base logic die that can include the memory driver, and as many as 100 decoupling capacitors. Marriage with graphics processors, CPUs, or other processors is a well-orchestrated assembly in which all moving parts must come together in unison to form high-yielding and reliable systems.

As the industry transitions from HBM3 to HBM4, the processes for fabricating high levels of DRAM stacks are only going to become more complex. But suppliers and chipmakers also are keeping an eye out for lower-cost alternatives to further increase the adoption of these extremely fast and necessary memory chip stacks.

Related Reading

What Comes After HBM For Chiplets

The standard for high-bandwidth memory limits design freedom at many levels, but that is required for interoperability. What freedoms can be taken from other functions to make chiplets possible?

HBM Takes On A Much Bigger Role

High-bandwidth memory may be a significant gateway technology that allows the industry to make a controlled transition to true 3D design and assembly.

Memory On Logic: The Good And The Bad

Do the benefits outweigh the costs of using memory on logic as a stepping-stone toward 3D-ICs?