Optimizing Interconnect Topology for Automotive ADAS Applications

Partitioning the system into shared and non-shared data regions delivers the benefits of both coherent and non-coherent interconnects.

Designing automotive Advanced Driver Assistance Systems (ADAS) applications can be highly complex. State-of-the-art ADAS and autonomous driving systems use ‘sensor fusion’ to combine inputs from multiple sources, typically cameras and optionally radar and lidar units, going beyond passive and active safety to automate driving. Vision processing systems combine specialized AI accelerators with general-purpose CPUs and real-time actuation CPUs, share data between them where appropriate, and must incorporate enough resilience to achieve the required automotive safety levels. Applying AI/ML to embedded vision while meeting automotive safety requirements creates challenges in memory bandwidth, power and cost. These challenges can be addressed with an optimized interconnect topology and careful system partitioning, giving engineering teams an efficient strategy to meet performance and cost targets.

Automotive safety background

Safety is critical in automotive SoC designs. ISO 26262 defines four Automotive Safety Integrity Levels (ASILs), with ASIL A the lowest certifiable safety level and ASIL D the highest. ASIL D is specified for systems with the highest level of risk, for example brakes, steering and air bags. In contrast, an instrument cluster might typically be ASIL B. ‘QM’ (Quality Management) is assigned where no safety or resilience measures beyond normal quality management are required. Here’s a breakdown of the levels with example applications:

Fig. 1: ASIL levels and example applications.
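To make the ordering concrete, here is a minimal Python sketch that encodes the levels as an ordered enum, using only the example assignments from the text. The names `Asil`, `EXAMPLE_LEVELS` and `required_level` are hypothetical, and the "strictest user wins" rule is a simplified reading of ISO 26262's coexistence principle: a resource shared by functions of different ASILs generally has to meet the highest of them unless freedom from interference can be shown. That rule is the overhead that mixed-criticality partitioning tries to avoid.

```python
from enum import IntEnum


class Asil(IntEnum):
    """Integrity levels ordered so that comparisons reflect stringency."""
    QM = 0  # no requirements beyond normal quality management
    A = 1   # lowest certifiable ASIL
    B = 2
    C = 3
    D = 4   # highest ASIL


# Example assignments taken from the text (illustrative, not a hazard analysis).
EXAMPLE_LEVELS = {
    "brakes": Asil.D,
    "steering": Asil.D,
    "air bags": Asil.D,
    "instrument cluster": Asil.B,
}


def required_level(functions: list[str]) -> Asil:
    """Simplified rule: a shared, non-isolated resource meets its strictest user's level."""
    return max((EXAMPLE_LEVELS[f] for f in functions), default=Asil.QM)


print(required_level(["instrument cluster"]).name)            # B
print(required_level(["instrument cluster", "brakes"]).name)  # D
```

Sharing one unpartitioned resource between the instrument cluster and the brakes pulls the whole resource up to ASIL D, which is why separating regions by criticality pays off.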

Automotive AI challenges

Memory bandwidth is a major challenge in systems with AI accelerators. Automotive safety levels must be met, but applying protection and duplication uniformly throughout the system carries excessive overhead. Mixed criticality is therefore necessary, so that different parts of the SoC can support different levels of resilience.

Interconnect topology for automotive AI

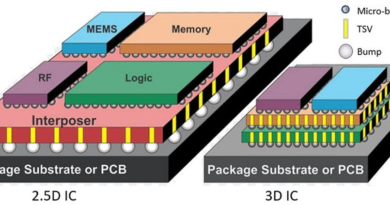

Whenever data is shared in a system with caches, a coherency mechanism is required to ensure that every agent sees the most up-to-date copy of the data. In simple systems, or systems with minimal shared data, software-based coherency may suffice, but high-performance systems use hardware coherency because the overhead and complexity of software solutions are excessive. Hardware coherency operates on cache-line-sized ‘coherency granules’, typically 64 bytes (64B), or 512 bits (512b), in Arm, RISC-V and x86 systems. However, AI accelerators often need 1024- or 2048-bit buses to reach their bandwidth targets, so a single bus transfer spans multiple cache lines.
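The width mismatch is simple arithmetic. This short Python sketch, assuming the 64-byte granule stated above, computes how many coherency granules one data beat carries on buses of different widths; the names `GRANULE_BITS` and `lines_per_beat` are illustrative.

```python
GRANULE_BYTES = 64                # coherency granule (cache line) size from the text
GRANULE_BITS = GRANULE_BYTES * 8  # 512 bits


def lines_per_beat(bus_width_bits: int) -> float:
    """Number of coherency granules carried by one data beat on a bus of this width."""
    return bus_width_bits / GRANULE_BITS


for width in (256, 512, 1024, 2048):
    print(f"{width:5d}-bit bus: {lines_per_beat(width):g} cache line(s) per beat")
```

A 1024-bit beat covers two granules and a 2048-bit beat covers four, so every wide accelerator transfer that went through the coherency system would have to be tracked as several independent cache lines.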

It’s important to identify which data is shared with cached CPUs and which is not. Shared data must be routed through the coherency system, but non-shared data can bypass it. Even when implemented in hardware, coherency costs latency and throughput as well as power and area, so minimizing shared traffic is desirable.
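A minimal Python sketch of this partitioning, assuming sharing is decided by address range (real systems may instead carry a per-transaction sharing attribute). The region names, base addresses, sizes and the `route` helper are all hypothetical; the point is that only accesses to regions shared with cached CPUs take the coherent path.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    name: str
    base: int
    size: int
    shared_with_cpus: bool  # True if cached CPUs may hold copies of this data

    def contains(self, addr: int) -> bool:
        return self.base <= addr < self.base + self.size


# Hypothetical address map: names, bases and sizes are invented for illustration.
ADDRESS_MAP = [
    Region("cpu_shared_buffers", 0x8000_0000, 0x0100_0000, True),
    Region("accel_weights",      0x9000_0000, 0x1000_0000, False),
    Region("accel_activations",  0xA000_0000, 0x1000_0000, False),
]


def route(addr: int) -> str:
    """Pick the interconnect path for an access based on whether its data is shared."""
    for region in ADDRESS_MAP:
        if region.contains(addr):
            return "coherent" if region.shared_with_cpus else "non-coherent"
    raise ValueError(f"address {addr:#x} is not mapped")


print(route(0x8000_0040))  # coherent
print(route(0x9000_0000))  # non-coherent
```

Keeping the bulk accelerator regions (weights, activations) non-shared means the high-bandwidth traffic never pays the coherency cost.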

Ncore from Arteris is a coherent interconnect based on a 256b transport and a 64B coherency granule. In an automotive AI system it would typically be paired with Arteris’ FlexNoC non-coherent interconnect IP, which supports buses up to 2048 bits wide to maximize memory bandwidth, enables advanced Quality-of-Service (QoS), and supports mesh topologies and multicast writes for AI/ML applications. The Ncore proxy cache acts as a buffer between the coherent and non-coherent domains, enabling data from the non-coherent FlexNoC domain to be shared coherently and efficiently through the Ncore interconnect, so that accelerator hardware can interact efficiently with cached CPUs.
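The internals of the Ncore proxy cache are not described here, so the following Python sketch only illustrates the width mismatch such a buffer has to bridge, using the widths stated above: one 2048-bit (256-byte) write from the non-coherent side covers four 64-byte coherency granules, and each full granule takes two beats on a 256-bit transport. The function `split_into_granules` is hypothetical and is not an Arteris API.

```python
GRANULE_BYTES = 64             # coherency granule from the text
COHERENT_TRANSPORT_BITS = 256  # coherent transport width from the text
BEATS_PER_GRANULE = GRANULE_BYTES * 8 // COHERENT_TRANSPORT_BITS  # 512 // 256 = 2


def split_into_granules(addr: int, length: int) -> list[tuple[int, int, int]]:
    """Split the byte range [addr, addr + length) into (granule_base, offset, nbytes) pieces."""
    pieces = []
    end = addr + length
    while addr < end:
        base = addr - addr % GRANULE_BYTES               # align down to the granule
        nbytes = min(end, base + GRANULE_BYTES) - addr   # stop at granule or range end
        pieces.append((base, addr - base, nbytes))
        addr += nbytes
    return pieces


# One 64-byte-aligned 2048-bit (256-byte) write arriving from the non-coherent side.
wide_write = split_into_granules(0x1000, 2048 // 8)
print(len(wide_write), "granules,", len(wide_write) * BEATS_PER_GRANULE, "coherent beats")
```

The beat count assumes every granule is moved as a full line, which holds for the aligned example; an unaligned write produces partial granules at either end.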

Mesh topology

AI/ML systems often consist of multiple repeated processing elements. Fully connected crossbar interconnects offer the lowest latency and highest performance because they provide point-to-point connections, but they don’t scale to large systems. Mesh interconnect topologies can be a good solution for regular designs with repeated processing elements, such as AI accelerators. Processing elements can physically reside ‘within’ the mesh interconnect, so that the interconnect topology mirrors the physical layout.
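The scaling trade-off can be sketched by counting hardware and hops. This Python example assumes a full n×n crossbar with one crosspoint per initiator-target pair, a k×k mesh with links only between neighbouring routers, and dimension-ordered (X then Y) routing; the function names are illustrative.

```python
def crossbar_crosspoints(initiators: int, targets: int) -> int:
    """A full crossbar needs one switch point per initiator-target pair."""
    return initiators * targets


def mesh_links(k: int) -> int:
    """Bidirectional links between neighbouring routers in a k x k mesh."""
    return 2 * k * (k - 1)


def xy_hops(src: tuple[int, int], dst: tuple[int, int]) -> int:
    """Router-to-router hops under dimension-ordered (X then Y) routing."""
    return abs(src[0] - dst[0]) + abs(src[1] - dst[1])


for k in (2, 4, 8):
    n = k * k
    print(f"{n:3d} nodes: crossbar {crossbar_crosspoints(n, n):5d} crosspoints, "
          f"mesh {mesh_links(k):3d} links, worst-case {xy_hops((0, 0), (k - 1, k - 1))} hops")
```

Crossbar cost grows with the square of the node count while mesh links grow roughly linearly; the price the mesh pays is latency, since the worst-case path grows with the mesh dimension.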

Bisection network bandwidth

A crossbar topology establishes full-bandwidth point-to-point connections between each source and destination, whereas mesh bandwidth is determined, and limited, by the mesh size. Network bandwidth grows as the mesh grows, but bisection bandwidth grows only with the square root of the node count. Bisection bandwidth is calculated by cutting the network into two equal halves and summing the bandwidth of the links that cross the cut. Consider a 4×4 mesh with 16 nodes: a bisection through the mesh intersects 4 routes, so the bisection bandwidth is 4× that of a single route.

Fig. 2: Mesh of n nodes with node-to-node bandwidth x. Bisection bandwidth = √n × x.
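The bisection calculation can be checked by enumerating the links of a square mesh. This Python sketch assumes a k×k mesh (k even) with one link between each pair of neighbouring nodes, cuts it vertically into two equal halves, and compares the number of crossing links with the √n × x formula from Fig. 2; the helper names are illustrative.

```python
import math


def mesh_edges(k: int):
    """Yield neighbouring node pairs ((row, col), (row, col)) of a k x k mesh."""
    for r in range(k):
        for c in range(k):
            if c + 1 < k:
                yield (r, c), (r, c + 1)  # horizontal link
            if r + 1 < k:
                yield (r, c), (r + 1, c)  # vertical link


def bisection_width(k: int) -> int:
    """Count links crossing a vertical cut that puts columns < k/2 on the left (k even)."""
    half = k // 2
    return sum(1 for a, b in mesh_edges(k) if (a[1] < half) != (b[1] < half))


def bisection_bandwidth(n: int, x: float) -> float:
    """Fig. 2 formula for a square mesh of n nodes with link bandwidth x."""
    return math.sqrt(n) * x


print(bisection_width(4), bisection_bandwidth(16, 1.0))  # 4 4.0
```

Going from a 4×4 to an 8×8 mesh quadruples the node count but only doubles the bisection width (from 4 to 8 links).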

Multicast writes

AI/ML systems typically contain multiple AI accelerator units. Updating weights and image maps across these units can involve writing the same data to multiple destinations. To reduce network activity, Arteris’ FlexNoC supports the insertion of ‘broadcast stations’ throughout the interconnect. A multicast write targets a broadcast station, which forwards the transaction either to downstream broadcast stations or to its final destinations, so that one write fans out to many targets. This reduces network traffic and improves CPU performance, because the initiator issues one write instead of one per destination.
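A minimal Python sketch of why fan-out saves traffic, counting link traversals in a hypothetical tree of broadcast stations (the topology and node names are invented). It assumes that, without multicast, each destination receives its own copy along the same path, so every copy crosses every link on that path; with broadcast stations, one copy crosses each tree link once.

```python
# Hypothetical fan-out tree: the initiator feeds one broadcast station, which
# forwards to two downstream stations, each serving four accelerators.
TREE = {
    "cpu": ["bs0"],
    "bs0": ["bs1", "bs2"],
    "bs1": ["acc0", "acc1", "acc2", "acc3"],
    "bs2": ["acc4", "acc5", "acc6", "acc7"],
}


def destinations(node: str) -> list[str]:
    """Leaf nodes (final write targets) reachable from this node."""
    children = TREE.get(node, [])
    if not children:
        return [node]
    return [leaf for child in children for leaf in destinations(child)]


def unicast_link_traversals(node: str, depth: int = 0) -> int:
    """Separate writes: each destination's copy crosses every link on its path."""
    children = TREE.get(node, [])
    if not children:
        return depth
    return sum(unicast_link_traversals(child, depth + 1) for child in children)


def multicast_link_traversals(node: str) -> int:
    """Multicast: one copy crosses each tree link exactly once."""
    return sum(1 + multicast_link_traversals(child) for child in TREE.get(node, []))


print(f"destinations={len(destinations('cpu'))}, "
      f"unicast={unicast_link_traversals('cpu')}, "
      f"multicast={multicast_link_traversals('cpu')}")
```

In this example, eight separate writes cost 24 link traversals, while the multicast write costs 11 and the initiator issues a single transaction.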

Safety and resilience

Both Ncore and FlexNoC from Arteris offer safety and resilience options. Arteris network-on-chip IP offers a package of resilience features that can be added to the NoC, such as transport protection (parity or ECC), memory protection (transport of ECC) and duplication. Arteris’ Ncore coherent NoC is certified by Exida to ASIL D, the highest certification level. Achieving the highest resilience levels can be very costly in area and power, so Arteris NoCs allow different safety levels within the same SoC. In an automotive AI vision system, the applications CPU subsystem could be implemented with a coherent Ncore NoC at ASIL B, whereas a safety island for verification and actuation would likely be implemented with a FlexNoC network-on-chip and a real-time processor at ASIL D, because it validates decisions made by the applications CPU and then controls the actuators (steering, brakes, etc.). The ASIL D safety island can thus override AI-derived decisions made by lower-safety-level hardware should they appear unsafe.
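As an illustration of the simplest transport protection mentioned above, here is a generic even-parity sketch in Python. It assumes one parity bit per byte of a transported word; the granularity and encoding Arteris actually uses are not stated here, and the helper names are hypothetical. Parity detects any odd number of flipped bits in a byte but cannot correct them, which is where ECC comes in.

```python
def even_parity_bits(payload: bytes) -> list[int]:
    """One bit per byte, chosen so each byte plus its parity bit has an even number of 1s."""
    return [bin(b).count("1") % 2 for b in payload]


def parity_ok(payload: bytes, parity: list[int]) -> bool:
    """Receiver-side check: recompute parity and compare with the transmitted bits."""
    return even_parity_bits(payload) == parity


word = bytes([0x3C, 0xA5, 0xFF, 0x01])
parity = even_parity_bits(word)                     # generated at the sender
corrupted = bytes([0x3C, 0xA5 ^ 0x08, 0xFF, 0x01])  # single-bit upset in transit
print(parity_ok(word, parity), parity_ok(corrupted, parity))  # True False
```

The extra bits and checkers are cheap per link, but adding them (or ECC, or duplication) everywhere is what makes uniform ASIL D protection costly, hence the mixed-criticality split.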

Conclusion

Designers of automotive ADAS and autonomous driving SoCs face multiple challenges, from memory bandwidth requirements and cache coherency to resilience and safety certification. System design can be optimized by partitioning the system into shared and non-shared data regions, with shared data routed through a coherent interconnect such as Ncore and non-shared data through a wide, high-bandwidth non-coherent interconnect such as FlexNoC. Likewise, the overall SoC should be partitioned into regions of appropriate safety integrity levels (mixed criticality) to minimize the overhead of resilience. The interconnect for AI accelerators can be optimized by using a mesh NoC topology that mirrors the physical layout.

More than 3.5 billion SoCs worldwide use Arteris technology across all verticals. Our expert team can support the optimization of any design with our flexible and configurable IP. To learn more, visit arteris.com.