Top-Down Vs. Bottom-Up Chiplet Design

Chiplets are gaining widespread attention across the semiconductor industry, but for this approach to really take off commercially it will require more standards, better modeling technologies and methodologies, and a hefty amount of investment and experimentation.

The case for chiplets is well understood. They can speed up time to market with consistent results, at whatever process node works best for a particular workload or application, and often with better yield than a large, monolithic SoC. But an underlying tug-of-war is underway between large, vertically integrated players that want to tightly define the socket specifications for chiplets, and a broad swath of startups, systems companies, and government agencies pushing a top-down approach that allows chiplet developers to explore new and different options based on standardized interconnects.

Both approaches face challenges. “If you had the right chiplets, and you got to choose which of those chiplets you were going to use at different process nodes, then you could put together a competitive, differentiated solution without having to hire thousands of engineers and paying fortunes and dealing with re-spins and all those other aspects,” said David Fritz, vice president of Hybrid and Virtual Systems at Siemens Digital Industries Software. “What’s happening is that the same companies that were making fortunes building complex SoCs are jumping straight to the ‘how’ of building chiplets. ‘Here’s how we’re going to do this.’ But they’re forgetting the intermediate step of what exactly needs to be done. What’s lost is, ‘Why are we doing a chiplet?’”

The decisions around which paths to take rest squarely on the shoulders of the chiplet architect or chiplet development team, and typically depend on whether the application targets a broad market or a specific architecture, such as one from Intel or AMD, or a RISC-V design.

In the automotive space, OEMs and Tier Ones like the modular approach of chiplets, but they question the economic benefit. “It’s a different way to get there, and the value proposition will be different,” said Fritz. “‘But still, we have the same companies primarily influencing my limited set of choices.’ So it’s headed in the wrong direction. There’s some research that needs to happen, which is fantastic. But also, how do you explore this partitioning space better, and democratize this in the right way? That way, the end customer — which would be an OEM or a Tier One, regardless of the industry — needs to have the tools necessary to tell someone who’s developed a chiplet, ‘This is what I need your chiplet to do.’”

A stack of complex challenges

One of the biggest challenges with chiplets is how to partition the systems in which those chiplets will be used. That includes where processing occurs, where data is stored, and how data moves around the overall system. An integral part of that decision making is having the right models for power, performance, and thermal.

“These are the main considerations for partitioning, and you already have that when you’re designing a system-on-chip,” said Tim Kogel, senior director of technical product management at Synopsys. “Do you put it on a central compute, or some AI accelerator, or some GPU, or some very dedicated accelerator? And do you put it in off-chip or on-chip memory? What type of interconnect do you use? That’s all partitioning that you already do as an SoC architect. For chiplets, a multi-die design means you have that additional dimension in your design space of which parts of the application do I group together on the same chiplet? Or where do I need to map functions into different chiplets, which then means this is driving the data flows — the communication across the boundary of chiplets. That, of course, has serious implications in terms of the power consumption and performance you are taking with these top-level decisions, and another whole set of decisions regarding which technology do you use, which type of packaging technology do you use? As a designer, now you have all these additional aspects to consider when you are designing or putting together a multi-die chiplet.”
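
To make the point about data flows crossing chiplet boundaries concrete, here is a minimal Python sketch that scores candidate function-to-chiplet mappings by how many bytes cross a die boundary and what that movement costs in energy. The function names, flow sizes, and per-bit energy figures are all hypothetical placeholders, not measured or vendor data; a real partitioning study would also weigh latency, bandwidth limits, area, yield, and packaging cost.

```python
# Hypothetical data-flow graph: (producer, consumer) -> bytes moved per frame.
FLOWS = {
    ("cpu", "npu"): 4_000_000,
    ("npu", "dram_ctrl"): 16_000_000,
    ("cpu", "dram_ctrl"): 2_000_000,
    ("isp", "npu"): 8_000_000,
}

# Placeholder energy costs in picojoules per bit; illustrative only, not process data.
ON_DIE_PJ_PER_BIT = 0.1
DIE_TO_DIE_PJ_PER_BIT = 0.5


def evaluate_partition(mapping: dict[str, str]) -> tuple[int, float]:
    """Score a function-to-chiplet mapping.

    Returns the bytes per frame that cross a chiplet boundary and the total
    data-movement energy per frame in microjoules.
    """
    crossing_bytes = 0
    energy_pj = 0.0
    for (src, dst), nbytes in FLOWS.items():
        bits = nbytes * 8
        if mapping[src] != mapping[dst]:
            # The flow leaves its chiplet, so it pays the die-to-die cost.
            crossing_bytes += nbytes
            energy_pj += bits * DIE_TO_DIE_PJ_PER_BIT
        else:
            energy_pj += bits * ON_DIE_PJ_PER_BIT
    return crossing_bytes, energy_pj / 1e6


candidates = {
    "npu with cpu": {"cpu": "die0", "npu": "die0", "isp": "die1", "dram_ctrl": "die1"},
    "npu with memory controller": {"cpu": "die0", "npu": "die1", "isp": "die1", "dram_ctrl": "die1"},
}
for label, mapping in candidates.items():
    crossing, energy_uj = evaluate_partition(mapping)
    print(f"{label}: {crossing / 1e6:.1f} MB crossing, {energy_uj:.1f} uJ/frame")
```

Because only flows between different chiplets pay the die-to-die cost, placing the NPU next to the memory controller cuts boundary traffic in this toy example; with real numbers the ranking could just as easily flip.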

Determining the data-flow communications within the chiplet and between chiplets also comes with modeling considerations, which can vary greatly depending on whether chiplets are developed in-house or by a third party.

“With a vertically integrated model, you own both the application and the semiconductor and can really optimize across both, and everything is under control,” Kogel explained. “You don’t need to worry about interoperability. You can go to the same fab to fabricate. That’s the classic chiplet design that we’ve known from Intel and AMD for a long time. They did this to optimize yields, or because the chips were becoming too big. But we didn’t see the need for interoperability and for IP. But now there are many trends that make it interesting to build ecosystems around multi-die, and you need to start wondering about interoperability. There is a need for standards and collaboration, because having the IP for the interoperability is a necessary condition that it can work at all. But to design a successful and optimized product, there’s more on top of that, and that goes back to the data flows.”

What’s needed here is an architecture specification that defines the product in the right way and makes sure it’s correct in all three dimensions: power, performance, and thermal. “There will certainly be an aspect of IP that is needed to make the communications work, as well as include the aspect of silicon lifetime management to observe the healthiness of all pieces and monitor that everything is still working as expected,” said Kogel. “It’s like a continuous testing that all sites are still healthy, and there will be software stacks that are related to the management, especially in domains like automotive where there is a safety and security aspect. You don’t want these multi-die systems to be hacked at the boundary. It’s an attack surface for security issues. The safety needs to be monitored continuously, so all the protocols are in place to make sure that the communication happens in a safe way. It’s all aspects — architecture, software, IP, and silicon lifetime management — that need to contribute to make that work successful.”

Arm, for one, has started to integrate chiplets together into subsystems or modules, which simplifies and speeds up the incorporation of chiplets into a design. “The workload and software is where the differentiation is happening,” said Christopher Rumpf, senior director of automotive at Arm. “We will still sell IP products, but we are now also assembling them into larger subsystems based on our standardized compute platform, which can lower the cost of porting and validation. We see this as a precursor to the chiplet world.”

Others are moving in the same direction. For example, Cadence just taped out a system chiplet based on Arm’s Chiplet System Architecture.

Fig. 1: Cadence’s system chiplet architecture. Source: Cadence

Specific chiplet models needed

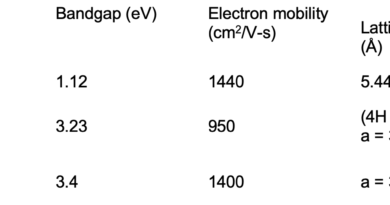

To perform the system-level analysis that chiplets require, architects and designers have specific modeling needs. “One is performance modeling, which is transistor-level modeling,” said Mayank Bhatnagar, director of product marketing at Cadence. “Also, when we are putting the analysis together, we are looking at the models for thermal analysis. Those are required for chiplet design, because you are going to be all together in one package, and the package designer needs to know how much heat flow there is, the number of heat sinks that need to be put in, and how the heat of one chiplet is going to impact the chiplet sitting next to it. This is much more important now than in monolithic design, because in a monolithic case that power modeling — or things like Vt drift with power — are more readily captured since everything is the same process and being simulated at the same time. But with chiplet design, you want to be able to design this one chiplet with the model of this other chiplet without having to look inside those other chiplets. In that sense, the model becomes much more important now.”
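
The neighbor-heating concern can be illustrated with a lumped, linear steady-state thermal model, in which each chiplet’s temperature is ambient plus the superposition of every chiplet’s power times a thermal-resistance coefficient. This is a simplified sketch, not how a package thermal tool works internally; the chiplet names, the coefficient matrix `R`, the ambient temperature, and the power numbers are all hypothetical.

```python
# Hypothetical three-chiplet package. R[i][j] is the steady-state temperature
# rise (kelvin) at chiplet i per watt dissipated in chiplet j. Off-diagonal
# terms model heating of neighbors. Values are placeholders, not measured data.
NAMES = ["cpu", "gpu", "io"]
R = [
    [0.30, 0.10, 0.02],
    [0.10, 0.25, 0.05],
    [0.02, 0.05, 0.60],
]


def steady_state_temps(power_w: list[float], ambient_c: float = 45.0) -> list[float]:
    """Superpose every chiplet's contribution: T_i = T_amb + sum_j R[i][j] * P_j."""
    return [ambient_c + sum(r_ij * p_j for r_ij, p_j in zip(row, power_w)) for row in R]


def neighbor_rise(victim: str, aggressor: str, power_w: list[float]) -> float:
    """Temperature rise at `victim` caused only by the power in `aggressor`."""
    i, j = NAMES.index(victim), NAMES.index(aggressor)
    return R[i][j] * power_w[j]


power = [60.0, 120.0, 10.0]  # watts per chiplet at a hypothetical operating point
for name, temp in zip(NAMES, steady_state_temps(power)):
    print(f"{name}: {temp:.1f} C")
print(f"io heated by gpu: {neighbor_rise('io', 'gpu', power):.1f} K")
```

The off-diagonal terms are what a chiplet designer cannot see without a model of the neighboring die, which is why the coupling data has to travel with the collateral.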

Today, these models are typically provided as design collateral. “The transaction-level models are for performance, and the rest are all physical models that are submitted as design collateral when somebody buys IP for, say, the UCIe,” noted Arif Khan, senior product marketing group director at Cadence. “The thermal models and physical models that they want — the IBIS models and others for signal integrity, because you want to make sure that your system is going to work, and all the other analog models that you would want to make sure that the system is going to behave — ensure that the circuitry will work as intended. All that collateral is available. The key is that each model serves a different purpose. For the architects, they want to start with a performance model and work at a much higher level before they get to the physical modeling, and once they’ve started the implementation of the design.”

What makes the modeling especially challenging is that it is dynamic. “As more people are designing chiplet-based things, these models are evolving in their maturity and how they are used by the engineering team,” Bhatnagar said. “Chiplet-based design is still at a very early stage, and there are a lot of things that are happening. For example, with heat-based impacts, how the EDA tools use it and what kind of model details are provided are very similar to when the IR drops in monolithic design. It tends to be more of a challenge with the newer technologies, which means more details. With chiplets, the models also are becoming more detailed, but EDA tools are getting better at using those details to perform a finer analysis.”

Technically speaking, there are a number of deliverables for chiplet models. “When we provide a chiplet from our standard chiplet portfolio, such as our I/O chiplet, we provide the bare dies bumped,” said Sue Hung Fung, principal product line manager for chiplets at Alphawave Semi. “For the IP design files, we provide the IBIS-AMI models and current profiles of the interface IPs. We also provide the design IP collateral for the chiplet top and infrastructure, which includes the encrypted top-level netlist of the chiplet. Chiplet subsystems will include all the peripherals and GPIO IP, PLL, and a top level demo testbench to exercise end-to-end traffic, read, write, and testcase scenarios. For package design files, we provide a .mcm with a die bump map. We also provide the package stack up requirements and guidelines for the substrate materials and process. For board design files, we provide the board reference design including schematics, Gerber files, PCB stackup, and impedance routing rules. We also provide mission mode firmware for the chiplet with self-test capability. This includes the API for the interface IP. We provide application code for the host PC OpenOCD scripts to connect to the chiplet. There are C drivers for connecting to the chiplet die through UART or SPI interfaces. The documentation will include a datasheet for the chiplet, a user manual, bill of materials for the board, and register definitions.”
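
A collateral list this long lends itself to an automated completeness check on delivery. The Python sketch below is hypothetical throughout: the category names and item labels are paraphrased from the list above, not Alphawave Semi’s actual file names or package structure.

```python
# Hypothetical collateral checklist grouped like the deliverables described above.
# Item names are illustrative labels, not a vendor's real file names.
REQUIRED_COLLATERAL: dict[str, set[str]] = {
    "ip_design": {"ibis_ami_models", "current_profiles", "encrypted_top_netlist", "demo_testbench"},
    "package": {"mcm_die_bump_map", "stackup_requirements", "substrate_guidelines"},
    "board": {"schematics", "gerber_files", "pcb_stackup", "impedance_routing_rules"},
    "software": {"mission_mode_firmware", "interface_api", "openocd_scripts", "c_drivers"},
    "docs": {"datasheet", "user_manual", "board_bom", "register_definitions"},
}


def missing_collateral(delivered: dict[str, set[str]]) -> dict[str, set[str]]:
    """Return, per category, the required items that are absent from a delivery."""
    gaps = {}
    for category, required in REQUIRED_COLLATERAL.items():
        missing = required - delivered.get(category, set())
        if missing:
            gaps[category] = missing
    return gaps


# Example: copy the checklist, then drop one board file and all documentation.
delivery = {k: set(v) for k, v in REQUIRED_COLLATERAL.items()}
delivery["board"].discard("gerber_files")
del delivery["docs"]
for category, items in sorted(missing_collateral(delivery).items()):
    print(category, sorted(items))
```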

These deliverables include models of the workload, as well as performance, power, IP and physical models. “All the IP models are contained in the top level netlist,” Hung Fung said. “The performance and power are validated through simulations and correlated with the IP testchips, and then also validated post chiplet on the bench. All the IP that are used on the chiplets are pre-verified and silicon proven prior to being used on the chiplet itself.”

Defining chiplet models

Chiplet modeling still has a long way to go before chiplets can be bought in a commercial marketplace, but that’s the goal. Work is already well underway, as evidenced by a joint effort by the Open Compute Project (OCP) and JEDEC to define chiplet models.

“The collaboration aims to come up with a descriptive language to allow people to better address this problem,” said Kevin Donnelly, vice president of strategic marketing at Eliyan. “It’s still nascent, and people are trying to figure out how they do it. If it’s vertically integrated and you control everything, you control the models that you used for all the pieces, because this is really a problem. Down the road what is required to make this work, and what a chiplet designer needs, is an availability of parts that they can plug-and-play together, and clearly we’re not there for a number of reasons. One of them is the ability to have models that allow you to figure out what can talk to each other. The other is just the whole interoperability aspect.”
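
As an illustration of the “what can talk to each other” problem, the sketch below checks a proposed link between two die-to-die port descriptors. The `D2DPort` fields and the policy of running at the fastest shared rate over the narrower port are assumptions made for this example; they do not reflect the CDXML format or any standard’s link-training rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class D2DPort:
    """Hypothetical die-to-die port descriptor; field names are illustrative only."""
    chiplet: str
    protocol: str                 # e.g. "UCIe"
    package: str                  # e.g. "standard" or "advanced"
    lanes: int
    rates_gtps: frozenset[float]  # per-lane data rates the port supports


def check_link(a: D2DPort, b: D2DPort):
    """Return (ok, problems, (lanes, rate)) for a proposed link between two ports."""
    problems = []
    if a.protocol != b.protocol:
        problems.append(f"protocol mismatch: {a.protocol} vs {b.protocol}")
    if a.package != b.package:
        problems.append(f"package mismatch: {a.package} vs {b.package}")
    common_rates = a.rates_gtps & b.rates_gtps
    if not common_rates:
        problems.append("no common data rate")
    if problems:
        return False, problems, None
    # Assumed policy for this sketch: fastest shared rate over the narrower port.
    return True, [], (min(a.lanes, b.lanes), max(common_rates))


io_die = D2DPort("io_die", "UCIe", "standard", 16, frozenset({4.0, 8.0, 16.0}))
npu = D2DPort("npu", "UCIe", "standard", 16, frozenset({4.0, 8.0, 12.0, 16.0, 32.0}))
accel = D2DPort("accel", "UCIe", "advanced", 64, frozenset({16.0, 32.0}))

print(check_link(io_die, npu))
print(check_link(io_die, accel))
```

Even this toy check needs both sides to publish their parameters in a shared, machine-readable form, which is the gap a common description language is meant to close.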

That’s where the Chiplet Data Extensible Markup Language (CDXML) exchange format could play a significant role. “Much like every company has its own SoC design and development flow, every company is going to have its own chiplet design and development flow,” said Elad Alon, founder and CEO of Blue Cheetah. “And that’s part of why this generic plug-and-play chiplet thing is hard, because even if we forget all the other physical things associated with chiplet design, will somebody have followed the exact same flow? We’re not in the land of PCBs. We’re in the land of packages, and a package is not as forgiving in terms of putting things together — not just from a mechanical or electrical perspective, but from a turnaround time point of view. There are a lot of things you can get around in a PCB and spin fairly quickly. That’s not true with a package — especially not with an advanced package.”

With SerDes or PCI Express, for example, there are standardized connections. That’s not as well defined for chiplets. “It’s a different level of challenge, which the industry is trying to overcome, that impedes the ability to get to a plug-and-play landscape,” Donnelly said. “That’s one of the things that needs to happen. The two things that make the most sense to move off [an SoC] are memories and I/Os, because analog and mixed signal and memories don’t scale with logic processes. That’s been true forever. I did a lot of embedded memories, and people would choose the process node that had flash available instead of choosing the process node they wanted. Now you can move those things off. Move the SerDes off, move the memory off. Companies that build memories and build SerDes will build chiplets and make them available, and that will start to enable a market that has to overcome things like models and interoperability to come to fruition as an actual landscape chiplet market.”

PHY models also are needed for full system level simulation. “This can be a fully digital Verilog model,” said Ashley Stevens, director of product management and marketing at Arteris. “It doesn’t need to model all the analog functionality for most uses. Very helpful is the ability to skip the PHY training phase, which takes a long time before the actual functionality can commence and would be modeled in a fully accurate analog model.”
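
A minimal Python sketch of a digital PHY stand-in with an option to skip training. The `DigitalPhyModel` class, its `training_cycles` default, and the countdown used in place of real training are hypothetical; the point is only that bypassing training lets simulation cycles go straight to functional traffic.

```python
from collections import deque


class DigitalPhyModel:
    """Hypothetical digital stand-in for a D2D PHY; no analog behavior is modeled.

    With skip_training=True the link is up from cycle 0, so simulation time
    goes to functional traffic instead of the training sequence.
    """

    def __init__(self, training_cycles: int = 100_000, skip_training: bool = False):
        self.cycles_until_up = 0 if skip_training else training_cycles
        self.peer = None
        self.rx = deque()

    def connect(self, peer: "DigitalPhyModel") -> None:
        self.peer, peer.peer = peer, self

    @property
    def link_up(self) -> bool:
        return self.cycles_until_up == 0

    def tick(self, cycles: int = 1) -> None:
        # Training is abstracted to a countdown rather than simulated.
        self.cycles_until_up = max(0, self.cycles_until_up - cycles)

    def send(self, flit: bytes) -> None:
        if self.peer is None or not (self.link_up and self.peer.link_up):
            raise RuntimeError("link not up")
        self.peer.rx.append(flit)

    def receive(self):
        return self.rx.popleft() if self.rx else None


def cycles_to_first_flit(skip_training: bool) -> int:
    """Count simulated cycles spent before the first flit can be sent."""
    a = DigitalPhyModel(skip_training=skip_training)
    b = DigitalPhyModel(skip_training=skip_training)
    a.connect(b)
    cycles = 0
    while not (a.link_up and b.link_up):
        a.tick()
        b.tick()
        cycles += 1
    a.send(b"hello")
    assert b.receive() == b"hello"
    return cycles


print(cycles_to_first_flit(skip_training=True), cycles_to_first_flit(skip_training=False))
```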

IP-XACT models of the chiplets are very useful for top-level connectivity and system-wide register management. In addition, Stevens noted that performance models are helpful because they allow longer workloads and multiple clusters to be simulated in a reasonable timeframe, which supports architectural decisions. “The PHY itself is here a relatively simple model, but it needs to have as much as possible standardized interfaces, which can be fed and consumed by architectural models on either side of the C2C/D2D link.”
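
To show how IP-XACT descriptions can feed top-level register management, the sketch below reads the address blocks from a deliberately trimmed component description with Python’s standard `xml.etree.ElementTree`, places two instances of the same chiplet in a system address map, and rejects overlapping ranges. The element names follow the IEEE 1685-2014 (IP-XACT) schema, but the component, its blocks, and the system base addresses are hypothetical, and a real description carries far more content than this fragment.

```python
import xml.etree.ElementTree as ET

NS = {"ipxact": "http://www.accellera.org/XMLSchema/IPXACT/1685-2014"}

# Trimmed, illustrative IP-XACT component for a hypothetical I/O chiplet.
IO_DIE_XML = """<ipxact:component xmlns:ipxact="http://www.accellera.org/XMLSchema/IPXACT/1685-2014">
  <ipxact:vendor>example.com</ipxact:vendor>
  <ipxact:library>chiplets</ipxact:library>
  <ipxact:name>io_die</ipxact:name>
  <ipxact:version>1.0</ipxact:version>
  <ipxact:memoryMaps>
    <ipxact:memoryMap>
      <ipxact:name>regs</ipxact:name>
      <ipxact:addressBlock>
        <ipxact:name>d2d_ctrl</ipxact:name>
        <ipxact:baseAddress>0x0000</ipxact:baseAddress>
        <ipxact:range>0x1000</ipxact:range>
        <ipxact:width>32</ipxact:width>
      </ipxact:addressBlock>
      <ipxact:addressBlock>
        <ipxact:name>health_monitor</ipxact:name>
        <ipxact:baseAddress>0x1000</ipxact:baseAddress>
        <ipxact:range>0x400</ipxact:range>
        <ipxact:width>32</ipxact:width>
      </ipxact:addressBlock>
    </ipxact:memoryMap>
  </ipxact:memoryMaps>
</ipxact:component>"""


def address_blocks(xml_text: str) -> list[tuple[str, int, int]]:
    """Return (block name, base offset, size) for each addressBlock in a component."""
    root = ET.fromstring(xml_text)
    blocks = []
    for blk in root.iterfind(".//ipxact:addressBlock", NS):
        blocks.append((
            blk.findtext("ipxact:name", namespaces=NS),
            int(blk.findtext("ipxact:baseAddress", namespaces=NS), 0),
            int(blk.findtext("ipxact:range", namespaces=NS), 0),
        ))
    return blocks


def build_system_map(placements: list[tuple[str, str, int]]) -> list[tuple[int, int, str]]:
    """Place chiplet instances at system base addresses; raise on any overlap."""
    entries = []
    for instance, xml_text, sys_base in placements:
        for name, base, size in address_blocks(xml_text):
            entries.append((sys_base + base, sys_base + base + size, f"{instance}.{name}"))
    entries.sort()
    # After sorting by start address, any overlap shows up between neighbors.
    for (_, end0, name0), (start1, _, name1) in zip(entries, entries[1:]):
        if start1 < end0:
            raise ValueError(f"{name1} overlaps {name0}")
    return entries


for start, end, name in build_system_map([("io0", IO_DIE_XML, 0x10000), ("io1", IO_DIE_XML, 0x20000)]):
    print(f"{start:#010x}-{end - 1:#010x} {name}")
```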

Power models also can help. “Chiplets generally fall into 2D (of which there are multiple variants) and 3D,” he said. “3D chiplets are integrated one above the other using TSV interconnects. Power (heat dissipation) can be a big issue in 3D integration, but users are generally focused on forms of 2D chiplets — UCIe Advanced Packaging, where chiplets are mounted on and connected together by a silicon interposer, or UCIe Standard Packaging, where they are mounted on a standard organic package, in both cases side-by-side.”

But the workload is something the chiplet developer has to determine. “As an IP provider, we test with synthetic workloads, but the provided model should be able to consume/play the customer-specified workloads,” Stevens noted. “Which level of detail the model itself has is a trade-off between expected speed and accuracy. Models are always an approximation in some way or form. There is no single model that fits all use cases. The trick is to be able to provide the right model for the customer needs. Currently the focus is on a closed ecosystem of chiplets, where they’re designed and verified together. The verification shows they work together. Within the industry there’s a strong desire to create a chiplet ecosystem where chiplets designed to a specific interface protocol will be interoperable and usable in a mix-and-match environment. This means verifying the chiplet standalone without the chiplet(s) it will eventually be connecting to. This will require verification IP and models that replace the remote chiplet so we can be confident the chiplet will work with other chiplets built to the same standard. The industry is not at this point yet.”
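
Standalone verification against a model of the remote chiplet might look like the following Python sketch. The request format, the `RemoteChipletModel` class, its alignment rule, and the `dut_copy_words` function standing in for the chiplet under test are all hypothetical; production verification IP for an interface standard would be far more complete.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Request:
    op: str       # "RD" or "WR" in this hypothetical protocol
    addr: int
    data: int = 0


class RemoteChipletModel:
    """Hypothetical stand-in for the far-side chiplet.

    Behaves like a simple memory target and records protocol violations,
    so the local chiplet can be exercised without its real partner.
    """

    def __init__(self, size: int = 4096):
        self.size = size
        self.mem: dict[int, int] = {}
        self.violations: list[str] = []

    def handle(self, req: Request) -> tuple[bool, Optional[int]]:
        # Assumed protocol rule: 4-byte-aligned addresses inside the target's range.
        if req.addr % 4 or not 0 <= req.addr < self.size:
            self.violations.append(f"{req.op} to illegal address {req.addr:#x}")
            return False, None
        if req.op == "WR":
            self.mem[req.addr] = req.data
            return True, None
        if req.op == "RD":
            return True, self.mem.get(req.addr, 0)
        self.violations.append(f"unknown opcode {req.op!r}")
        return False, None


def dut_copy_words(link: RemoteChipletModel, src: int, dst: int, count: int) -> None:
    """Hypothetical behavior of the chiplet under test: copy words across the link."""
    for i in range(count):
        ok, value = link.handle(Request("RD", src + 4 * i))
        if ok:
            link.handle(Request("WR", dst + 4 * i, value))


model = RemoteChipletModel()
model.mem.update({0x0: 0x11, 0x4: 0x22, 0x8: 0x33})
dut_copy_words(model, src=0x0, dst=0x100, count=3)
assert [model.mem[0x100 + 4 * i] for i in range(3)] == [0x11, 0x22, 0x33]
assert model.violations == []
```

The same model can be reused unchanged against any chiplet that claims to implement the protocol, which is what makes a shared remote-side model valuable for mix-and-match ecosystems.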

Models also should ideally be transferable between tools, but because there is such a large set of tools, this is often very difficult. One useful level, which almost everybody can agree on, is a pin-level accurate model. Another is a purely functional model for software bring-up. In between sits the cycle-approximate model, which can be very costly to develop but has significant value for architectural simulations.
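
The trade-off between abstraction levels can be sketched by giving two link models the same interface: an untimed functional model, as used for software bring-up, and a cycle-approximate one that estimates timing from a fixed latency plus serialization at a fixed bandwidth. The class names and the latency and bandwidth values are hypothetical, and a pin-level model is out of scope here.

```python
from typing import Protocol


class LinkModel(Protocol):
    def transfer_cycles(self, nbytes: int) -> int: ...


class FunctionalLink:
    """Untimed model: adequate for software bring-up, where only data matters."""

    def transfer_cycles(self, nbytes: int) -> int:
        return 0


class CycleApproximateLink:
    """Timing from an assumed fixed latency plus serialization at a fixed bandwidth."""

    def __init__(self, fixed_latency: int, bytes_per_cycle: int):
        self.fixed_latency = fixed_latency
        self.bytes_per_cycle = bytes_per_cycle

    def transfer_cycles(self, nbytes: int) -> int:
        # Ceiling division: a partial beat still occupies a full cycle.
        return self.fixed_latency + -(-nbytes // self.bytes_per_cycle)


def workload_cycles(link: LinkModel, transfers: list[int]) -> int:
    """Total link cycles for back-to-back, non-overlapping transfers."""
    return sum(link.transfer_cycles(n) for n in transfers)


transfers = [64, 100, 4096]  # hypothetical transfer sizes in bytes
print(workload_cycles(FunctionalLink(), transfers))
print(workload_cycles(CycleApproximateLink(fixed_latency=20, bytes_per_cycle=64), transfers))
```

Because both classes expose the same `transfer_cycles` method, a workload can be rerun at either level without changes; that shared interface is what makes swapping models between tasks practical.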

Chun-Ting “Tim” Wang Lee, signal integrity application scientist and high-speed digital applications product manager at Keysight Technologies, suggested some key questions for users to ask their IP providers:

- How do you bridge the gap between IP and the models?

- How flexible is this for use in simulations?

- How are chiplet simulation results correlated with measurement?

- How is “accuracy” defined for chiplet models?
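
One possible, and deliberately simple, answer to the accuracy question is to report the worst-case relative error and the RMS error between paired simulated and measured results, then compare against an agreed tolerance. The metric choice, the 5% tolerance, and the eye-height numbers below are hypothetical; which metrics and tolerances apply is exactly what the questions above ask an IP provider to spell out.

```python
import math


def correlation_report(simulated: list[float], measured: list[float], rel_tol: float) -> dict:
    """Compare paired simulation and bench results under one possible accuracy definition."""
    if len(simulated) != len(measured) or not measured:
        raise ValueError("need equal-length, non-empty result lists")
    if any(m == 0 for m in measured):
        raise ValueError("relative error is undefined for zero measurements")
    errors = [s - m for s, m in zip(simulated, measured)]
    max_rel = max(abs(e) / abs(m) for e, m in zip(errors, measured))
    rms = math.sqrt(sum(e * e for e in errors) / len(errors))
    return {"max_rel_error": max_rel, "rms_error": rms, "within_tol": max_rel <= rel_tol}


# Hypothetical eye heights (mV) at three operating points: simulation vs. bench.
sim = [52.0, 48.0, 45.0]
meas = [50.0, 48.0, 50.0]
print(correlation_report(sim, meas, rel_tol=0.05))
```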

New approaches required

The catalyst for enabling all of this from a system-level perspective will be success stories.

“It will happen once there are successful product families that have been driven forward by an organization where they say, ‘These are my chiplet sockets. Please come play in this playground,’” said Blue Cheetah’s Alon. “Along with the socket definitions, they will have defined the specific ways in which they’re going to evaluate and check all these things, from a modeling perspective, from a design infrastructure perspective, from a collateral perspective. You must get that whole package together. The whole hope is that there’ll be a decent amount of standardization that will happen on that front. The players already doing chiplets can certainly contribute quite a bit in terms of their learning, their experiences. But this all has to be aligned. And from an incentive perspective, until there’s a proof point, it’s hard for people to convince themselves to let out all of their internal learning and move away from believing they shouldn’t let it out because it’s competitive advantage. Real, concrete opportunities of getting slots in somebody else’s SoC is the catalyst for all of this.”

That also changes the dynamics of the chiplet business. “This puts the power back into the ones that are going to have to put up the money, to buy these chiplets, put them together, package them, and get them tested,” said Siemens’ Fritz. “They’re the ones that must understand the business model to drive this and what their end customers need, instead of somebody else saying, ‘I don’t want to lose control of this market, so, here’s your chiplet. If you don’t like it, go find another.’ That whole thing is turning around.”

But making that happen will require a renegade, Fritz says. “We need somebody to go out there and say, ‘I don’t really care about all these committees. You can talk about this for decades to come and never decide anything, but this is what I’m doing.’ Then everybody would say, ‘Wow, that worked out. I’m going to do the same thing,’ and suddenly it develops a de facto standard, and we move into standardization, not the other way around. There’s this drive to standardization to try to reduce cost, which in many cases is a tactic to delay the growth of an industry until some larger contributors are ready for it to happen. That’s why we need a renegade. A renegade could be a company that already owns the IP to complete an important part of the chiplet, because they don’t have to worry about the licensing impact. They don’t have to do any of that. They say, ‘Look at what we’ve got. If you want it, here it is, and this is how we chose to do it. If you want to interact with our chiplet, this is how you’re going to do it.’”
