Fantastical Creatures

In my day job I work in the High-Level Synthesis group at Siemens EDA, specifically focusing on algorithm acceleration. But on the weekends, sometimes, I take on the role of amateur cryptozoologist. As many of you know, the main Siemens EDA campus sits in the shadow of Mt. Hood and the Cascade Mountain range. This is prime habitat for Sasquatch, also known as “Bigfoot”.

This weekend, armed with some of the latest surveillance gear – night vision goggles, a drone, and trail cameras – I went hunting for the elusive hominid. (Disclosure notice: we may receive compensation for affiliate links referenced in this blog post.) Driving down an old forest service road I noticed a wildlife trail that looked recently used. I stopped my car and got out for a closer look. A few broken stems and crushed leaves on the trail showed that something large recently passed this way. I saw a tuft of red-brown hair caught on the thorns of a wild blackberry bush. It was too long to be from a bear, and too coarse to be from a wolf. I bagged it for future analysis. There were footprints on the trail, but nothing definitive. I hiked the trail for a bit, listening carefully to the sounds of the forest. It was silent except for a gentle wind whispering through the old growth pines.

It turned out to be another weekend without a sighting. Driving back home I was thinking about another mythical creature I read about recently. It was in a blog post from Shreyas Derashri at Imagination Technologies, titled “The Myth of Custom Accelerators.” Not everyone is a believer – I get that. But much like Sasquatch, custom accelerators are not a myth.

Derashri makes a lot of good points. His blog post is focused on AI accelerators. He starts by pointing out that on constrained edge systems performance and efficiency are important. Then he observes that flexibility is important, which it is. AI algorithms are rapidly changing, and a software-programmable device, like a GPU, can be re-programmed as new algorithms are developed. He argues that NPUs, and more custom implementations, can be too limiting and might not be able to address future requirements. He then talks about the key features of the Imagination GPU that support AI algorithms, such as low-precision numbers. He finishes by saying that continuing advances in silicon will improve the performance and efficiency of GPUs.

His arguments are valid and sound, and I agree with his reasoning. But (and you knew there was a “but” coming…) his view is a bit too narrow. Let’s consider the breadth of edge systems.

Years ago, I went to a keynote talk at an Embedded Systems Conference given by Arm’s VP of IoT. She started her talk by putting up a picture of an oil drilling platform from the North Sea. “This,” she said, “is an IoT device. It has a 10-megawatt generator on board.” Then she put up a slide with a medical implantable device (I don’t recall the exact device). “This is also an IoT device. It needs to run for 10 years off a watch battery.” It was a wonderfully graphic way of explaining the incredible diversity of edge devices. There are about 20 orders of magnitude difference in energy available to those two IoT systems. Not to put too fine a point on it, but that range is more than a million times greater than the difference between your net worth and that of Jeff Bezos (pretty much regardless of your actual net worth).

Whether you call it the “edge”, “IoT”, or just plain “embedded systems”, the vast range of systems, applications, and requirements for all the electronic systems around us (and now on us, and even in us) means that there is no “one size fits all”. Some embedded systems can send inferences to a data center next door to the Bonneville Dam, but some need to process them on board. Some have hard real-time requirements, some don’t. Some systems will be harvesting power from their environment using thermal differentials or thumbnail-sized solar panels to get a few microjoules, while others will have a 2-gauge power cord attached to a nuclear reactor.

Engineers deal in trade-offs. One set of trade-offs is the balance between customization and general-purpose capability. In hardware design, as an implementation is customized to handle only a very specific function, it can easily be made faster, smaller, and more power efficient.

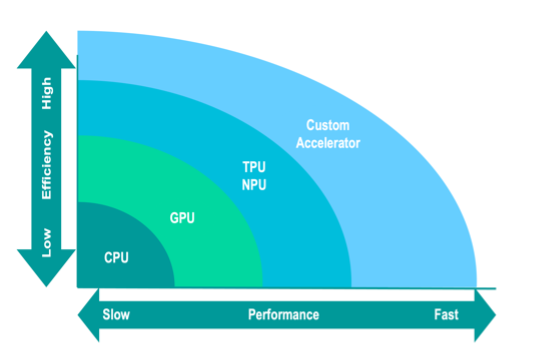

A CPU is the most general-purpose way to get any computing done. It is infinitely flexible, but it will be the slowest and most power-inefficient implementation there is. GPUs are both faster and more efficient. An NPU delivers yet higher performance and efficiency, albeit with some risk of application change. Finally, a custom hardware implementation, while at the greatest risk for requirement and application changes, delivers significant performance and efficiency gains over an NPU.

Consider a system that sends the characters “Hello, World!\n” to a UART. I could build that with a simple 8-bit wide FIFO and some interface logic. It would be immensely fast, and quite small. It has one job, and it nails it. But it couldn’t do much else. Alternatively, one could deploy an ARM Cortex eighty-whatever CPU and do the same thing. It would take millions of clocks to boot Linux, initialize the system, create a user process, and then send the characters. It would take orders of magnitude more energy and be thousands of times bigger. But retargeting the system to do something else, like, say, playing Pac-Man, would be possible.
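To make the single-purpose version concrete, here is a rough C++ sketch of the whole "application". It is an illustration only: `ByteFifo`, `send_hello`, and `drain` are hypothetical names, and a `std::queue` stands in for the 8-bit hardware FIFO. Real HLS code would use whatever channel or stream type the tool provides.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <queue>
#include <string>

// Hypothetical software stand-in for the 8-bit wide FIFO feeding the UART.
using ByteFifo = std::queue<std::uint8_t>;

// The entire design: a fixed message pushed into the FIFO one byte at a time.
// In hardware this amounts to a small ROM, a counter, and some handshake logic.
void send_hello(ByteFifo& uart_fifo) {
    static const char message[] = "Hello, World!\n";
    // sizeof counts the terminating '\0', which is not transmitted.
    for (std::size_t i = 0; i + 1 < sizeof(message); ++i) {
        uart_fifo.push(static_cast<std::uint8_t>(message[i]));
    }
}

// Testbench helper: empty the FIFO in order, as a UART transmitter would.
std::string drain(ByteFifo& uart_fifo) {
    std::string sent;
    while (!uart_fifo.empty()) {
        sent.push_back(static_cast<char>(uart_fifo.front()));
        uart_fifo.pop();
    }
    return sent;
}
```

Calling `send_hello` on an empty `ByteFifo` and then `drain` returns the 14-character message. There is no boot sequence, no operating system, and nothing to reprogram, which is exactly the trade being described.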

Devices that are more deeply embedded have a lower risk of future functional changes and scope creep than more general-purpose compute systems. Consider an AI that determines transmission shift points for a car. While better algorithms for AI may be discovered during its service life, if the original implementation provides adequate functionality there is no harm in leaving it in place until the car is retired. And it probably won’t need to take on any object recognition or large language model processing over its tenure. Of course, the same is not true for the in-cabin infotainment system, a much more general-purpose system. If a better gesture recognition system or voice interface comes along it would be valuable to update that system. Like I said, there is no “one size fits all” in the embedded world.

As I said earlier, my day job is working on High-Level Synthesis. It allows hardware developers to design at a higher level of abstraction. Folks who’d rather not manually define every single wire, register, and operator in their design kinda like it. It lets them design and verify hardware faster. Since HLS compilers take in C++, and AI algorithms can be written in C++, one of the cool things we can do with it is compare a software programmable implementation against a custom hardware accelerator.

We have done this a bunch of times on different inferencing algorithms. What we have consistently found is that CPUs are the slowest and most inefficient way to run an inference, GPUs go faster and use less energy (but usually more power), and NPUs are faster and more efficient still. But custom (or as I like to call them, “bespoke”) hardware accelerators can deliver performance and efficiency beyond the NPU. And not just 10% or 15% faster, more like 10 or 15 times faster.
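The “less energy but more power” remark can look contradictory until you remember that energy is power multiplied by time. The C++ sketch below works through that arithmetic. The `Implementation` struct and every number in it are invented for illustration; they are not measurements from our comparisons.

```cpp
#include <cassert>

// Hypothetical figures for illustration only; these are not measured results.
struct Implementation {
    const char* name;
    double power_watts;      // average power drawn while running one inference
    double latency_seconds;  // time taken to complete one inference
};

// Energy per inference in joules: E = P * t.
double energy_joules(const Implementation& impl) {
    assert(impl.power_watts >= 0.0 && impl.latency_seconds >= 0.0);
    return impl.power_watts * impl.latency_seconds;
}

// Made-up CPU: low power, but it takes a full second per inference (2 J).
const Implementation kCpu{"CPU", 2.0, 1.0};

// Made-up GPU: four times the power, but it finishes 16 times sooner (0.5 J).
const Implementation kGpu{"GPU", 8.0, 0.0625};
```

With these assumed values the GPU draws more power than the CPU yet spends a quarter of the energy per inference, because it finishes so much sooner. The same arithmetic applies when comparing an NPU or a bespoke accelerator.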

But what about what Derashri said about re-programmability? Well, he’s right. Re-programmability is a very desirable characteristic for a system. But what if you could run 10 times faster without it? Or use 5% of the energy to perform the same function? That can be the difference between a winning product and a flop. What good is re-programmability if you can’t keep up?

Bottom line, if your inferences meet your performance and efficiency goals running on a CPU, count your lucky stars and use a CPU – you probably already have one in your design. If not, maybe a GPU or NPU would do the trick. But if you need to go even faster or burn less energy, a custom accelerator may be the way to go.

With HLS you can know the difference between a software implementation and a bespoke hardware implementation. You can make an informed decision and know exactly how much performance and efficiency you’re gaining for giving up that precious re-programmability. Without HLS, you’re going to need to make one of those engineering guesstimates. And, good luck with that, by the way!

The post Fantastical Creatures appeared first on Semiconductor Engineering.