Is PPA Still Relevant Today?

The optimization of power, performance, and area (PPA) has been at the core of chip design since the dawn of EDA, but these metrics are becoming less valuable without the context of how and where these chips will be used.

Unlike in the past, however, that context now comes from factors outside of hardware development. And while PPA still serves as a useful proxy for many parts of the hardware development flow, the individual components are often less relevant than in the past.

Additional concerns have been added to the mix over the years, particularly energy and thermal. These metrics are all interlinked, which makes it impossible to treat them as separate axes for optimization. Composite metrics, such as performance per watt or joules per operation, may be more meaningful. Sustained throughput and operation latency are other important factors.
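To make the composite metrics concrete, here is a minimal Python sketch using two hypothetical runs of the same workload. The class and field names are illustrative assumptions, not any tool's API. It shows that performance per watt and joules per operation are reciprocal views of the same ratio, and that the faster run can still lose on both.

```python
from dataclasses import dataclass


@dataclass
class RunMeasurement:
    """One measured run of a workload. All numbers used below are hypothetical."""
    operations: int      # operations completed during the run
    runtime_s: float     # wall-clock duration of the run, in seconds
    avg_power_w: float   # average power drawn during the run, in watts

    @property
    def energy_j(self) -> float:
        # Energy = average power x time.
        return self.avg_power_w * self.runtime_s

    @property
    def throughput_ops_per_s(self) -> float:
        return self.operations / self.runtime_s

    @property
    def perf_per_watt(self) -> float:
        # (ops/s) / W reduces to ops per joule.
        return self.throughput_ops_per_s / self.avg_power_w

    @property
    def joules_per_op(self) -> float:
        # The reciprocal view of perf_per_watt.
        return self.energy_j / self.operations


fast = RunMeasurement(operations=1_000_000, runtime_s=2.0, avg_power_w=10.0)
frugal = RunMeasurement(operations=1_000_000, runtime_s=5.0, avg_power_w=2.0)

for name, run in (("fast", fast), ("frugal", frugal)):
    print(f"{name}: {run.throughput_ops_per_s:,.0f} ops/s, "
          f"{run.perf_per_watt:,.0f} ops/J, {run.joules_per_op:.1e} J/op")
```

With these numbers the fast run delivers 2.5 times the throughput, while the frugal run completes each operation for half the energy, which is exactly the kind of tradeoff a single PPA number hides.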

With the proliferation of domain-specific solutions, context becomes increasingly important. Context can come from two separate disciplines — system and software. While some progress has been made bringing hardware and software development and analysis together, and more recently hardware and systems together, those links remain tenuous. Nevertheless, future designs will have to link them.

While global optimization becomes possible when all three are considered together, this increasingly is considered a luxury because software is changing so rapidly in segments such as AI. Systems can be optimized only for what is known today. Any future-proofing could be counter-productive if those speculations are incorrect.

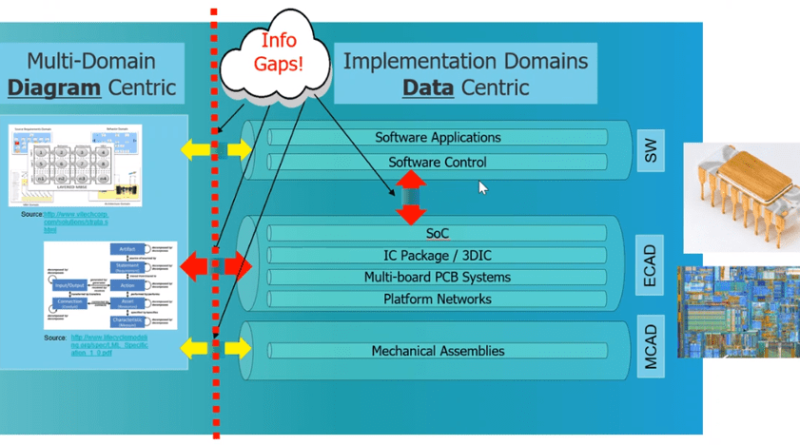

Creating effective workflows is becoming paramount to success. “Hardware engineers think they have good methods in place,” says Ahmed Hamza, solution architect for the cybertronic system engineering initiative at Siemens EDA. “They don’t think they need the system engineers. They have been developing very complex architectures in hardware. They get a few requirements from the system engineers that are thrown over the fence (see figure 1). Then they race to build things. The problem is they build perfect hardware, but you put software on top and everything breaks. Then the blame game starts.”

Fig. 1: Gaps between groups create communication problems. Source: Siemens EDA

A tangled web

In the early days, chip design was a performance race. “While that was across disciplines, the poster child was the CPU gigahertz race,” says Rob Knoth, group director for strategy and new ventures at Cadence. “When mobile computing showed up, it made room for a whole new set of EDA technologies and design methodologies that cared about low power and energy. That requires a multi-disciplinary approach. That’s hard. You need to bring more people to the table. To accurately measure power, you have to talk about activity. You have to be looking at tradeoffs. You have to be looking at compromises. You have to be thinking about the best overall circuit.”
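The dependence on activity shows up in the standard first-order model of switching power, P = αCV²f, where α is the fraction of the capacitance that switches per cycle. α is set by the workload, not by the netlist. A minimal sketch with hypothetical values, ignoring leakage:

```python
def dynamic_power_w(activity: float, switched_cap_f: float,
                    vdd_v: float, freq_hz: float) -> float:
    """First-order switching power: P = alpha * C * Vdd^2 * f."""
    return activity * switched_cap_f * vdd_v ** 2 * freq_hz


# Hypothetical design point: same netlist, same supply, same clock.
C_SWITCHED_F = 1e-9  # total switchable capacitance, 1 nF (assumed)
VDD_V = 0.8          # supply voltage (assumed)
FREQ_HZ = 1e9        # 1 GHz clock (assumed)

# Only the activity factor differs between the two workloads.
light = dynamic_power_w(0.05, C_SWITCHED_F, VDD_V, FREQ_HZ)
heavy = dynamic_power_w(0.30, C_SWITCHED_F, VDD_V, FREQ_HZ)
print(f"light workload: {light * 1e3:.0f} mW, heavy workload: {heavy * 1e3:.0f} mW")
```

The hardware is identical in both cases; a sixfold change in activity produces a sixfold change in dynamic power, which is why power cannot be measured without a workload.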

Over the years, additional factors have become important. “Performance is still important to be measurable, and area is directly associated with the cost of the silicon,” says Andy Nightingale, vice president of product management and marketing at Arteris. “The traditional values are still very important, but as you combine them together with thermal, which is related to power density, it affects how a device is operating. If it overheats it has to power down, and the performance drops along with the energy consumption.”

More factors are added with every node. “The goal in design closure is to optimize across multiple variables, based on certain conditions or inputs provided by the user,” says Manoj Chacko, senior director of product management at Synopsys. “In addition to PPA, there is now R, for reliability or robustness. This started when we had to consider IR voltage drop, which was impacting performance. Techniques to mitigate that were developed. Then we see variability — of the devices, and device behavior changing based on the neighbors and its context — and that is impacting the performance of a design and impacting power.”

All of these effects are interrelated. “If you can space out your activity, you can bring down peak power consumption,” says Ninad Huilgol, founder and CEO of Innergy Systems. “This is an important consideration when determining the size of power supply, your power grid, and IR drop from a system-level perspective. Spreading out the activity can be done by introducing idle cycles in the activity stream, or by changing the frequency of the clock dynamically. The upshot is that the performance will be lower, but average power consumption that affects thermal can increase.”
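A toy cycle-level sketch of spreading activity, assuming every unit of work costs the same hypothetical switching energy. Capping work per cycle stands in for idle-cycle insertion or clock scaling. It shows only the first-order effect on peak power and runtime; leakage and clock-tree power during the added cycles are deliberately left out.

```python
def spread_activity(work_units: int, max_units_per_cycle: int) -> list[int]:
    """Schedule work so no cycle exceeds `max_units_per_cycle` units.

    Capping per-cycle work is a stand-in for inserting idle cycles or
    lowering the clock: the same work is stretched over more cycles.
    """
    full_cycles, remainder = divmod(work_units, max_units_per_cycle)
    trace = [max_units_per_cycle] * full_cycles
    if remainder:
        trace.append(remainder)
    return trace


ENERGY_PER_UNIT_PJ = 2.0  # hypothetical switching energy per unit of work
CYCLE_TIME_NS = 1.0       # hypothetical 1 GHz clock

for cap in (64, 16):
    trace = spread_activity(work_units=256, max_units_per_cycle=cap)
    peak_mw = max(trace) * ENERGY_PER_UNIT_PJ / CYCLE_TIME_NS  # pJ/ns == mW
    total_pj = sum(trace) * ENERGY_PER_UNIT_PJ
    print(f"cap={cap:2d}: {len(trace):2d} cycles, peak {peak_mw:.0f} mW, "
          f"switching energy {total_pj:.0f} pJ")
```

With these hypothetical numbers, capping at 16 units per cycle cuts peak power from 128 mW to 32 mW but stretches the job from 4 cycles to 16, with the same switching energy in both cases.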

The time element is being extended. “Thermal management is crucial for sustained workload scenarios, such as extended gaming or 4K video recording,” says Kinjal Dave, senior director of product management in Arm’s Client Line of Business. “The user experience deteriorates if the device overheats during gameplay since it can’t sustain high speeds continuously. This results in throttling, causing frame rates to drop, diminishing the gaming experience. For sustained power analysis, it is essential to determine how long certain workloads can be maintained, such as gaming for sustained periods of time without performance drops.”
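How long a workload can be sustained can be estimated, very roughly, with a single-RC thermal model. The sketch below assumes hypothetical values for ambient temperature, thermal resistance, time constant and throttle limit; real devices need multi-node thermal models calibrated against measurements.

```python
def time_to_throttle_s(power_w, r_th_c_per_w, tau_s, t_ambient_c, t_limit_c,
                       dt_s=0.1, horizon_s=3600.0):
    """Seconds until the die first reaches `t_limit_c`, or None if it never does.

    Single-RC thermal model, integrated with forward Euler steps:
        dT/dt = (T_ambient + P * R_th - T) / tau
    Real phones and consoles have several thermal time constants; this is a
    deliberate simplification.
    """
    temp_c, t = t_ambient_c, 0.0
    while t < horizon_s:
        if temp_c >= t_limit_c:
            return t
        temp_c += (t_ambient_c + power_w * r_th_c_per_w - temp_c) * dt_s / tau_s
        t += dt_s
    return None


def sustainable_power_w(t_ambient_c, t_limit_c, r_th_c_per_w):
    """Highest constant power whose steady-state temperature equals the limit."""
    return (t_limit_c - t_ambient_c) / r_th_c_per_w


# Hypothetical device: 25 C ambient, 45 C limit, 10 C/W, 60 s time constant.
print(sustainable_power_w(25.0, 45.0, 10.0))            # steady-state budget in W
print(time_to_throttle_s(1.5, 10.0, 60.0, 25.0, 45.0))  # under budget: never throttles
print(time_to_throttle_s(4.0, 10.0, 60.0, 25.0, 45.0))  # over budget: throttles after ~42 s
```

Under these assumptions anything at or below 2 W runs indefinitely, while a 4 W burst reaches the limit after roughly 42 seconds, at which point a real governor would lower the clock and the frame rate would drop.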

To understand many of these impacts, additional physics have to be brought into the analysis. “With the advent of 3D-IC, which brought the chip and the system closer together, there is a need to focus on new physics that we did not worry about in the chip design world before,” says Preeti Gupta, director of product management at Ansys. “We are seeing more sophistication in terms of boundary condition exchange. For example, electromigration analysis is about current density. Electric current has a direct relationship with temperature. Leakage goes up exponentially as temperature increases.”
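Because leakage rises with temperature and temperature rises with total power, power and thermal analyses have to exchange results until they agree. A minimal fixed-point sketch, assuming leakage doubles every 10°C (a hypothetical fitting parameter, not a process fact) and a single thermal resistance per package:

```python
def leakage_power_w(p_leak_ref_w, temp_c, temp_ref_c=25.0, doubling_c=10.0):
    """Exponential leakage model: leakage doubles every `doubling_c` degrees.

    The doubling interval is an assumed fitting parameter, not a process fact.
    """
    return p_leak_ref_w * 2.0 ** ((temp_c - temp_ref_c) / doubling_c)


def electrothermal_point(p_dyn_w, p_leak_ref_w, r_th_c_per_w, t_ambient_c,
                         t_abort_c=150.0, tol_c=1e-3, max_iter=200):
    """Iterate power -> temperature -> leakage until temperature settles.

    Returns (temperature_c, total_power_w), or None when the temperature runs
    past `t_abort_c` (thermal runaway in this toy model) or fails to settle.
    """
    temp_c = t_ambient_c
    for _ in range(max_iter):
        total_w = p_dyn_w + leakage_power_w(p_leak_ref_w, temp_c)
        new_temp_c = t_ambient_c + r_th_c_per_w * total_w
        if new_temp_c > t_abort_c:
            return None
        if abs(new_temp_c - temp_c) < tol_c:
            return new_temp_c, total_w
        temp_c = new_temp_c
    return None


# Same chip (2 W dynamic, 0.2 W leakage at 25 C) in two hypothetical packages.
print(electrothermal_point(2.0, 0.2, r_th_c_per_w=5.0, t_ambient_c=25.0))
print(electrothermal_point(2.0, 0.2, r_th_c_per_w=10.0, t_ambient_c=25.0))
```

With the lower thermal resistance the loop settles near 37°C; with the higher one it never settles, and the sketch reports runaway instead of a number. A single pass that ignored the feedback would have reported 47°C for the second case.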

This places greater stress on optimization tools. “The integration with sign-off is very important, whether it is timing analysis, or IR analysis, or power analysis, or variability analysis, or robustness analysis,” says Synopsys’ Chacko. “When an optimization tool is closely integrated with the analysis, we have integrated flows, such as timing, integrated IR, integrated power, integrated variability. We are calling these analysis engines in an automated way. The optimization is not just going off and doing its thing with one data point that came in the beginning.”
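A minimal sketch of what "integrated" means here: a greedy optimizer that re-runs every check on each candidate move instead of relying on one analysis taken at the start. The single knob `drive` and the analytic delay, power and IR-drop formulas are hypothetical stand-ins for real timing, power and IR engines, not a description of how any commercial tool is built.

```python
from typing import Callable, Dict, List, Tuple

Design = Dict[str, float]  # hypothetical: knob name -> value


def optimize(design: Design,
             moves: List[Callable[[Design], Design]],
             objective: Callable[[Design], float],
             checks: List[Callable[[Design], bool]]) -> Tuple[Design, float]:
    """Greedy optimizer that re-runs every check on every candidate move."""
    best, best_score = dict(design), objective(design)
    improved = True
    while improved:
        improved = False
        for move in moves:
            candidate = move(best)
            if not all(check(candidate) for check in checks):
                continue  # reject moves that break a power or IR limit
            score = objective(candidate)
            if score < best_score:
                best, best_score, improved = candidate, score, True
    return best, best_score


# Toy analytic stand-ins for timing, power and IR-drop engines (hypothetical).
def delay_ns(d: Design) -> float:
    return 10.0 / d["drive"]


def power_w(d: Design) -> float:
    return 2.0 * d["drive"]


def ir_drop_mv(d: Design) -> float:
    return 10.0 * d["drive"]


def upsize(d: Design) -> Design:
    return {**d, "drive": d["drive"] + 0.5}


def downsize(d: Design) -> Design:
    return {**d, "drive": max(0.5, d["drive"] - 0.5)}


def power_ok(d: Design) -> bool:
    return power_w(d) <= 8.0


def ir_ok(d: Design) -> bool:
    return ir_drop_mv(d) <= 35.0


best, best_delay = optimize({"drive": 1.0}, [upsize, downsize], delay_ns,
                            [power_ok, ir_ok])
print(best, round(best_delay, 3))
```

The optimizer stops at a drive of 3.5 because the next step would exceed the 35 mV IR-drop limit, a constraint it sees only because the IR check is re-run on every candidate.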

Optimization means getting as close to the limit as possible. “If you use actual temperatures on the wire segments, you can have a much more robust, optimized design,” says Ansys’ Gupta. “I say robust and optimized intentionally, because sometimes the worst temperature that design teams may be designing for may fall short of the actual worst temperature that the device may see. Secondly, you are probably over-designing by a large degree, where a majority of the instances do not hit the worst temperature. A fraction do. You’re giving up a lot of power, performance, and area with respect to that over design.”
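Both halves of Gupta's point can be illustrated with Black's equation, a standard electromigration lifetime model. The sketch assumes an activation energy of 0.9 eV, a current-density exponent of 2, a 105°C design corner and three hypothetical wire segments; production sign-off uses foundry-specified EM rules instead.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333e-5


def jmax_scale(temp_c: float, ref_c: float, ea_ev: float = 0.9, n: float = 2.0) -> float:
    """Allowed current-density multiplier at temp_c relative to ref_c.

    Holds MTTF constant under Black's equation, MTTF = A * J**-n * exp(Ea / kT):
        J(T) / J(T_ref) = exp(Ea / (n k) * (1/T - 1/T_ref))
    Ea and n are assumed values; real ones come from the foundry's EM rules.
    """
    t_k, ref_k = temp_c + 273.15, ref_c + 273.15
    return math.exp(ea_ev / (n * BOLTZMANN_EV_PER_K) * (1.0 / t_k - 1.0 / ref_k))


CORNER_C = 105.0  # single worst-case temperature assumed for every wire (hypothetical)

# segment -> (actual temperature in C, current density normalized to the limit at CORNER_C)
segments = {"seg_a": (70.0, 1.3), "seg_b": (115.0, 0.95), "seg_c": (90.0, 0.6)}

for name, (temp_c, j_norm) in segments.items():
    corner_ok = j_norm <= 1.0
    actual_ok = j_norm <= jmax_scale(temp_c, CORNER_C)
    print(f"{name}: corner check {'pass' if corner_ok else 'FAIL'}, "
          f"per-segment check {'pass' if actual_ok else 'FAIL'}")
```

Here the 70°C segment fails the corner check but passes at its real temperature, which is over-design, while the 115°C segment passes the corner check but fails at its real temperature, a violation a corner-based flow would miss.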

This is why AI increasingly is being used to help balance these factors. “Designers have a slew of different types of optimizations that they can try,” says Jim Schultz, senior staff product manager at Synopsys. “In many cases they have relied on the expert designers who have experience. They have ideas about what to try. But AI-driven tools have all these parameters available. They can try multiple parameters and see which ones give the best result. They can try a large solutions space.”
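The simplest version of trying a large solution space is random search. AI-driven tools typically use earlier results to decide what to try next; the sketch below swaps in uniform random sampling as a baseline. The knob names, their ranges and the cost function are hypothetical.

```python
import random

# Hypothetical implementation knobs and their allowed settings.
SPACE = {
    "utilization": [0.55, 0.60, 0.65, 0.70, 0.75],
    "max_fanout": [8, 16, 24, 32],
    "clock_uncertainty_ps": [10, 20, 30],
}


def toy_cost(cfg: dict) -> float:
    """Stand-in for a full implementation run that returns one PPA cost.

    Hypothetical: the cost is lowest at utilization 0.65, fanout 16, 20 ps.
    """
    return (((cfg["utilization"] - 0.65) * 100) ** 2
            + (cfg["max_fanout"] - 16) ** 2 / 8
            + abs(cfg["clock_uncertainty_ps"] - 20))


def random_search(space: dict, cost, trials: int, seed: int = 0):
    """Sample configurations uniformly and keep the cheapest one seen."""
    rng = random.Random(seed)
    best_cfg, best_cost = None, float("inf")
    for _ in range(trials):
        cfg = {knob: rng.choice(values) for knob, values in space.items()}
        c = cost(cfg)
        if c < best_cost:
            best_cfg, best_cost = cfg, c
    return best_cfg, best_cost


cfg, c = random_search(SPACE, toy_cost, trials=40)
print(cfg, round(c, 3))
```

Each trial here is a cheap function call; in a real flow each one is a full implementation run, which is why smarter selection of the next trial matters.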

While engineers may be attempting fine-grained optimizations, much larger margins, and much more performance, are being given up because the necessary analysis cannot be done early enough in the flow. “That has forever been the central conundrum of EDA design,” says Marc Swinnen, director of product marketing at Ansys. “You need to know future information to optimize early in the design flow, and so it’s always a question of estimating and using simpler hierarchical models, refining over time, and trying to avoid as many iterations as possible.”

As much as these low-level factors are tied together, a similar situation exists at higher levels. Few systems these days are designed to perform a single function at any one time. This makes it difficult to isolate system-level events and apply metrics to them. “The measurement of power at the system level can be done by measuring how much power or energy is consumed by macro events,” says Innergy’s Huilgol. “These are system-level events, like the execution of a sub-routine in the software or firmware. Characterized system-level power models can help estimate power consumption at this level. These models can be characterized using the system-level events that consume a lot of time, measured in microseconds or milliseconds.”
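A minimal sketch of a characterized macro-event model, assuming each event type has already been characterized with a fixed energy cost. The event names and energies are hypothetical.

```python
from collections import Counter

# Hypothetical characterized energy per macro event, in microjoules.
EVENT_ENERGY_UJ = {
    "sensor_read": 3.5,
    "dsp_filter": 40.0,
    "radio_tx": 250.0,
}


def estimate_energy_uj(event_trace):
    """Sum characterized per-event energies over a trace of macro events."""
    counts = Counter(event_trace)
    missing = set(counts) - set(EVENT_ENERGY_UJ)
    if missing:
        raise KeyError(f"uncharacterized events: {sorted(missing)}")
    return sum(EVENT_ENERGY_UJ[event] * n for event, n in counts.items())


# One hypothetical 1-second window of firmware activity.
trace = ["sensor_read"] * 100 + ["dsp_filter"] * 10 + ["radio_tx"] * 2
energy_uj = estimate_energy_uj(trace)
window_s = 1.0
print(f"{energy_uj:.0f} uJ in {window_s} s -> {energy_uj / window_s:.0f} uW average")
```

Raising an error for uncharacterized events is a design choice: silently treating them as free would understate power.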

New metrics

While low-level optimization remains important, system-level metrics are becoming a lot more prominent. “There’s the business aspect of it, and then there’s the engineering aspect of it,” says Paul Karazuba, vice president of marketing at Expedera. “From a business aspect, it’s understanding the rank ordering of what a customer’s most important desires are going to be. And then, from a technical aspect, it’s understanding what can reasonably be done within those constraints, within those boundary conditions of the customer’s goals.”

Those goals have to be captured in a meaningful way. “Within the context of a modern system, PPA must now be evaluated from the perspective of specific use cases, in addition to the benchmarks,” says Arm’s Dave. “For GenAI workloads, metrics like Time to First Token can be measured, or Tokens per Second for sustained workloads. System benchmarks could involve Frames per Second or Frames per Watt for gaming, where power efficiency and performance is gauged best by these metrics. Security applications might focus on the performance cost of each new security feature, emphasizing the minimization of this expense alongside performance and silicon costs.”
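These use-case metrics are straightforward to compute once the right timestamps and power numbers are captured. The sketch assumes tokens per second is measured over the decode phase only, one of several conventions in use; the traces are hypothetical.

```python
def llm_latency_metrics(request_time_s, token_times_s):
    """Time to First Token and decode-phase tokens per second from timestamps."""
    ttft_s = token_times_s[0] - request_time_s
    decode_span_s = token_times_s[-1] - token_times_s[0]
    # Assumption: tokens/s is measured after the first token, so prefill
    # latency is reported once (as TTFT) instead of diluting the rate.
    if decode_span_s > 0:
        tokens_per_s = (len(token_times_s) - 1) / decode_span_s
    else:
        tokens_per_s = float("nan")
    return ttft_s, tokens_per_s


def frames_per_watt(frames, duration_s, avg_power_w):
    """(Frames per second) per watt, i.e. frames per joule."""
    return (frames / duration_s) / avg_power_w


# Hypothetical trace: first token after 0.5 s, then one token every 50 ms.
tokens = [0.5 + 0.05 * i for i in range(101)]
ttft, tps = llm_latency_metrics(0.0, tokens)
print(f"TTFT {ttft:.2f} s, {tps:.1f} tokens/s")
print(f"{frames_per_watt(3600, 60.0, 4.0):.1f} frames/s per W")
```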

Success at this level requires a system focus. “For AI, the hardware tends to consist of smaller cores repeated hundreds or thousands of times,” says Huilgol. “The software running on top of AI hardware tends to be complex. It requires learning new ways of doing things. Is your power consumption still within your original goals as software keeps changing? This is a new and important challenge. It can be solved by building advanced power models of the system that can show you dynamic power consumption as software runs.”
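One way to keep checking whether power is still within the original goals is to re-evaluate a simple power model against every software release. The sketch assumes identical cores with a fixed per-core active power and a per-window count of active cores; all numbers are hypothetical.

```python
def window_power_w(active_cores, per_core_w, uncore_w):
    """Per-window power for an array of identical cores plus fixed uncore power."""
    return [n * per_core_w + uncore_w for n in active_cores]


def windows_over_budget(trace_w, budget_w):
    """Indices of the time windows whose power exceeds the budget."""
    return [i for i, p in enumerate(trace_w) if p > budget_w]


# Hypothetical accelerator: 1,024 identical cores, 50 mW each when active,
# 5 W of always-on uncore, and a 50 W budget fixed when the chip was specified.
PER_CORE_W, UNCORE_W, BUDGET_W = 0.05, 5.0, 50.0

# Active-core counts per time window, e.g. extracted from a simulation
# of each software release (values are made up for illustration).
releases = {
    "sw_v1": [600, 800, 700, 750],
    "sw_v2": [600, 950, 1000, 720],
}

for name, active in releases.items():
    trace = window_power_w(active, PER_CORE_W, UNCORE_W)
    print(name, [round(p, 1) for p in trace], "over budget in windows:",
          windows_over_budget(trace, BUDGET_W))
```

In this example the first release stays under the 50 W budget in every window, while the second exceeds it in two windows on the same hardware.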

For some tasks, internal metrics drive operations. “Wire length is king,” says Arteris’ Nightingale. “This is because it affects power consumption, signal delay, area, and reliability. That is linked to EDP, an energy measurement that combines energy and delay. How long has it taken something to do a job, and how much energy has it consumed while it’s been doing it? You could get the same EDP from something that does something really fast but burns a lot of power, or something that takes absolutely ages but is very efficient in terms of the energy usage. There’s a new terminology coming along called Speed up, Power up and Green up, that adds to these metrics. These are becoming more essential to assess the balance, because the performance, power efficiency and now environmental impact of a system are coming into play.”
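The EDP tie Nightingale describes is easy to reproduce. EDP is energy multiplied by delay, so with energy equal to average power times runtime, EDP equals power times runtime squared. Two hypothetical designs finishing the same job:

```python
def energy_and_edp(avg_power_w, runtime_s):
    """Return (energy in J, energy-delay product in J*s)."""
    energy_j = avg_power_w * runtime_s
    return energy_j, energy_j * runtime_s


sprinter = energy_and_edp(avg_power_w=10.0, runtime_s=1.0)     # fast, power-hungry
marathoner = energy_and_edp(avg_power_w=0.625, runtime_s=4.0)  # slow, frugal
print(f"sprinter:   {sprinter[0]:.1f} J, EDP {sprinter[1]:.1f} J*s")
print(f"marathoner: {marathoner[0]:.1f} J, EDP {marathoner[1]:.1f} J*s")
```

Both reach an EDP of 10 J·s, yet the slow design uses a quarter of the energy, so EDP alone cannot say which one a given product needs.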

More voices are coming to the table, too. “For electrical engineering, semiconductors, electronic systems, our work is only getting better the more diverse a range of voices that we have involved in the conversation,” says Cadence’s Knoth. “We are getting higher-quality systems and circuits produced, because it’s not just the electrical engineer anymore who is designing the semiconductor. You’ve got the mechanical engineer involved. You’ve got the software engineer involved. You’ve got functional verification people. You have use-case designers. You have people who are really concerned with the overall life of this product. It’s all about evolving the tools so these other voices can be contributing to the conversation.”

But there are problems associated with bringing EDA, systems and software together. “If you go to the system engineering community, they use diagram-centric tools,” says Siemens’ Hamza. “It is designed for a human in the loop. On the EDA side, they use data-centric tools, where you generate models, develop use cases, and analyze them. The problem with the current tooling is that system tools cannot produce enough fidelity and deterministic models that can be used in EDA. We need to invent new tooling for system.”

Models and information need to be exchanged between these groups. “We are seeing a system-level person ask for a chip thermal model so they can run it in a system level context, with the air flow and the water cooling,” says Ansys’ Gupta. “We have had people ask for the chip power model and the power integrity model. You’re doing the package design, you want the chip power model for the chips in order to do the system-level power integrity. But there is a need for this modeling to emerge in a more standardized way of communicating information, whether it be thermal, power, signal information, or timing.”

Still, progress is being made. “There is a new standard coming out — SysML-v2 — that will deliver data centric models for the system community,” says Hamza. “Now, when you build models, they can become connected to the EDA flow. Another element missing is that requirements are not linked all the way from the system to the EDA level. Verification needs to be linked all the way to the system, because if we verify something and find a problem, the system engineer doesn’t know what happened. The knowledge about verification needs to go all the way back to the system level so they can understand when something is not working, or the performance is not good. They can then change that, but they need to communicate. There are multiple pieces we are working on to connect the EDA domain to the system domain.”
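The missing link Hamza describes is essentially traceability: a failing verification result should be able to walk back up to the system requirement it ultimately affects. A minimal sketch, assuming each requirement records the requirement it refines; this is not SysML v2 syntax or any tool's API, and all identifiers are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Requirement:
    req_id: str
    text: str
    parent: Optional[str] = None  # id of the requirement this one refines


@dataclass
class VerificationResult:
    test_id: str
    verifies: str  # id of the requirement the test covers
    passed: bool


def trace_to_root(req_id: str, reqs: Dict[str, Requirement]) -> List[str]:
    """Follow parent links from a low-level requirement up to the system level."""
    chain = [req_id]
    while reqs[chain[-1]].parent is not None:
        chain.append(reqs[chain[-1]].parent)
    return chain


def impacted_system_requirements(
        reqs: Dict[str, Requirement],
        results: List[VerificationResult]) -> Dict[str, List[Tuple[str, List[str]]]]:
    """Group failing tests by the top-level requirement they ultimately affect."""
    impacted: Dict[str, List[Tuple[str, List[str]]]] = {}
    for result in results:
        if not result.passed:
            chain = trace_to_root(result.verifies, reqs)
            impacted.setdefault(chain[-1], []).append((result.test_id, chain))
    return impacted


reqs = {r.req_id: r for r in [
    Requirement("SYS-1", "Sustain target frame rate for a 30-minute session"),
    Requirement("HW-7", "GPU cluster sustained power under budget", parent="SYS-1"),
    Requirement("RTL-42", "Clock gating on idle shader cores", parent="HW-7"),
]}
results = [VerificationResult("tb_clkgate_042", "RTL-42", passed=False),
           VerificationResult("tb_smoke_001", "HW-7", passed=True)]
print(impacted_system_requirements(reqs, results))
```

The failing RTL-level test surfaces against SYS-1 together with the full chain of requirements in between, which is the context a system engineer needs to decide what to change.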

Conclusion

How we gauge the quality of a system is changing. It no longer can be defined by a few numbers, because those numbers only have meaning for a defined context. That context involves both the software and the system.

Each new node adds to the complexity of finding the right optimization space for a design. While AI may be able to help find a local minimum within a cell or block, the real challenge is at higher levels of abstraction, where architectural choices are made. More models are required before this can be handled automatically by tools.
