PCIe over Optical: Transforming high-speed data transmission

The rise of AI demands new computing models and faster data transmission methods, and the need for innovative, high-performance, low-latency connectivity solutions has never been more apparent. PCIe over Optical is set to play a key role in enabling the growth of AI. This article examines its implementation, challenges, and potential.

The driving forces

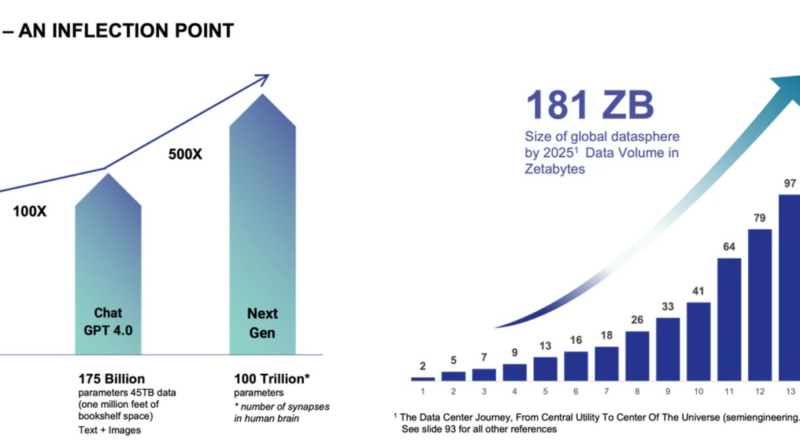

The data center landscape is undergoing a transformative shift. The models behind AI services such as ChatGPT have grown rapidly, from 1.5 billion parameters (GPT-2) to 175 billion parameters (GPT-3). The next generations of generative AI (genAI) platforms are expected to use somewhere in the region of 100 trillion parameters, which, for context, is roughly the number of synapses in a human brain. Models of this scale require immense data processing capabilities.

Relentless growth in data consumption places exponentially increasing demands on data center networks, with global data creation set to reach more than 180 zettabytes by next year. That is up 50% on 2023 and up 200% on 2020.

Fig. 1: Projected growth in AI parameters and global data creation.

This surge in data volume underscores the need for robust connectivity solutions capable of handling high-speed data transfer with minimal latency.
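The growth figures quoted above can be sanity-checked with a few lines of arithmetic. The sketch below is illustrative only: the function name `implied_baseline` is hypothetical, and the baseline volumes it derives are back-calculated from the article's own percentages, not taken from an independent data source.

```python
def implied_baseline(target: float, pct_increase: float) -> float:
    """Return the earlier value that `target` exceeds by `pct_increase` percent."""
    return target / (1.0 + pct_increase / 100.0)


# Figures quoted in the text.
PROJECTED_ZB = 180.0          # projected global data creation, zettabytes
SMALL_MODEL_PARAMS = 1.5e9    # smaller model size quoted in the text
LARGE_MODEL_PARAMS = 175e9    # larger model size quoted in the text

# "Up 50% on 2023" and "up 200% on 2020" imply these earlier volumes.
baseline_2023_zb = implied_baseline(PROJECTED_ZB, 50.0)
baseline_2020_zb = implied_baseline(PROJECTED_ZB, 200.0)

# Growth factor between the two quoted parameter counts.
param_growth = LARGE_MODEL_PARAMS / SMALL_MODEL_PARAMS

print(f"Implied 2023 volume: {baseline_2023_zb:.0f} ZB")
print(f"Implied 2020 volume: {baseline_2020_zb:.0f} ZB")
print(f"Parameter growth factor: {param_growth:.1f}x")
```

Because the text says "more than 180 zettabytes", the implied baselines of about 120 ZB and 60 ZB should be read as approximate.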

Disaggregated computing is becoming a critical model in enabling this, with memory and storage shared in centralized pools for greater efficiency and capacity. This model relies on low-latency connectivity. As distances grow and data rates increase, linear pluggable optics (LPOs) are a potential solution for distribution. When direct attach cables cannot meet the reach requirement, LPOs support longer reach with minimal impact on latency and power.

Unlike traditional, fully retimed optical transceivers, LPOs eliminate the clock and data recovery (CDR) and digital signal processing (DSP) components, which lowers both power consumption and latency.

This simplification, however, requires highly sophisticated SerDes technology to ensure interoperability and efficient data transmission.

Interoperability and implementation

No technology succeeds in a vacuum, and one significant hurdle to implementing LPO is interoperability. The supporting ecosystem, however, is growing.

The Optical Internetworking Forum (OIF) has been working on CEI-112G-Linear since 2020, and its demonstration at OFC 2024 showed that multi-vendor LPO configurations are possible. PCIe can build on this work to increase bandwidth while reducing power, latency, and cost.

Optical budgets for CEI-112G-Linear are already established in the IEEE 802.3 specification, which defines an electrical channel and interface that can close the link without the use of a DSP. Interoperability has also been shown to be possible: at OFC 2024, Alphawave took part in the largest interoperability event for LPO, demonstrating compatibility alongside Broadcom and 12 module vendors.

Separately, Alphawave undertook an un-retimed optics study with multiple optical vendors. The Alphawave PipeCORE PCIe 6.0 subsystem IP for both PCIe and CXL, running on an evaluation board, drove the optical systems with PCIe 6.0 data and consistently achieved BERs of less than 1×10⁻⁹. Measured against the 1×10⁻⁶ raw bit error rate that PCIe 6.0 is designed to tolerate before forward error correction, that is at least three orders of magnitude of performance margin.

Momentum is building, with further optical demonstrations driven by Alphawave and its ecosystem partners, including InnoLight, Tektronix, TE Connectivity, Ayar Labs, Amphenol and Nubis. These demonstrations have taken place at DesignCon, OFC, CXL DevCon, and PCIe DevCon, where they generated excitement about upcoming applications using PCIe and CXL over Optical.

Fig. 2: Alphawave Evaluation Board driving 64 Gbps into the Nubis optical engine, with a total electrical loss of less than 15 dB at Nyquist, showing a BER of less than 1×10⁻⁹.
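To put the caption's numbers in context, the sketch below converts the lane rate into a Nyquist frequency and the loss figure into an amplitude ratio. It assumes the link uses PAM4 signaling (2 bits per symbol), as PCIe 6.0 does at 64 GT/s; the caption itself does not state the modulation. The function names are hypothetical.

```python
def nyquist_ghz(data_rate_gbps: float, bits_per_symbol: int) -> float:
    """Nyquist frequency in GHz: half the symbol (baud) rate."""
    baud_rate_gbd = data_rate_gbps / bits_per_symbol
    return baud_rate_gbd / 2.0


def amplitude_ratio_from_loss(loss_db: float) -> float:
    """Fraction of the signal amplitude remaining after `loss_db` of loss."""
    return 10.0 ** (-loss_db / 20.0)


DATA_RATE_GBPS = 64.0   # per-lane rate from the caption
PAM4_BITS = 2           # assumption: PAM4, as used by PCIe 6.0
LOSS_DB = 15.0          # upper bound on electrical loss from the caption

print(f"Nyquist frequency: {nyquist_ghz(DATA_RATE_GBPS, PAM4_BITS):.0f} GHz")
print(f"Amplitude remaining at {LOSS_DB:.0f} dB loss: "
      f"{amplitude_ratio_from_loss(LOSS_DB):.3f}")
```

With these assumptions the Nyquist frequency is 16 GHz, and a 15 dB loss leaves about 18% of the transmitted amplitude at that frequency. With no DSP in the module, the host SerDes has to equalize this channel on its own.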

However, some complications persist. Optical component nonlinearities and part-to-part variation complicate transmitter configuration and make it harder to deploy these solutions at scale.

Conclusion

By taking advantage of technologies like LPOs and sophisticated SerDes, PCIe over Optical offers a path to higher-bandwidth, lower-power, and lower-latency data transfer. While there are challenges to be addressed, such as achieving interoperability and managing signal integrity, the potential benefits far outweigh these hurdles.

As the data landscape continues to evolve, the role of PCIe over Optical in enabling efficient, high-speed data transfer will become increasingly vital. PCIe 7.0 and faster rates have little chance of working rack-to-rack over standard copper connections, so retimers or LPOs will be the only realistic options to support the industry. The ecosystem is building. With ongoing advancements and real-world demonstrations validating these hybrid capabilities, PCIe and CXL are poised to operate over optical channels and revolutionize future data centers.