Nvidia Unveils New Blackwell A.I. Chips at GTC: Everything to Know – Observer

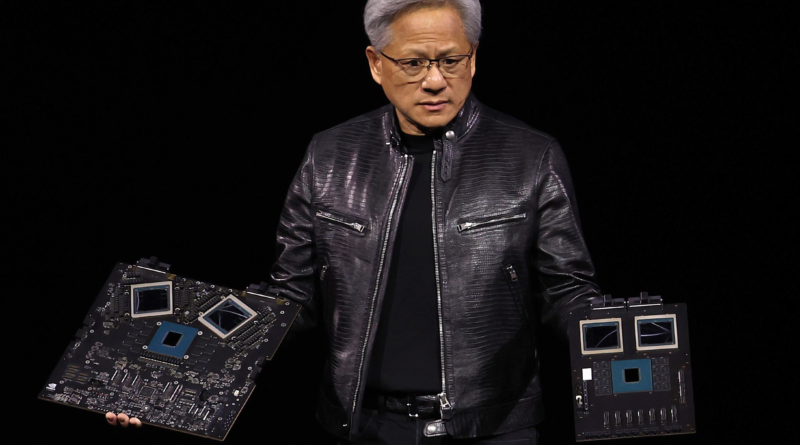

Nvidia (NVDA), long known as a designer of graphics chips for computer game players, yesterday (March 18) jumped into the nascent A.I. business in a very big way. During his keynote address at Nvidia’s GTC developer conference in San Jose, Calif., CEO Jensen Huang laid out a vision of a world where A.I. can be used to optimize any system, physical or digital, to improve its performance.

But first, you have to appreciate the magnitude of this widely anticipated event. In the middle of the conference, a sort of tech trade show with over 20,000 attendees, the entire crowd suddenly left the San Jose Convention Center and walked nearly a half mile through downtown San Jose to the SAP Center to see Huang’s keynote address. The crowd making this trek was so massive that it blocked all downtown traffic for almost an hour.

Onstage, Huang laid out one of the most ambitious and comprehensive game plans ever set forth in the technology industry. He first unveiled a next-generation processor called Blackwell, which Nvidia says is substantially more powerful than its predecessors. The first Blackwell-based chip is called the GB200 and will ship later this year.

The chip, or rather chipset, is a massive object (about the size of a retail shirt box, but much heavier) containing 32 Grace CPUs and 72 Blackwell GPUs. Blackwell units can be used alone or connected in groups ranging from two to several thousand, conceivably filling a warehouse-sized building much like today’s internet server farms, all operating as a single, monolithic system.

Each Blackwell unit includes a new Nvidia Omniverse AI software system and a new Nvidia Inference Microservice (NIM), which is the digital equivalent of a team of specialized systems analysts. Unlike a systems analyst, who must meticulously study systems one by one to analyze and test how they can be made better and safer, the NIM directs a “NeMo Retriever” (which is like a very sophisticated bot or computer virus) to immerse itself in the system and create a “digital double” of that system within the Omniverse AI, including physical specifications and any software it finds. For example, the NeMo Retriever could create a digital double of a hospital and then compute various ways to optimize and improve the entire operation, from the physical infrastructure of the building itself to the radiology setup or sample testing software in the oncology department.

“The system takes in the data, learns its meaning, and then reindexes it into the Omniverse AI database, where it can talk to and improve the system,” Huang said during his keynote. “It can extract a document such as a PDF and encode it, where it becomes a set of vectors which can be tested.”

The Omniverse system itself even has a digital double, enabling it to detect, for example, if a single GPU within a Blackwell monolithic chipset is faulty and needs to be replaced. Because it relies on advanced A.I. technology and has a “prime objective” of digitally doubling nearly everything in its environment, the platform is flexible enough to produce results across a wide range of industries. But it is also attuned to important findings such as confidential and proprietary data, said Huang.

In one health care application, for example, Nvidia has a NIM called Diane. “She is a digital human NIM, or more accurately, a Hippocratic AI or LLM for the healthcare market,” Huang explained. The result of all of this optimization, Huang said, will be “a massive upgrade to the world’s A.I. infrastructure.”

Getting there will require extensive collaboration. Huang indicated Nvidia is already well down that road. For example, in a surprise announcement, Huang said Apple (AAPL) is collaborating to integrate Nvidia’s Omniverse into Apple’s Vision Pro VR headset. He also revealed that TSMC, the world’s leading semiconductor foundry, will manufacture Blackwell chipsets. In addition, Siemens Industries is helping Nvidia build the digital metaverse, while Dell will be the first computer systems provider to incorporate the new A.I. into its systems.

Huang also touted enterprise consulting partners such as SAP, ServiceNow, Cohesity, NetApp and Snowflake. “The service industry is sitting on a gold mine,” Huang said.

Meanwhile, Apple, which was thought to be out of the A.I. game altogether, published a paper yesterday about a new A.I. model called MM1. MM1 is a family of multimodal models with up to 30 billion parameters, featuring enhanced in-context learning as well as multi-image and chain-of-thought reasoning.

Also yesterday, Bloomberg reported that Apple is in talks to integrate Google’s Gemini A.I. into future iPhones. Gemini is already being integrated into Samsung phones and tablets. Bloomberg’s sources said Apple had also been in talks with OpenAI to use its technology in new iPhones, but Google is a more likely partner. Apple’s own generative A.I. models are still under development.
