The Promises and Perils of Parallel Test

Testing multiple devices at the same time does not deliver a proportional reduction in overall test time, due to a combination of test execution issues, the complexity of the devices being tested, and the complex tradeoffs that parallelism requires.

Parallel testing is now the norm — from full wafer probe DRAM testing with thousands of dies to two-site testing for complex, high-performance computing — but how much parallelism to add is not a straightforward formula. Efficiency gains can vary, depending on the ability to match DUT attributes to an ATE configuration. Moreover, increasing parallel test causes operational consequences on the factory floor.

The tradeoffs are more complicated than simply increasing the number of DUTs on the same test interface board. There are physical limits to how many instruments can fit into the tester chassis. Engineering teams need to consider several attributes that can directly affect a consistent test process: thermal, mechanical, and multiple electrical attributes in a test cell (ATE, DUT board, prober/handler, software). While multiple instruments are needed, adding more of them has cost implications. And while resource sharing can help lower ATE costs, it results in serialization of the associated tests. Additionally, the increased complexity of test boards brings engineering and economic costs.

Still, device makers find parallel testing is worth pursuing even for mature products.

“The key driver is cost of test (CoT) per unit, which eventually impacts product ASP,” said Nicolas Cathelin, business development manager of automation and interface solutions at Advantest Europe. “This applies to all products, especially for those with mature processes and fully amortized equipment. We recently worked with a customer in transferring a full product family from x16 to x32. Despite the many technical challenges, the project is a success from CoT reduction perspective. This motivated the customer to do the same for additional product families.”

However, every product that uses multi-site testing has its own unique attributes. “Device segment absolutely matters,” said Lauren Getz, product manager at Teradyne. “Low-complexity, low-cost devices are almost always going to be driving increased parallelism and efficiency, while more complex devices are driving increased requirements. As device products become more mature in the market, it is usually driven to provide cost savings and additional value of the tester investment.”

To increase parallelism, engineering teams need to consider multiple factors. Among them:

- DUT power draw, pin count, and circuitry performance metrics, all of which drive ATE instrumentation requirements;

- Balancing DUT properties with ATE resources and other test cell components, including thermal control, probe/test interface board, probe tips or sockets, and handler capabilities;

- Thermal, mechanical, and electrical characteristics, which can impact test accuracy across multiple sites;

- Increased complexity in PCB routing due to higher die/unit counts and to board muxes (which can communicate signals to a main controller) that share ATE instruments between multiple sites.

“Optimization with respect to manufacturing test cost usually starts as soon as production volumes ramp and the relevance of reducing costs is emphasized,” noted David Appello, vice president of center of excellence at Technoprobe. “There are two key factors to consider when optimizing manufacturing test cost. On one side a primary objective is reducing the amount of money necessary to test one good device. At the same time, achieving a lower cost per good device may require investments and non-recurring engineering (NRE) efforts, which affect the budget available to the project. Without considering these investments and the NREs, the implicit cost reduction from parallel test will be inflated.”
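Appello's point about NRE can be made concrete with a rough per-unit calculation. The sketch below is an illustrative model only, not Technoprobe's method: the function name, the way NRE is spread evenly over volume, and every number are assumptions chosen for the example.

```python
def cost_per_good_device(cell_cost_per_hour, touchdown_time_s, sites,
                         yield_fraction, nre_cost=0.0, volume=1):
    """Illustrative cost model (assumed, not taken from the article).

    One touchdown tests `sites` devices in `touchdown_time_s` seconds.
    NRE is spread evenly over `volume` good devices.
    """
    good_per_touchdown = sites * yield_fraction
    run_cost = cell_cost_per_hour * (touchdown_time_s / 3600.0) / good_per_touchdown
    return run_cost + nre_cost / volume


# Hypothetical numbers: an x16 baseline vs. x32 that needs new hardware (NRE).
x16 = cost_per_good_device(200.0, 20.0, 16, 0.95)
x32_no_nre = cost_per_good_device(200.0, 22.0, 32, 0.95)
x32_with_nre = cost_per_good_device(200.0, 22.0, 32, 0.95,
                                    nre_cost=150_000.0, volume=5_000_000)
print(f"x16: {x16:.4f}  x32 (no NRE): {x32_no_nre:.4f}  "
      f"x32 (with NRE): {x32_with_nre:.4f}")
```

With these made-up inputs the per-device cost is roughly 0.073 at x16, 0.040 at x32 when NRE is ignored, and 0.070 once NRE is included, so most of the apparent saving disappears.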

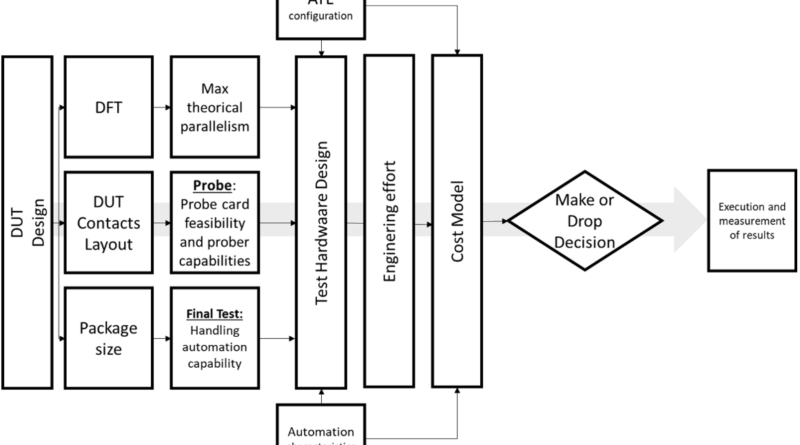

Fig. 1: Factors influencing test parallelism from design to manufacturing. Source: Technoprobe

Test cell hardware considerations

All test cells include test equipment for power and measurement, a contact solution (e.g., probe tips), and a PCB that redistributes signals and power from the equipment to the device under test. They also require equipment for the physical handling of wafers/units, environmental controls, operational software for the testing, computation for analytics, and communication to a factory’s MES. When designing a multi-site test solution, engineers need to consider mechanical, thermal, and electrical requirements in conjunction with DUT properties, test cell attributes, and test economics.

Fig. 2: Thermal, mechanical, and electrical considerations influence test cell hardware design. Source: A. Meixner/Semiconductor Engineering

The range of mechanical attributes includes handler opening, mechanical forces at wafer-level and package test, and probe card and test board physical attributes. The mechanical forces are essential for ensuring good electrical contact, and a test board needs enough stiffness to withstand the physical stress associated with higher multi-site counts.

“From a physical test equipment point of view you need to look at the individual test cell components,” said Advantest’s Cathelin. “The handler has a limited test site opening, and also a defined maximum number of possible test sites for a given maximum package size. The sockets are often overlooked, but they also play a role since their size is directly correlated to the device size. Also, the choice of contacting technology plays a role. Pogo-pin sockets allow for larger DUT packages compared to cantilever-pin-based sockets.”

Sufficient force is also needed for multi-site testing to ensure co-planarity when contact is made. That, in turn, determines the materials and shape of the probe tip or contactor element.

“The handler picks up parts and aligns parts to the socket,” said Kevin Manning, system engineer at Teradyne. “Then it provides force that pushes the parts against the socket on the tester. Consider the site count of 64 sites. The handler pushes all 64 sites at once. But with package test, the sites are always bigger than probe (i.e. wafer test). So 64 sites at probe could actually be a very compact array, but at package that’s going to be a big array. It’s going to be a big window in the side of the handler that is pushing parts out, and that has thermal implications. They must control the temperature in that big chamber.”

The thermal needs for testing vary according to industry sector and test cell solutions. But thermal control becomes more challenging when a consistent temperature environment is required across multiple dies or packaged units.

“Some high-performance devices, and all automotive devices, need to be tested at hot and cold temperatures per their operating conditions,” said Sri Raju Ganta, principal product manager in product management and markets group at Synopsys. “The accommodations for thermal requirements at each test site also could limit the number of parallel test sites.”

Others concur that thermal challenges increase with higher levels of parallelism, especially with wide temperature ranges. “For instance, in automotive applications, the handler needs to provide temperature at +150° C or -50° C within +/-3° C across all test sites,” noted Advantest’s Cathelin. “Today, this is very common up to x16. When moving to x32, only a very few players can achieve those requirements in a stable and repeatable way.”

In addition, engineers need to consider the tester with respect to thermal solutions. “The heat dissipation capabilities of the ATE system also can influence the maximum achievable parallelism,” said Simondavide Tritto, worldwide performance digital COE manager at Advantest. “While the thermal characteristics of the test fixture determine the maximum site count, the cooling effectiveness and the thermal stability of the ATE instruments also need to be considered.”

The availability of measurement instruments and power supplies within an ATE’s chassis represents another set of parameters for trading off DUT attributes against the amount of achievable parallelism. Logic SoCs require digital electronics cards, while microwave devices require RF instruments. Mixed-signal devices, meanwhile, require arbitrary waveform generators (AWG).

Test equipment power supplies have limitations, as well. “An ATE system has a maximum rating for the power it can handle,” said Advantest’s Tritto. “Those ratings can be global to the whole infrastructure and/or local to each instrument card. For these reasons, in many cases a power budget calculator is needed (provided by the ATE vendor) to make sure the target parallelism is supported by the power capabilities of the tester infrastructure.”
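The kind of check such a power budget calculator performs can be sketched as follows. This is not any vendor's actual tool. The function name, the limits, and the simplification that each site is powered by exactly one card with sites spread evenly across cards are all assumptions for illustration.

```python
import math


def max_sites_by_power(dut_power_w, card_limit_w, cards, system_limit_w):
    """Largest site count that respects both per-card and system-wide limits.

    Assumes each site draws `dut_power_w` from a single card and that sites
    are distributed evenly across `cards` identical cards (a simplification).
    """
    sites_per_card = math.floor(card_limit_w / dut_power_w)  # local limit
    local_cap = sites_per_card * cards
    global_cap = math.floor(system_limit_w / dut_power_w)    # global limit
    return min(local_cap, global_cap)


# Hypothetical: 12 W per DUT, 8 cards rated at 60 W each, 400 W system budget.
print(max_sites_by_power(12.0, 60.0, 8, 400.0))  # prints 33
```

In this example the cards alone would allow 40 sites, but the system-wide budget caps the result at 33, which shows why both the local and the global ratings have to be checked.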

Parallelism also multiplies the number of power rails that must be supplied, although instrument vendors say this is manageable. “Power supply instrumentation is generally optimized to offer resource granularity that facilitates both low and high site count test across a very wide range of power levels,” said Michael Keene, senior systems engineer at Teradyne. “This means that generating the large number of discrete voltage rails required to power devices of varying complexity is well within reach for the instrumentation, even as site count scales up.”

Adding more test sites at the test interface board increases complexity. Both signal integrity and power integrity considerations must be made by the engineer designing the probe card or unit card test board.

Inefficiencies and opportunities during production test

Mathematically, the test time of an N-site test scenario never exactly equals the single-site test time. In addition, the test time reduction lessens across a DUT’s production cycle once the realities of test operations on a factory floor are taken into account.
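One commonly used way to quantify this gap is a parallel (multi-site) test efficiency figure, which compares the extra time each added site costs against the single-site test time: PTE = 1 - (T_N - T_1) / ((N - 1) * T_1). The article does not give a formula, so this definition, the function names, and the numbers below should be read as assumptions.

```python
def parallel_test_efficiency(t_single, t_multi, sites):
    """PTE = 1 - (T_N - T_1) / ((N - 1) * T_1).

    1.0 means N sites take no longer than one site (ideal parallelism);
    0.0 means N sites take N times as long (fully serial).
    """
    if sites < 2:
        raise ValueError("sites must be at least 2")
    return 1.0 - (t_multi - t_single) / ((sites - 1) * t_single)


def time_per_device(t_multi, sites):
    """Effective test time per device for one multi-site touchdown."""
    return t_multi / sites


# Hypothetical: 10 s single-site, 13 s for a 16-site touchdown.
pte = parallel_test_efficiency(t_single=10.0, t_multi=13.0, sites=16)
print(f"PTE = {pte:.2%}, time per device = {time_per_device(13.0, 16):.4f} s")
```

Here the efficiency is 98%, yet the effective time per device is 0.8125 s rather than the ideal 0.625 s, so even a small loss in efficiency is visible at high site counts.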

“I would like to highlight four effects that increased parallelism has on the operational aspects of manufacturing test,” said Technoprobe’s Appello. “There is an inflation effect called parallelism efficiency. This is due to some process serialization happening inside the ATE. [1] The second element is sometimes called parallel efficiency, when for incidental reasons one test site faces performance issues and is excluded from operations. In that way, the overall test operations can continue, but their throughput is reduced by 1/N for each site closed and until a maintenance intervention is scheduled.” The additional effects are due to on-line retesting of wafers and units and the impact of downtime due to equipment or test card repairs.
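The 1/N throughput loss per closed site can be illustrated with a small units-per-hour calculation. This is a simplified sketch that assumes the touchdown and index times do not change when a site is disabled. The function name and all values are hypothetical.

```python
def units_per_hour(touchdown_time_s, total_sites, disabled_sites=0,
                   index_time_s=0.0):
    """Units tested per hour when some sites are closed.

    Assumes touchdown and index times are unaffected by disabling sites.
    """
    active = total_sites - disabled_sites
    if active < 0:
        raise ValueError("disabled_sites cannot exceed total_sites")
    touchdowns_per_hour = 3600.0 / (touchdown_time_s + index_time_s)
    return active * touchdowns_per_hour


# Hypothetical x8 setup: 18 s test time plus 2 s index time per touchdown.
full = units_per_hour(18.0, 8, index_time_s=2.0)
one_closed = units_per_hour(18.0, 8, disabled_sites=1, index_time_s=2.0)
print(full, one_closed, (full - one_closed) / full)
```

With these inputs throughput drops from 1,440 to 1,260 units per hour, a loss of exactly 1/8, and it stays at that level until the site is repaired.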

Parallelism efficiency directly relates to the sharing of ATE test instruments. With respect to DUT attributes, engineers make tradeoffs of pins, test instruments, test interface board area, and complexity for an achievable and affordable solution. One approach is to share test instruments between DUTs, which inherently serializes part of the test program execution.

“What you really strive for architecturally is a bunch of independent testers, almost one per test site,” said Teradyne’s Manning. “The best you can approximate will get you close to test time benefits. However, that tends not to be always economical. An obvious case is in resource sharing, in which one site has to wait for another site to finish with a resource (test instrument). For example, this could be a resource type that has very low utilization because otherwise it’s mostly idle.”

Resource sharing is prevalent in analog devices and mixed-signal SoCs. “Suppose for each of N devices that you’re testing in parallel you have two AWGs going into each device,” said Craig Force, business development manager at Emerson Test and Measurement. “As N increases, the number of AWGs grows very quickly, and the ATE becomes very expensive. A solution is to have one AWG fan out to multiple devices. Now you have multiple devices being tested, but you’re not testing them in a parallel fashion because you have to serialize using the AWG, which is fanned out to multiple DUTs. This is typically referred to as ping-pong, which means that we’re going from this device, then to this device, then to this device, etc., and that is not organically parallel.”
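The effect of ping-pong testing on touchdown time can be approximated with a simple model. This is a sketch under stated assumptions: the shared portion of the test cannot overlap with the rest of the program, each shared instrument serves its sites strictly one at a time, and sites are divided evenly among the shared instruments. The function and parameter names are hypothetical.

```python
import math


def multisite_time(t_parallel, t_shared, sites, shared_instruments):
    """Touchdown time when a shared instrument serves sites one at a time.

    t_parallel: time for tests that run on all sites simultaneously.
    t_shared:   time one site needs on the shared instrument (e.g., an AWG).
    """
    rounds = math.ceil(sites / shared_instruments)  # ping-pong passes
    return t_parallel + rounds * t_shared


# Hypothetical 8-site test: 8 s of parallel tests, 0.5 s of AWG time per site.
for awgs in (1, 2, 8):
    print(awgs, "AWG(s):", multisite_time(8.0, 0.5, 8, awgs), "s")
```

With one AWG the touchdown takes 12.0 s, with two it takes 10.0 s, and with one AWG per site it takes 8.5 s, the same as a single-site test. The example shows the trade that Force describes: fewer instruments lower the ATE cost but add serialized time.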

During production, an appropriate use of test analytics can assist both factory managers and engineers concerned with overall equipment effectiveness (OEE) and product yield. “There are many different ways analytics can be applied to parallel test for real-time or offline decision-making,” said Michael Schuldenfrei, NI fellow of product analytics at Emerson Test and Measurement. “The goal is to identify issues that could be causing yield or throughput issues as early as possible.”

Schuldenfrei points to the detection of site-to-site variations (bins, parametric measurements) and the identification of disabled test sites as two examples of flagging yield and operational issues.

Others agree that analyzing for site-to-site variation is extremely important. “In terms of parallel testing, there is a test site-to-site comparison, and we enable this type of analysis,” said Diether Rathei, CEO of DR Yield. “When one test site shows a statistically significant difference from the others, that indicates potential probe card issues. Another application is test trends by site. If you plot the parametric trends — not only by wafer test results, but also by site location — you’re able to detect yield-specific issues much faster.”
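A site-to-site comparison of a parametric measurement can be sketched with a simple robust outlier check. This is not DR Yield's method. It substitutes a basic modified z-score (median and median absolute deviation) across per-site medians for a formal significance test, and the function name, threshold, and data are assumptions.

```python
from statistics import median


def flag_outlier_sites(measurements_by_site, threshold=3.5):
    """Return site IDs whose median reading is a robust outlier.

    Computes a modified z-score, 0.6745 * |m - center| / MAD, over the
    per-site medians. Returns an empty list when MAD is zero, which happens
    if more than half of the site medians are identical.
    """
    site_medians = {s: median(v) for s, v in measurements_by_site.items()}
    center = median(site_medians.values())
    mad = median(abs(m - center) for m in site_medians.values())
    if mad == 0:
        return []
    return sorted(s for s, m in site_medians.items()
                  if 0.6745 * abs(m - center) / mad > threshold)


# Hypothetical readings (e.g., a leakage current in uA) from a 4-site card.
readings = {
    0: [1.00, 1.01, 0.99, 1.00],
    1: [1.02, 1.01, 1.00, 1.01],
    2: [0.99, 1.00, 0.98, 0.99],
    3: [1.10, 1.12, 1.11, 1.09],  # consistently high: possible probe issue
}
print(flag_outlier_sites(readings))  # prints [3]
```

In production, a check like this would typically run per test and per lot or wafer, and a flagged site would prompt inspection of the probe card or socket for that position.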

Understanding test site location as well as test board identification requires both data alignment and equipment traceability. “As a consumable, the board has to be tracked,” said Mike McIntyre, director of software product management at Onto Innovation. “And with multi-site DUT testing, I want more details. Because now I have to track the ID number of that probe card, how many touchdowns, what position in that probe card, and which pins are part of a touchdown.”
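The traceability McIntyre describes amounts to keeping a per-card record that is updated on every touchdown. The sketch below shows one minimal way to structure such a record. The class and field names are hypothetical and do not come from any specific product, and pin-level tracking is omitted for brevity.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ProbeCardLog:
    """Hypothetical usage record for one probe card."""
    card_id: str
    touchdowns: int = 0
    site_contacts: Counter = field(default_factory=Counter)

    def record_touchdown(self, active_sites):
        """Count one touchdown and the sites that made contact during it."""
        self.touchdowns += 1
        self.site_contacts.update(active_sites)


log = ProbeCardLog(card_id="PC-0001")  # made-up ID
log.record_touchdown([0, 1, 2, 3])
log.record_touchdown([0, 1, 3])        # site 2 disabled on this touchdown
print(log.touchdowns, dict(log.site_contacts))
```

Keeping contact counts per site position, not just per card, is what makes it possible to align test results with a specific site when a yield problem appears.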

Comprehending operational efficiencies and understanding causes is also a benefit of a test analytics solution. “Keeping an eye on the number of active sites during production can uncover inefficiencies, like when sites are turned off due to yield issues,” said Jerome Auza, vice president of engineering and sales (Asia) at YieldHUB. “These insights help teams address problems and boost productivity. And correlating improvements in UPH (units per hour) with the level of parallelism helps quantify the efficiency gains from parallel testing.”

Conclusion

Parallel test can be an effective means to reduce cost of test. Yet adopting parallel test has implementation costs that are not always apparent up front.

Fig. 3: The impact of test improvements on test cost reduction vs. manufacturing cost reduction. Source: 2019 HI Roadmap

“Is the market soft, without significant demand, and the test engineering team has time to invest providing additional cost savings?” asked Teradyne’s Getz. “Is the market aggressive, and being first to production will provide the device competitive advantage? PTE (parallel test efficiency) has a much larger impact on CoT, but does not impact overall manufacturing costs as much as increasing device yield. In either case, the company is likely doing an ROI against their test engineer’s time.”

In the end, the decision on parallel test needs to consider all cost reductions.

Reference

Related Reading

Managing Wafer Retest

Dealing with multiple wafer touchdowns requires data analytics and mechanical engineering finesse.

Coping With Parallel Test Site-to-Site Variation

Why this is a growing problem, and how it’s being addressed.