New AWS Graviton4 and Trainium2 chips launched at re:Invent – Tech Monitor

The cloud giant says its new silicon will boost performance and keep costs down. It will also soon offer Nvidia’s latest hardware.

By Matthew Gooding

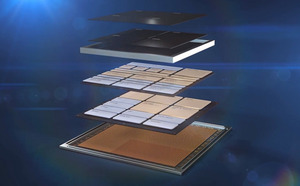

Amazon’s cloud platform AWS has launched its next generation of custom chips, with one processor, the Trainium2, for AI workloads, and another, Graviton4, for general-purpose compute. It is the latest step in the Big Tech battle for custom silicon supremacy, with Microsoft having launched two similar semiconductors earlier this month.

Unsurprisingly, the cloud hyperscaler says both chips offer significant performance and energy efficiency improvements over their predecessors, and can be used in combination with processors from third-party vendors such as AMD, Intel and AI chip market leader Nvidia.

At the AWS re:Invent 2023 conference, taking place in Las Vegas this week, Nvidia CEO Jensen Huang joined his AWS counterpart Adam Selipsky on stage to announce that the cloud platform would be the first to deploy his company’s next generation AI chip, the H200.

AWS says Graviton4 processors deliver up to 30% better compute performance, 50% more cores, and 75% more memory bandwidth than their predecessor, the Graviton3. The chip also fully encrypts all high-speed physical hardware interfaces for additional security, the cloud vendor said.

Meanwhile, Trainium2 is designed to deliver up to four times faster training than first-generation Trainium chips for large language models (LLMs) and other machine learning (ML) models. It will initially be available in Amazon EC2 Trn2 instances containing 16 Trainium chips in a single instance. AWS says these are intended to enable customers to scale up to 100,000 Trainium2 chips in next-generation EC2 UltraClusters, though it has not put a timescale on when this will be available. This level of scale would enable customers to “train a 300-billion parameter LLM in weeks versus months”, it claims.

Trainium2 will deliver “significantly lower” costs for the highest scale-out ML training performance, according to AWS, although it has declined to put a figure on this. The cost of training LLMs is becoming a major issue for AI labs and businesses looking to deploy their technology or develop custom models, as such tasks often require tens of thousands of chips at a time.

AWS has been investing in custom chips since its purchase of Annapurna Labs in 2015, and offers its customers access to previous versions of Graviton and Trainium semiconductors. Graviton4 is available in preview now, though there is no release date for Trainium2.

The chips will be available alongside Nvidia’s H200, the vendor’s next-generation AI GPU announced earlier. Nvidia hardware has powered the AI revolution, and AWS customers will be the first cloud users to get their hands on the newest model, though the date it will be available on servers has yet to be revealed.

Other Big Tech companies are also investing in their own chips, with Microsoft Azure having released a duo of processors, the Maia 100 AI accelerator and the Cobalt 100 CPU, earlier this month. Google also has its own chips, known as tensor processing units or TPUs, which it deploys internally to train and underpin its AI models and tools.