Elevating AI with Advanced HBM4 Technology

Artificial intelligence (AI) and machine learning (ML) are evolving at an extraordinary pace and driving advances across industries. As models grow larger and more sophisticated, they must process vast amounts of data in real time. This demand puts pressure on the underlying hardware, particularly memory, which must handle massive data sets quickly and efficiently. High Bandwidth Memory (HBM) has emerged as a key enabler of this new generation of AI, providing the capacity and bandwidth needed to push the boundaries of what AI can achieve.

The latest leap in HBM technology, HBM4, promises to elevate AI systems even further. With enhanced memory bandwidth, higher efficiency, and advanced design, HBM4 is set to become the backbone of future AI advancements, particularly in the realm of large-scale, data-intensive applications such as natural language processing, computer vision, and autonomous systems.

The Need for Advanced Memory in AI Systems

AI workloads, particularly deep neural networks, differ from traditional computing in that they process vast data sets in parallel, which creates unique memory challenges. These models demand high data throughput and low latency for optimal performance. HBM addresses these needs by offering superior bandwidth and energy efficiency. Conventional memory reaches the processor over comparatively narrow buses and long board-level traces. HBM instead stacks DRAM dies vertically and connects them to the processor through a very wide, short in-package interface, which minimizes data travel distances, speeds up transfers, and reduces power consumption. This makes it well suited to high-performance AI systems.

How HBM4 Improves on Previous Generations

HBM4 advances AI and ML performance by increasing both bandwidth and memory density. With higher data throughput, AI accelerators and GPUs can move data at rates on the order of terabytes per second, which reduces memory bottlenecks and boosts system performance. The increased memory density, achieved by adding more DRAM layers to each stack, addresses the immense capacity needs of large AI models and lets AI systems scale more smoothly.
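
The relationship between interface width, per-pin data rate, and stack height can be made concrete with a little arithmetic. The sketch below is illustrative only: the interface widths, pin rates, and die density are assumed example values that do not come from this article, and shipping parts may differ.

```python
def peak_bandwidth_gbytes_per_s(interface_bits: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth of one stack in GB/s: width (bits) x per-pin rate (Gb/s) / 8."""
    return interface_bits * pin_rate_gbps / 8


def stack_capacity_gbytes(dies_per_stack: int, die_density_gbit: int) -> float:
    """Capacity of one stack in GB: number of DRAM dies x die density (Gb) / 8."""
    return dies_per_stack * die_density_gbit / 8


# Assumed example configurations (hypothetical, not taken from this article).
configs = {
    "HBM3-like": {"interface_bits": 1024, "pin_rate_gbps": 6.4},
    "HBM4-like": {"interface_bits": 2048, "pin_rate_gbps": 8.0},
}

for name, cfg in configs.items():
    bandwidth = peak_bandwidth_gbytes_per_s(**cfg)
    print(f"{name}: {bandwidth:.1f} GB/s per stack")

# Assumed stack: 16 DRAM dies of 24 Gb each.
print(f"16-high stack of 24 Gb dies: {stack_capacity_gbytes(16, 24):.0f} GB")
```

Under these assumptions, doubling the interface width contributes more to the bandwidth gain than the increase in pin rate, while capacity scales linearly with the number of dies in the stack.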

Energy Efficiency and Scalability

As AI systems continue to scale, energy efficiency becomes a growing concern. Training AI models is extremely power-hungry, and as data centers expand their AI capabilities, energy-efficient hardware becomes critical. HBM4 is designed with energy efficiency in mind. Its stacked architecture shortens data travel distances, which in turn reduces the power needed to move data. Compared to previous generations, HBM4 achieves better performance per watt, which is crucial for the sustainability of large-scale AI deployments.
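
One way to reason about performance per watt is to multiply the sustained data rate by the energy spent per transferred bit. The sketch below assumes hypothetical energy-per-bit figures purely for illustration. They are not measured values for HBM4 or any other memory.

```python
def data_movement_power_watts(bandwidth_gbytes_per_s: float,
                              energy_pj_per_bit: float) -> float:
    """Power spent moving data: bits per second x joules per bit."""
    bits_per_second = bandwidth_gbytes_per_s * 1e9 * 8
    return bits_per_second * energy_pj_per_bit * 1e-12


def gbytes_per_s_per_watt(bandwidth_gbytes_per_s: float,
                          energy_pj_per_bit: float) -> float:
    """Bandwidth delivered per watt of data-movement power."""
    power = data_movement_power_watts(bandwidth_gbytes_per_s, energy_pj_per_bit)
    return bandwidth_gbytes_per_s / power


# Hypothetical comparison at the same 1000 GB/s sustained bandwidth.
for label, pj_per_bit in (("older interface (assumed)", 4.0),
                          ("newer interface (assumed)", 3.0)):
    watts = data_movement_power_watts(1000.0, pj_per_bit)
    efficiency = gbytes_per_s_per_watt(1000.0, pj_per_bit)
    print(f"{label}: {watts:.1f} W, {efficiency:.1f} GB/s per W")
```

Because power grows linearly with bandwidth at a fixed energy per bit, any reduction in picojoules per bit translates directly into either lower power or more bandwidth within the same power budget.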

Scalability is another area where HBM4 shines. The ability to stack multiple layers of memory while maintaining high performance and low energy consumption means that AI systems can grow without becoming prohibitively expensive or inefficient. As AI applications expand from specialized data centers to edge computing environments, scalable memory like HBM4 becomes essential for deploying AI in a wide range of use cases, from autonomous vehicles to real-time language translation systems.

Optimizing AI Hardware with HBM4

Integrating HBM4 into AI hardware is essential for unlocking the full potential of modern AI accelerators, such as GPUs and custom AI chips, which need low-latency, high-bandwidth memory to support massively parallel processing. HBM4 improves inference speed, which is critical for real-time applications like autonomous driving, and it accelerates model training by providing higher data throughput and larger memory capacity. Together, these gains shorten training times and improve performance across AI workloads.

The Role of HBM4 in Large Language Models

HBM4 is well suited to developing large language models (LLMs) such as GPT-4, which drive generative AI applications like natural language understanding and content generation. LLMs require vast memory resources to store billions or even trillions of parameters and to deliver them to the compute units efficiently. HBM4’s high capacity and bandwidth enable the rapid data access and transfer needed for both inference and training, supporting increasingly complex models and enhancing AI’s ability to generate human-like text and solve intricate tasks.
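
To see why both capacity and bandwidth matter for LLMs, a rough back-of-the-envelope estimate helps. The sketch below assumes a hypothetical 70-billion-parameter model stored in 16-bit precision, a hypothetical 48 GB stack, and a hypothetical 2048 GB/s of bandwidth per stack. It ignores the KV cache, activations, and batching, so it is a simplified bound rather than a performance prediction.

```python
import math


def weights_gbytes(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the model weights, in GB."""
    return num_params * bytes_per_param / 1e9


def stacks_needed(weight_gb: float, stack_capacity_gb: float) -> int:
    """Minimum number of memory stacks whose combined capacity fits the weights."""
    return math.ceil(weight_gb / stack_capacity_gb)


def decode_tokens_per_s_upper_bound(weight_gb: float,
                                    total_bandwidth_gbytes_per_s: float) -> float:
    """Upper bound on single-stream decoding speed when every weight
    must be read from memory once per generated token."""
    return total_bandwidth_gbytes_per_s / weight_gb


# All values below are assumptions chosen for illustration.
weight_gb = weights_gbytes(num_params=70e9, bytes_per_param=2)
stacks = stacks_needed(weight_gb, stack_capacity_gb=48.0)
total_bandwidth = stacks * 2048.0  # GB/s, assumed per-stack bandwidth

print(f"weights: {weight_gb:.0f} GB, stacks needed: {stacks}")
print(f"memory-bound decode limit: "
      f"{decode_tokens_per_s_upper_bound(weight_gb, total_bandwidth):.1f} tokens/s")
```

In this simplified model, capacity determines how many stacks are needed to hold the weights at all, while aggregate bandwidth caps how quickly those weights can be streamed for each generated token.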

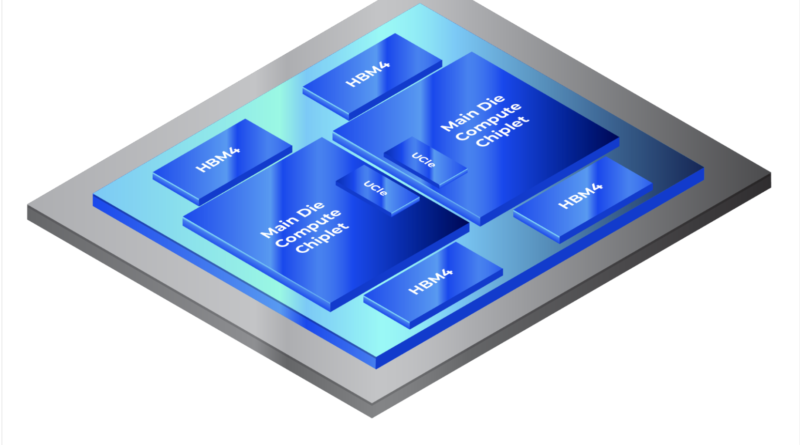

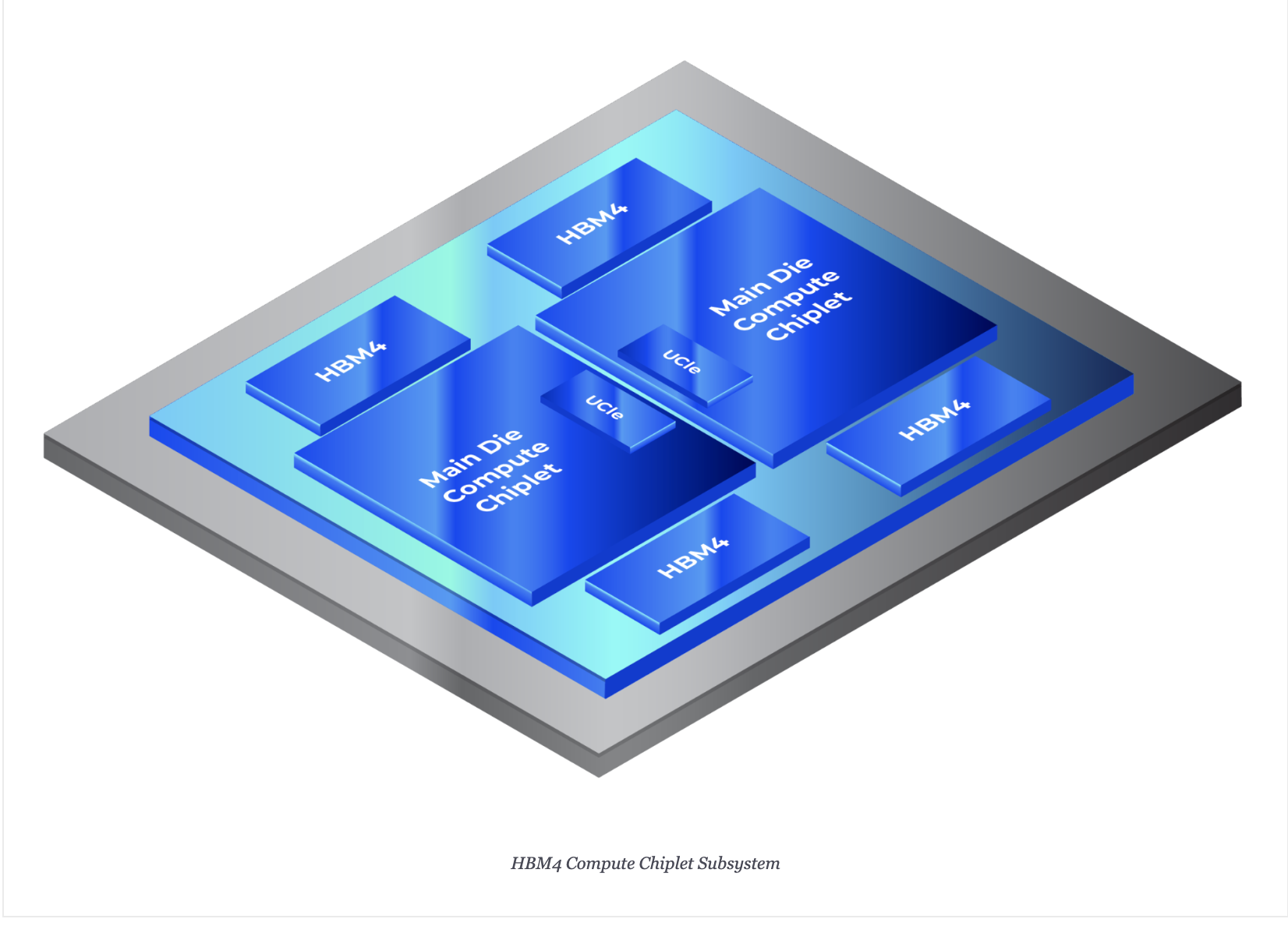

Alphawave Semi and HBM4

Alphawave Semi is pioneering the adoption of HBM4 technology by applying its expertise in packaging, signal integrity, and silicon design to optimize performance for next-generation AI systems. The company is evaluating advanced packaging solutions, such as CoWoS interposers and EMIB, to manage dense routing and high data rates. To co-optimize the memory IP, the channel, and the DRAM, Alphawave Semi uses advanced 3D modeling and S-parameter analysis to verify signal integrity, and it fine-tunes equalization settings such as Decision Feedback Equalization (DFE) to improve data transfer reliability.
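
For readers unfamiliar with DFE, the toy model below shows the basic idea: the receiver subtracts the interference it expects from the previously decided symbol before slicing the current one. This is a textbook one-tap sketch under assumed values (a single post-cursor tap of 0.6, no noise, two-level signaling). It is not Alphawave Semi's implementation, and real memory interfaces are considerably more complex.

```python
def channel(symbols, post_cursor):
    """Apply one tap of post-cursor ISI: y[n] = x[n] + post_cursor * x[n-1]."""
    received = []
    previous = 0.0
    for symbol in symbols:
        received.append(symbol + post_cursor * previous)
        previous = symbol
    return received


def dfe_one_tap(received, tap):
    """One-tap DFE: subtract tap * previous decision, then slice to +/-1."""
    decisions, slicer_inputs = [], []
    previous_decision = 0.0
    for sample in received:
        equalized = sample - tap * previous_decision
        decision = 1.0 if equalized >= 0 else -1.0
        slicer_inputs.append(equalized)
        decisions.append(decision)
        previous_decision = decision
    return decisions, slicer_inputs


# Fixed test pattern containing both repeated symbols and transitions.
tx = [1.0, 1.0, -1.0, 1.0, -1.0, -1.0, -1.0, 1.0] * 8
rx = channel(tx, post_cursor=0.6)  # assumed ISI tap

margin_without_dfe = min(abs(sample) for sample in rx)
decisions, equalized = dfe_one_tap(rx, tap=0.6)
margin_with_dfe = min(abs(sample) for sample in equalized)

print(f"worst-case slicer margin without DFE: {margin_without_dfe:.2f}")
print(f"worst-case slicer margin with DFE:    {margin_with_dfe:.2f}")
print(f"all symbols recovered: {decisions == tx}")
```

In this idealized setting the worst-case margin at the slicer grows from about 0.4 to about 1.0 once the feedback tap matches the channel, which is the kind of eye-opening effect that tuning equalization settings aims for.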

Alphawave Semi also focuses on optimizing complex interposer designs, analyzing key parameters such as insertion loss and crosstalk, and applying jitter decomposition techniques to support higher data rates. The company is also developing patent-pending solutions to minimize crosstalk, with the aim of future-proofing its interposers for upcoming memory generations.

Summary

As AI advances, memory technologies like HBM4 will be crucial in unlocking new capabilities, from real-time decision-making in autonomous systems to more complex models in healthcare and finance. The future of AI relies on both software and hardware improvements, with HBM4 pushing the limits of AI performance through higher bandwidth, memory density, and energy efficiency. As AI adoption grows, HBM4 will play a foundational role in enabling faster, more efficient AI systems capable of solving the most data-intensive challenges.
