Meta Sees Little Risk in RISC-V Custom Accelerators – The Next Platform

Many have waited years to hear someone like Prahlad Venkatapuram, Senior Director of Engineering at Meta, say what came out this week at the RISC-V Summit:

“We’ve identified that RISC-V is the way to go for us moving forward for all the products we have in the roadmap. That includes not just next-generation video transcoders but also next-generation inference accelerators and training chips.”

He explained that in the last four years of robust plotting to bring the RISC architecture into the fold, Meta has not only rolled out production hardware but has also set the stage for future custom RISC-V silicon via a standardized RISC-based control system, made scalable so that any IP Meta develops for any domain will be adaptable and connect to the NOC with ease.

In other words, Meta has a template to quickly move any such new silicon into production, which is a big deal for those looking for RISC-V success stories at massive scale. All of this comes at a time when high-end GPUs are in short supply, with pricing to reflect that.

Venkatapuram says Meta’s reasoning in looking to RISC was driven by a need for acceleration of all “the business critical things we couldn’t do on CPUs” and also “power efficiency, performance, and absolute low latency on the server.” He adds that flexibility to support different workloads and resiliency in the architecture were also paramount.

“Anytime we design or deploy, we expect it to be there for 3-4 years so there has to be resiliency and also programmability—we want to put software in charge of how we use our hardware resources.”

He adds that 64-bit addressing is critical, that both vector and SIMD capabilities in the core are essential, and he stressed the need for deep customization. “It’s apparent RISC-V does all of these things; it’s open, has strong support, there are multiple providers of IP and a growing ecosystem we’ve seen emerge over the last 4-5 years.” But ultimately, customization is key.

The video transcoding hardware Meta built on RISC-V alone has provided context to the customization piece. According to Venkatapuram, Meta’s Scalable Video Processor (MSVP), which is where Meta’s production RISC journey began, is in production and handling 100% of all video uploads across its Facebook, Messenger and Instagram services. “We were doing this on CPU earlier but we’ve now replaced 85% of those so we’re using only 15% of those CPUs.”

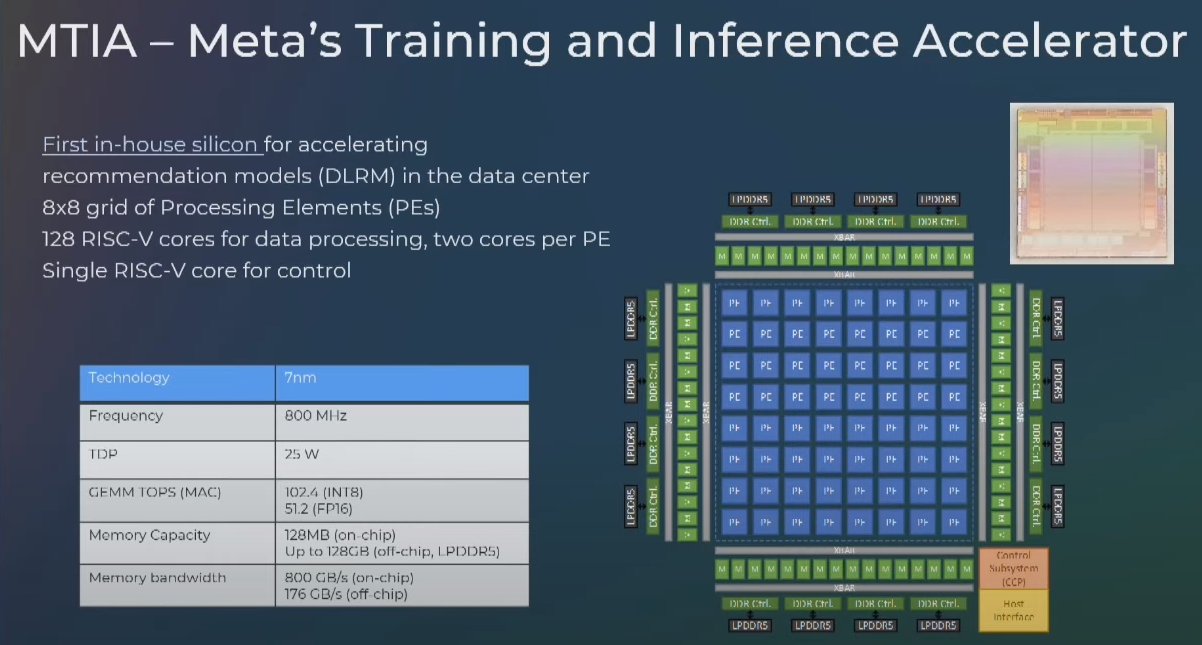

The real story, the one that should get the processor world to sit up and take notice, is that Meta is skipping the ubiquitous GPU and building AI inference and a training chip on RISC-V. The Facebook giant revealed some of this work in May, as we covered here, a project that has been ongoing since 2020.

For now, the RISC-V AI processors are dedicated to accelerating recommendation models, both in inference and training. The architecture is not unfamiliar: an 8×8 grid of processing elements, each element hosting 2 RISC-V cores (one scalar, one vector) and a single core for control. The scalar and vector cores sync with a command processor, which works in conjunction with baked-in fixed functions developed at Meta.
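To make the scale of that layout concrete, here is a minimal Python sketch that models only what the description above states: an 8×8 grid of processing elements, each with one scalar and one vector RISC-V core. The class and function names are hypothetical, chosen for illustration, and the sketch leaves out the control core and command processor because the description does not say how they are arranged.

```python
from dataclasses import dataclass

# Grid dimensions as described in the article (8x8 processing elements).
GRID_ROWS = 8
GRID_COLS = 8


@dataclass(frozen=True)
class ProcessingElement:
    """Hypothetical model of one processing element (names are illustrative)."""
    row: int
    col: int
    # Per the article: one scalar and one vector RISC-V core per element.
    cores: tuple = ("scalar", "vector")


def build_grid(rows=GRID_ROWS, cols=GRID_COLS):
    """Return a flat list of processing elements covering the grid."""
    return [ProcessingElement(r, c) for r in range(rows) for c in range(cols)]


grid = build_grid()
pe_count = len(grid)
core_count = sum(len(pe.cores) for pe in grid)

print(f"processing elements: {pe_count}")
print(f"scalar + vector RISC-V cores: {core_count}")
```

Under these assumptions the grid works out to 64 processing elements and 128 scalar and vector cores in total, before counting any control logic.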

Beyond that, not much is known but we’ll press for more insight, including what volume of production Meta has of the RISC-V architecture.

While all of this is promising, there are some challenges that do give pause, although they do not seem to diminish Venkatapuram’s optimism.

Despite massive customization, Meta still needs more from the existing IP options. “There’s very little in terms of offerings that allow seamless integration of custom instruction and resources into RTP, simulators, software tools and compilers,” he explains. Another challenge is a lack of interoperability among the various vendors, though he did not provide deep detail. Ultimately, the sense was that the challenges are not insurmountable.

One of the most important hurdles, especially as Meta seeks to build more production AI workloads on RISC-V, is support for matrix extensions. Matrix math is a key component of AI, and while RISC-V has vector extensions there are no standard extensions for matrices, he explains. He cites work happening on this front (many vendors, including Stream Computing and T-Head Semi) but ultimately, whatever they come up with should be standardized.

Venkatapuram emphasized above all the importance of broader ecosystem support, from support for all the major libraries and tools to the hardware ecosystem:

“RISC-V, due to its open standard nature, has the potential to attract a bigger set of third party providers of tools, software, peripherals, and more than a proprietary ISA but this potential has yet to be realized fully.”

Using RISC-V as a basis for developing custom accelerators makes sense to me, especially as such development might be quite exploratory, and proof-of-concept may occur through prototyping on an FPGA. NIOS, NIOS II, PicoBlaze and MicroBlaze are proprietary, and either 8-bit or just 32-bit. LatticeMico8 and 32 are more open, but also top out at 32 bits. ARM core instances (e.g. 32-bit Cortex-A9) are found in many FPGAs but are not freely customizable that I know of (hard). Accordingly, RISC-V offers the better prospect, especially in the most relevant field of 64-bit device development.

In my opinion, ARM’s RISC risks losing out to RISC-V in 2 areas here. Firstly, they should already have a 64-bit microcontroller profile (say Cortex-E) in the field, to embed deeply in hardware and edge devices where RISC-V is finding a welcoming niche for itself (including HDDs for example); 32-bit microcontrollers are a bit long in the tooth these days. Secondly, they should have an open source customizable FPGA profile for these 64-bit units (say Cortex-F), with a simplified version of that arch, to allow folks to freely tinker with it, and possibly develop and prototype ARM-based accelerators at low cost (in their labs, studio garages, or bedrooms). This may take the form of their Cortex-M1 and M3 free use soft IP programs ( https://www.arm.com/resources/free-arm-cortex-m-on-fpga ) but at the 64-bit microcontroller level; those could readily beat the pants off of just about any RISC-V device out there in my view! Better yet if they’d pack this straight into Yosys, resulting in a most exceptional “voilà”!

Quote: “Meta is skipping the ubiquitous GPU and building AI inference and a training chip on RISC-V”

Way to go! But I’m not sure if MTIA ( https://www.nextplatform.com/2023/05/18/meta-platforms-crafts-homegrown-ai-inference-chip-ai-training-next/ ) can beat the dataflow designs of Tenstorrent, Groq, Cerebras, and PasoDoble, that implement forms of configurable “near-memory” compute. MTIA is more reminiscent of the server-oriented 64-core Sophon SG2042 that SC23 found to be somewhat lacking in performance.

I’m no expert in this but the difference seems to be where design sliders are set in relation to (1) the amount of dedicated memory per computational core (eg. near-memory compute, minimal latency, proper bandwidth, not shared), and (2) the degree to which the underlying NoC or interconnect can simultaneously (and independently) shuffle computed outputs from any core to any other core to form a deep (and efficient) software-configurable “macroscopic” parallel computational pipeline (dataflow).

SambaNova’s (PasoDoble?) Reconfigurable Dataflow Units (RDUs), Groq’s Software-defined Scale-out TSP (or LPU?), Cerebras’ waferscale neocortex with 2D mesh interconnect (and new “weight streaming” mode), and Tenstorrent’s “dataflow machine [that] can send data from one processing element to another” ( https://www.nextplatform.com/2023/08/02/unleashing-an-open-source-torrent-on-cpus-and-ai-engines/ ) have set secret sauce sliders on the side that looks best for AI acceleration. If I’m not mistaken, MTIA, Veyron, and Sophon set their sliders towards a more static inter-core network design and less dedicated memory per core, making them best for (maybe) HPC applications [*](like A64FX, Rhea1, …)?

[*] Note: HPC applications as considered separately from dynamic-language-based DC applications that commonly require register-based or stack-based abstract/virtual machines and automated garbage collection among others (Java, Javascript, Python, Scheme, Julia, Lua, Erlang, Smalltalk, …).

The Next Platform is published by Stackhouse Publishing Inc in partnership with the UK’s top technology publication, The Register.

It offers in-depth coverage of high-end computing at large enterprises, supercomputing centers, hyperscale data centers, and public clouds.

All Content Copyright The Next Platform