Meta Places Orders For NVIDIA’s Cutting-Edge Blackwell B200 AI GPUs, Expecting Shipments Later This Year – Wccftech

NVIDIA’s Blackwell B200 AI GPUs are already in the market spotlight, with Meta expecting to receive initial shipments later this year.

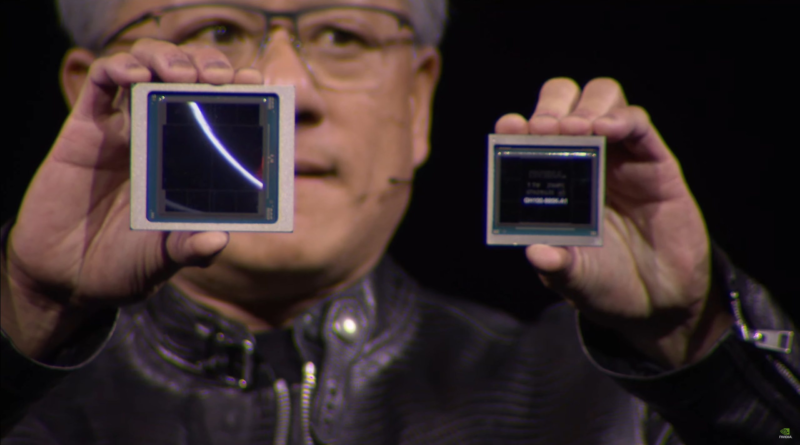

NVIDIA’s GTC conference has proved a success for the firm, especially in the industry exposure it gained for its latest Blackwell AI platform. Leading the pack is the Blackwell B200 AI accelerator, which delivers large performance gains over the previous Hopper generation and has attracted massive customer interest.

Meta expects to receive initial shipments of Nvidia’s new flagship AI chip, the B200 Blackwell chip, later this year, Reuters reports, noting Nvidia CFO Colette Kress said of the B200 GPU, “we think we’re going to come to market later this year,” but volume shipments will not…

— Dan Nystedt (@dnystedt) March 20, 2024

Meta has reportedly placed initial orders for the B200, becoming the first big tech name to order NVIDIA’s latest AI product. However, NVIDIA’s CFO Colette Kress has indicated that while Blackwell products will come to market later this year, volume shipments are not expected until 2025, which hints at when broad adoption of the lineup is likely to begin.

It’s no secret that Meta has been an “iron partner” for NVIDIA in its push into the AI market and one of the firm’s biggest customers, reportedly accumulating 600,000 H100 units by the end of this year, an undeniably huge number. Meta’s CEO, Mark Zuckerberg, has already said he intends to train the company’s “Llama” large language models on the Blackwell platform, potentially giving Meta an edge in computing power over its rivals.

Nvidia CEO Jensen Huang said he’s working with TSMC to avoid bottlenecks in chip packaging like that seen with the H100, Reuters reports. “The volume ramp in demand happened fairly sharply last time, but this time, we’ve had plenty of visibility” of demand, Huang said. $NVDA $TSM…

— Dan Nystedt (@dnystedt) March 20, 2024

NVIDIA’s CEO, Jensen Huang, also stated that the data center market represents a $250 billion opportunity each year, and that NVIDIA’s share of that value will likely be higher than in the past because the company sells an entire accelerated computing platform rather than individual chips.

The opportunity for the world today, the data center size is $1 trillion. Right. And it’s a $1 trillion worth of installed, $250 billion a year. We sell an entire data center in parts and so our percentage of that $250 billion per year is likely a lot, lot, lot higher than somebody who sells a chip. It could be a GPU chip or CPU chip or networking chip. That opportunity hasn’t changed from before. But what NVIDIA makes is an accelerated computing platform data center scale. Okay. And so our percentage of $250 billion will likely be higher than the past.

Jensen Huang – NVIDIA CEO (GTC Q&A)
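To put Huang’s figures in proportion, the short sketch below divides the $250 billion annual spend by the $1 trillion installed base. Reading the result as a “full-replacement period” is our own simplifying assumption (it presumes the whole installed base is replaced at a constant yearly rate), not something Huang stated; the variable names are illustrative only.

```python
# Figures quoted by Jensen Huang at the GTC Q&A
installed_base_usd = 1_000_000_000_000  # $1 trillion installed data center base
annual_spend_usd = 250_000_000_000      # $250 billion spent per year

# Share of the installed base that the yearly spend represents
annual_fraction = annual_spend_usd / installed_base_usd

# Assumption (not from Huang): constant-rate replacement of the entire base
implied_years = installed_base_usd / annual_spend_usd

print(f"Annual spend is {annual_fraction:.0%} of the installed base")
print(f"Implied full-replacement period: {implied_years:.0f} years")
```

In other words, the yearly opportunity Huang describes is a quarter of the installed base, which is the pool NVIDIA expects to capture a larger share of.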

For a quick rundown of what to expect, the Blackwell B200 incorporates 160 streaming multiprocessors (SMs) for a total of 20,480 cores. The GPU features the latest NVLink interconnect technology, supports the same 8-GPU configuration, and pairs with a 400 GbE networking switch.
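As a quick sanity check on the reported figures, dividing the core count by the SM count gives the implied number of cores per SM. This is plain arithmetic on the numbers above, which come from the rundown rather than an official NVIDIA spec sheet; the variable names are illustrative.

```python
# Reported B200 figures from the rundown (not official NVIDIA specs)
reported_sms = 160
reported_cores = 20_480

# Implied cores per streaming multiprocessor (SM)
cores_per_sm = reported_cores // reported_sms

# Confirm the two reported figures divide evenly
assert cores_per_sm * reported_sms == reported_cores

print(f"{cores_per_sm} cores per SM")  # prints "128 cores per SM"
```

The result, 128 cores per SM, matches the 128 FP32 CUDA cores per SM found in the Hopper H100, so the reported numbers are at least internally consistent with NVIDIA’s recent SM layout.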

With Meta placing orders for NVIDIA’s Blackwell B200, the transition to next-gen AI hardware is now underway, and yet again, Team Green is expected to retain its dominance.


© 2024 WCCF TECH INC. 700 – 401 West Georgia Street, Vancouver, BC, Canada