Meta Discloses Its Second Custom Processor, And This Should Interest Investors – Forbes

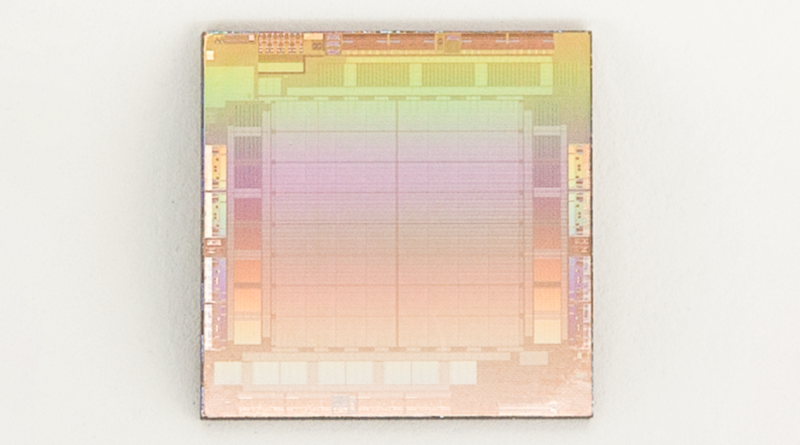

Meta “MTIA” V1 (Meta Training and Inference Accelerator) die shot

When most people think about Meta, they think about its apps, including Facebook, Instagram, WhatsApp, or the upcoming metaverse. Many don’t know that the company has designed and built some of the world’s largest and most sophisticated datacenters to operate those services.

Unlike cloud service providers such as AWS, GCP, or Azure, Meta doesn’t have to disclose details about its silicon choices, infrastructure, or datacenter design to impress buyers; its OCP designs are the exception. Meta users want better and more consistent experiences and are unconcerned with how that happens.

Unprecedented disclosures

That lack of detail changed today with what I consider an unprecedented set of disclosures: the company’s latest AI inference accelerator, an in-production video transcoder, its next-generation datacenter architecture designed for AI, and details on the second phase of its 16,000 GPU AI research supercomputer, the first phase of which has been powering the LLaMA generative AI model.

This disclosure should be of interest to Meta stakeholders, including investors, as this impacts time to market, differentiation, and cost.

Earlier this week, I caught up with Alexis Björlin, VP of Infrastructure at Meta, about the company’s full-stack approach to its silicon and to get more details on its new AI inference and video accelerator.

I am impressed with Meta’s progress so far, but this is only the beginning.

Meta’s full-stack approach

Before I jump into “MTIA” or “Meta Training and Inference Accelerator,” I wanted to review Meta’s approach to silicon. I am pleased to say that the silicon strategy has not changed since I chatted with the company a year and a half ago. You can read that write-up here. Meta will continue to buy tons of merchant silicon from AMD, Broadcom, Intel, Marvell, and NVIDIA. However, it will design its own for unique workloads where merchant silicon isn’t optimal to deliver the best performance per watt and TCO.

This approach makes perfect strategic sense to me as Meta has a “full-stack” infrastructure approach, owning nearly every level of the stack, from infrastructure to the app and everything in between. When industry standards optimize for its full-stack, it uses those, and when not, it helps create industry standards like OCP and PyTorch.

Meta’s apps and services are unique and operate at an incredible scale, which, I believe, magnifies the need for and benefits of custom silicon. Facebook and Instagram users watch tremendous amounts of video and are constantly being served up recommendations of people to connect with, posts to interact with, and, of course, ads to click on. You can imagine how the AI-infused metaverse and generative AI will push the need for lower-power, more highly optimized solutions.

MSVP: Meta Scalable Video Processor

MTIA isn’t Meta’s first custom silicon. The MSVP, or “Meta Scalable Video Processor,” is in production. A few details on the video transcoder emerged last year, but the company has decided to disclose even more today.

According to Meta, Facebook users spend 50% of their time on video, watching 4B videos per day. Each video is compressed after upload, stored, and then decompressed into a suitable format when a user wants to view it. Those videos are transcoded (compressed/decompressed) using standard formats like H.264 and VP9. The trick is to make the file small quickly, store it quickly, and stream it at the highest quality possible for the appropriate device (e.g., phone, tablet, PC).

Meta’s MSVP V1 (Meta Scalable Video Processor) packaged chip.

This type of workload is a perfect fit for an ASIC (Application Specific Integrated Circuit) because it needs the highest efficiency on a fixed standard. ASICs are the most efficient option but are not as programmable as a CPU or GPU. When the video standard changes from H.264 and VP9 to AV1, which is likely to happen in the future, Meta will need to create a new ASIC, a new version of MSVP.

Meta said that in the future, it will be optimizing for “short-form videos, enabling efficient delivery of generative AI, AR/VR, and other metaverse content.” You can find an MSVP-focused write-up here.

Onto AI inferencing.

MTIA V1: Meta Training and Inference Accelerator

I think the most significant announcement at Meta’s event is its foray into custom AI inferencing. AI has changed everything for consumers and will change even more in the future. Meta is no stranger to AI in its workflow. It currently uses AI in content selection, ad suggestions, filtering of restricted content, and even tools for its internal programmers. You can imagine how much AI will be needed for its metaverse and generative AI-infused experiences.

Like the MSVP, the MTIA is also an ASIC, but it is focused on next-generation recommendation models and integrated into PyTorch to create an optimized ranking system. Think about every time a Facebook or Instagram user is recommended content, new “friends,” or ads. I think it’s likely one of the most used AI workloads on the platform.

MTIA V1 was developed back in 2020. Up to 12 MTIA V1 M.2 cards can be seated in a server to accelerate “small batch low” and “medium” complexity inference recommender workloads developed using PyTorch. Meta’s evaluation showed that MTIA V1 provided optimal recommender performance per watt, measured in TFLOPS per watt. You can find more details on Meta’s recommender tests here. Meta says that “GPUs were not always optimal for running Meta’s specific recommendation workloads at the levels of efficiency required at our scale.”

Training these models is clearly on the roadmap, hence the “T” in “MTIA.” Ultimately, Meta will continue to use merchant silicon GPUs for those workloads where the GPU provides better performance per watt. Don’t forget that hundreds of AI workloads still work better on GPUs today and that many frameworks other than PyTorch are in play.

Wrapping up

Based on Meta’s disclosures, I believe the company has demonstrated it is a capable player in home-grown silicon. I have long considered Meta an innovator in infrastructure based on its contributions to OCP, but silicon is one giant step further. Many service and device companies, from the datacenter to smartphones, have dabbled in home-grown silicon and exited, but I don’t think this will be the case here. Meta’s unique requirements and scale make the payoff bigger than it is for companies that run more homogeneous apps or operate at a smaller scale.

Bigger picture, I believe that if Meta can successfully continue adding home-grown silicon based on its unique requirements and scale, this should be important to Meta stakeholders, including investors. Home-grown silicon, done right, as we have seen with Apple and AWS, leads to advantages in time to market, differentiation, and cost. That alone should get investor attention. The most aggressive engineers want to work for technological leaders that work on cool stuff. Meta’s home-grown silicon is cool, adding to its other cool developer, infrastructure, and AI projects like Grand Teton, PyTorch, and the company’s latest Research SuperCluster.

I am looking forward to assessing Meta’s future home-grown technologies.

Moor Insights & Strategy provides or has provided paid services to technology companies like all research and tech industry analyst firms. These services include research, analysis, advising, consulting, benchmarking, acquisition matchmaking, and video and speaking sponsorships. The company has had or currently has paid business relationships with 8×8, Accenture, A10 Networks, Advanced Micro Devices, Amazon, Amazon Web Services, Ambient Scientific, Ampere Computing, Anuta Networks, Applied Brain Research, Applied Micro, Apstra, Arm, Aruba Networks (now HPE), Atom Computing, AT&T, Aura, Automation Anywhere, AWS, A-10 Strategies, Bitfusion, Blaize, Box, Broadcom, C3.AI, Calix, Cadence Systems, Campfire, Cisco Systems, Clear Software, Cloudera, Clumio, Cohesity, Cognitive Systems, CompuCom, Cradlepoint, CyberArk, Dell, Dell EMC, Dell Technologies, Diablo Technologies, Dialogue Group, Digital Optics, Dreamium Labs, D-Wave, Echelon, Ericsson, Extreme Networks, Five9, Flex, Foundries.io, Foxconn, Frame (now VMware), Fujitsu, Gen Z Consortium, Glue Networks, GlobalFoundries, Revolve (now Google), Google Cloud, Graphcore, Groq, Hiregenics, Hotwire Global, HP Inc., Hewlett Packard Enterprise, Honeywell, Huawei Technologies, HYCU, IBM, Infinidat, Infoblox, Infosys, Inseego, IonQ, IonVR, Inseego, Infosys, Infiot, Intel, Interdigital, Jabil Circuit, Juniper Networks, Keysight, Konica Minolta, Lattice Semiconductor, Lenovo, Linux Foundation, Lightbits Labs, LogicMonitor, LoRa Alliance, Luminar, MapBox, Marvell Technology, Mavenir, Marseille Inc, Mayfair Equity, Meraki (Cisco), Merck KGaA, Mesophere, Micron Technology, Microsoft, MiTEL, Mojo Networks, MongoDB, Multefire Alliance, National Instruments, Neat, NetApp, Nightwatch, NOKIA, Nortek, Novumind, NVIDIA, Nutanix, Nuvia (now Qualcomm), NXP, onsemi, ONUG, OpenStack Foundation, Oracle, Palo Alto Networks, Panasas, Peraso, Pexip, Pixelworks, Plume Design, PlusAI, Poly (formerly Plantronics), Portworx, Pure Storage, Qualcomm, 
Quantinuum, Rackspace, Rambus, Rayvolt E-Bikes, Red Hat, Renesas, Residio, Samsung Electronics, Samsung Semi, SAP, SAS, Scale Computing, Schneider Electric, SiFive, Silver Peak (now Aruba-HPE), SkyWorks, SONY Optical Storage, Splunk, Springpath (now Cisco), Spirent, Splunk, Sprint (now T-Mobile), Stratus Technologies, Symantec, Synaptics, Syniverse, Synopsys, Tanium, Telesign,TE Connectivity, TensTorrent, Tobii Technology, Teradata,T-Mobile, Treasure Data, Twitter, Unity Technologies, UiPath, Verizon Communications, VAST Data, Ventana Micro Systems, Vidyo, VMware, Wave Computing, Wellsmith, Xilinx, Zayo, Zebra, Zededa, Zendesk, Zoho, Zoom, and Zscaler. Moor Insights & Strategy founder, CEO, and Chief Analyst Patrick Moorhead is an investor in dMY Technology Group Inc. VI, Fivestone Partners, Frore Systems, Groq, MemryX, Movandi, and Ventana Micro.
