Testing for thermal issues becomes more difficult

Increasingly complex and heterogeneous architectures, coupled with the adoption of high-performance materials, are making it much more difficult to identify and test for thermal issues in advanced packages.

For a single SoC, compressing more functionality into a smaller area concentrates the processing and makes thermal effects more predictable. But in an advanced package, that processing can happen anywhere, and there may be a combination of general-purpose and highly specialized processors with complex partitioning and prioritization schemes. The resulting thermal profiles are complex, often unique, and often very different from those in conventional SoCs. Heat generated by processors, as well as by moving data through thin wires, often must traverse multiple metal and substrate layers, as well as a variety of different materials and interconnect schemes.

While this may be an engineering marvel, it creates a far more intricate environment for testing these devices. Thermal profiles may vary greatly, often resulting in gradients that are specific to different workloads. On top of that, any physical effects can be additive, such as thermal buildup in one part of a package or chiplet, and one chiplet’s thermal profile can influence others.

“How do you put your hottest die in a place where the energy can get out and not affect your other die in the stack?” asked Marc Hutner, Tessent product management director at Siemens Digital Industries Software. “If the heat goes down or through to the next die, you’re going to see performance changing, depending on what’s happening.”

In the past, thermal effects were largely an afterthought for test engineers. Devices operating at higher frequencies or employing materials like silicon-germanium (SiGe), gallium nitride (GaN), or silicon carbide (SiC) have distinct thermal behaviors, but those behaviors can be characterized at the chip level. That’s not the case for heterogeneous stacks of chiplets, where each chiplet may have different power and thermal characteristics.

“Thermal management is no longer an afterthought in semiconductor testing,” said Adrian Kwan, senior business manager at Advantest. “It’s a design imperative. Without precise thermal control, the accuracy of test measurements can degrade rapidly and device reliability can suffer, especially in high-power and high-frequency applications where heat dissipation is critical.”

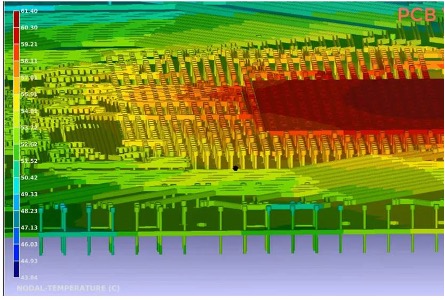

Fig. 1: Simulating heat dissipation as thermal behavior couples. Source: Ansys

As advanced devices combine RF, analog, memory, and photonics within the same package, hotspots become harder to predict. Even slight temperature variations can cause performance drift. This complexity is magnified in 3D architectures, where vertical heat flow through TSVs and hybrid-bonded layers differs significantly from planar thermal paths.

Thermal modeling gaps

Traditional thermal models that are validated for planar die lack the fidelity needed to predict behavior when those die are decomposed and stacked. More detailed models must account for the complex interactions of multiple materials, vertical heat flows, and the nonlinear behavior of advanced cooling techniques. This is forcing the industry to rethink thermal simulation for both design and test stages.
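To see why stacking changes the heat path, consider a first-order sketch that treats each layer as a one-dimensional slab with conduction resistance R = t / (k × A) and adds the layers in series. This is far simpler than the detailed models described above, and it ignores lateral spreading, TSVs, and nonlinear cooling. The layer names, thicknesses, and conductivities are illustrative assumptions, not figures from any vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    thickness_m: float        # layer thickness in meters
    conductivity_w_mk: float  # thermal conductivity in W/(m*K)


def layer_resistance(layer: Layer, area_m2: float) -> float:
    """1D conduction resistance of a slab, R = t / (k * A), in K/W."""
    return layer.thickness_m / (layer.conductivity_w_mk * area_m2)


def stack_temperature_rise(layers, area_m2: float, power_w: float) -> float:
    """Temperature rise (K) across series layers for a given heat flow."""
    total_r = sum(layer_resistance(layer, area_m2) for layer in layers)
    return power_w * total_r


# Illustrative values only (assumptions, not taken from the article).
AREA_M2 = 1e-4  # 10 mm x 10 mm footprint
planar = [Layer("silicon", 500e-6, 150.0), Layer("TIM", 50e-6, 5.0)]
# Hypothetical stack: the same path plus a bond layer and a thinned die.
stacked = planar + [
    Layer("bond layer", 10e-6, 1.0),
    Layer("thinned silicon", 50e-6, 150.0),
]

print("planar rise (K): ", round(stack_temperature_rise(planar, AREA_M2, 100.0), 2))
print("stacked rise (K):", round(stack_temperature_rise(stacked, AREA_M2, 100.0), 2))
```

Even in this toy model, a thin, poorly conducting interface contributes more resistance than the bulk silicon, which is one reason a planar model does not carry over to a stack.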

One of the key drivers behind this shift is the thinning of substrates and metal layers. In the past, when all the various compute elements were on the same die, thicker metal layers and substrates didn’t have a significant impact on performance or power, and they were very effective in removing heat. But inside a package, signals need to travel from chiplets through different materials in the stack. Chiplets are thinner to meet the application’s need for lower profiles. These changes make it harder to dissipate heat, and they have led to a variety of innovative approaches, such as using vias to channel heat, adding vapor caps and inert cooling liquids in fixed systems such as server racks, and using thermal interface materials (TIMs).

All of these changes have a big impact on test strategies. Earlier corner-based thermal assumptions may not hold when devices are composed of heterogeneous stacks. Traditionally, engineers picked a few standard temperature points — often a “cold” corner, a “room-temperature” corner, and a “hot” corner — and assumed that if the device operated correctly at those discrete conditions, it would be reliable throughout its entire operating range.

Instead of modeling temperature variations continuously across the entire device or package, engineers relied on these fixed temperature setpoints as stand-ins for the device’s real-world thermal environment. By testing only at a few corners (e.g., -40°C, 25°C, and 125°C), they aimed to ensure that the device would function correctly within the entire range. However, as packaging becomes more complex and heat distribution becomes less uniform, these corner-based assumptions may miss subtle thermal gradients, localized hotspots, or dynamic temperature changes that occur in actual use. This requires more sophisticated modeling and test structures so engineers can accurately measure and interpret device behavior under varying thermal conditions.
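The gap between a corner setpoint and what the silicon actually sees can be illustrated with a small check. The sketch below assumes a hypothetical set of named on-die sensor readings taken while the handler holds a single corner temperature, and reports how far the die deviates from that setpoint. The sensor names, readings, and gradient limit are invented for illustration.

```python
def gradient_report(setpoint_c, sensor_temps_c, max_gradient_c):
    """Compare on-die sensor readings against a single corner setpoint.

    sensor_temps_c maps a sensor name to its reading in degrees C.
    """
    hottest = max(sensor_temps_c, key=sensor_temps_c.get)
    coldest = min(sensor_temps_c, key=sensor_temps_c.get)
    gradient = sensor_temps_c[hottest] - sensor_temps_c[coldest]
    return {
        "setpoint_c": setpoint_c,
        "hottest": hottest,
        "coldest": coldest,
        "gradient_c": gradient,
        # Largest deviation of any sensor from the nominal corner.
        "max_offset_c": max(abs(t - setpoint_c) for t in sensor_temps_c.values()),
        "within_limit": gradient <= max_gradient_c,
    }


# Hypothetical readings at a nominal 125 C "hot" corner.
readings = {"cpu": 141.0, "hbm": 128.5, "io": 126.0}
report = gradient_report(125.0, readings, max_gradient_c=10.0)
print(report)
```

In this made-up case the device is nominally "at 125°C," yet one block runs 16°C hotter than the setpoint, which is exactly the kind of condition a pass at the corner would not reveal.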

High frequencies and exotic materials

As operating frequencies rise toward mmWave and beyond, dynamic power increases and internal heating becomes more pronounced. At the same time, the diversity of materials used in advanced packaging can complicate test conditions. Wide-bandgap semiconductors and novel materials each have distinct thermal profiles, requiring custom setups and potentially higher temperature capabilities in test environments.

“High-frequency testing can be significantly impacted by temperature,” said Nader Abazarnia, director of global thermal systems at Advantest. “Temperature variations can affect signal integrity, amplitude, and phase, distorting the signal. Having accurate feedback from critical parts of the silicon and controlling the temperature externally during ATE testing allows more precise measurements or pattern generation.”

Reliable characterization requires more advanced thermal sensing. Engineers are incorporating on-chip thermal sensors, leveraging IR imaging or laser-based metrology, and exploring embedded instrumentation that correlates power, frequency, and thermal states. The move from a single, global thermal view to a granular, per-block or per-core understanding is key to enabling engineers to isolate localized hotspots, minimize parametric drift, and apply targeted cooling strategies. By correlating local thermal variations with device performance and functional blocks, test teams can refine test conditions, improve yield, and ensure that each component operates within its optimal thermal envelope.
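A simple way to picture the shift from a global to a per-block view is to flag blocks that run well above the package average. The following sketch assumes per-block readings are already available from on-chip sensors. The block names, temperatures, and margin are hypothetical.

```python
from statistics import mean


def find_hotspots(block_temps_c, margin_c):
    """Return block names more than margin_c above the mean, hottest first."""
    average = mean(block_temps_c.values())
    hot = [name for name, temp in block_temps_c.items() if temp - average > margin_c]
    return sorted(hot, key=lambda name: block_temps_c[name], reverse=True)


# Hypothetical per-block readings in degrees C.
blocks = {"core0": 92, "core1": 78, "serdes": 85, "sram": 70, "pll": 75}

print("global average:", mean(blocks.values()))
print("hotspots:", find_hotspots(blocks, margin_c=4))
```

The single global number looks unremarkable here, while the per-block view singles out the blocks that would need targeted cooling or adjusted test conditions.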

Power delivery and thermal density

The trend toward denser integration and multiple processing cores places substantial demands on power delivery. As HPC and AI devices scale upward, handling the resulting thermal load becomes essential. Advanced test cells may include integrated liquid cooling, specialized TIMs, and active cooling solutions right at the test head.

“The next step that is a problem now, and will continue to be even more so, is power dissipation and the thermal management related to that power dissipation,” said Jeorge Hurtarte, senior director of product strategy at Teradyne. “As these heterogeneously integrated devices advance, the ability of the ATE instrumentation to supply the needed DC power and handle the associated heat becomes a primary challenge.”

In many high-performance segments, the cost of advanced thermal solutions may be justified by the need to guarantee proper characterization. Thermal instabilities can mask latent defects or cause parameter shifts that lead to incorrect binning. Even minor temperature variations can shift transistor timing and introduce noise. As test frequencies climb, stable and well-controlled temperatures are essential for maintaining signal integrity and preventing false test results.

“If the thermal is varying, timing is varying,” said Hutner. “Your clocks might shift and skew results. Understanding how to measure and control temperature across the device is critical. The complexity of multi-die systems means we must correlate thermal conditions to device behavior, ensuring we know what’s signal and what’s thermal noise.”

This correlation allows engineers to differentiate real device issues from thermally induced anomalies. By integrating design for test (DFT) and thermal considerations early, devices can include appropriate sensors and monitors. This approach provides valuable feedback, both during design validation and production test runs.
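One way to separate a real device issue from a thermally induced shift is to fit the measured parameter against temperature and look at what is left over. The sketch below assumes the dependence is roughly linear over the range of interest, which is a simplification, and uses ordinary least squares, which is itself sensitive to outliers. The delay values and the residual limit are invented for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def thermal_residuals(temps_c, values):
    """Measured value minus the part explained by temperature."""
    slope, intercept = fit_line(temps_c, values)
    return [v - (slope * t + intercept) for t, v in zip(temps_c, values)]


def flag_outliers(temps_c, values, limit):
    """Indices whose residual magnitude exceeds limit."""
    residuals = thermal_residuals(temps_c, values)
    return [i for i, r in enumerate(residuals) if abs(r) > limit]


# Hypothetical path-delay measurements (ps) at different die temperatures.
temps = [60, 65, 70, 75, 80, 85]
delays = [112, 113, 114, 118, 116, 117]

print("slope (ps per C):", round(fit_line(temps, delays)[0], 3))
print("suspect measurements:", flag_outliers(temps, delays, limit=1.0))
```

Most of the spread in the raw numbers follows temperature. Only the measurement that departs from the fitted trend is flagged for a closer look.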

Adaptive test strategies and AI

Static test flows may yield incomplete insights into thermal behavior. As devices grow more complex, adaptive testing — where conditions adjust in real-time based on observed thermal and electrical responses — becomes appealing. By changing test vectors or adjusting power delivery dynamically, engineers can maintain stable temperature profiles and gather more meaningful data.

“We can change how many cores are active during test, and thus change the power profile,” explained Hutner. “This flexibility gives test engineers the ability to do more learning about the power-thermal relationship. With proper data infrastructure and machine learning algorithms, we can anticipate hotspots and proactively adjust test conditions.”
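The idea of changing the number of active cores to steer the power profile can be sketched as a simple feedback rule. The example below is a toy: the first-order thermal model, its coefficients, and the function names are assumptions made for illustration, not a description of any ATE or DFT product.

```python
def next_active_cores(active, temp_c, target_c, band_c, max_cores):
    """Step the active-core count down when hot, up when cool."""
    if temp_c > target_c + band_c:
        return max(1, active - 1)
    if temp_c < target_c - band_c:
        return min(max_cores, active + 1)
    return active


def simulate(steps, max_cores, target_c, band_c,
             ambient_c=25.0, deg_per_core=10.0, alpha=0.5):
    """Toy first-order thermal model (all coefficients are assumptions).

    Each step the temperature moves a fraction alpha toward the steady
    state implied by the current number of active cores.
    """
    temp = ambient_c
    active = max_cores
    history = []
    for _ in range(steps):
        steady = ambient_c + deg_per_core * active
        temp += alpha * (steady - temp)
        active = next_active_cores(active, temp, target_c, band_c, max_cores)
        history.append((active, round(temp, 2)))
    return history


for step, (cores, temp) in enumerate(simulate(20, 8, target_c=85.0, band_c=3.0)):
    print(step, cores, temp)
```

In this toy run the controller overshoots, backs off, and then holds a core count that keeps the simulated temperature inside the target band. A production flow would replace the model with real sensor feedback.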

Machine learning could analyze massive datasets generated during testing, identifying patterns and predicting thermal challenges before they become critical. AI may help interpret subtle correlations between test parameters and temperature, guiding engineers to refine test sequences and improve yield.

“If we can combine thermal data with electrical test results, we start to see patterns that help us predict where issues might arise,” added Hutner. “That can ultimately allow us to refine test flows, improve yield, and catch latent defects that only show up under certain thermal conditions.”

This data-driven approach helps ensure test outcomes remain stable and representative of the device’s real operating environment. As advanced packaging and 3D integration push test methodologies to new levels of complexity, adaptive strategies and AI-driven analytics will play a pivotal role in navigating the thermal landscape.

3D integration and chiplets

As chiplets become more common, each die within a package may have unique thermal signatures, stressing the importance of thermal planning at the system level. A stack’s overall performance might hinge on its thermally weakest die. Recognizing these differences early helps ensure balanced performance and avoids later reliability issues.

“This includes RTL level optimization – how to partition a large SoC into smaller dies and chiplets to achieve optimal PPA including thermal performance,” said Shawn Nikoukary, senior director services solution team at Synopsys. “Die partitioning needs to be co-designed with package floorplanning and consider thermal simulations—so if I place the dies in certain configuration in my package, how does the thermal performance look like? What if I move a certain block into another partition with a different node? That drives the design decisions.”

These thermal considerations influence how designers choose nodes, materials, and die configurations. A carefully planned 3D architecture might cluster similar thermal profiles together, or strategically locate high-power blocks adjacent to superior heat spreaders. As a result, test engineers must correlate temperature gradients and power consumption patterns to verify that these thermal assumptions hold true under real-world operating conditions. Early modeling and simulation, combined with iterative architecture exploration, help avoid blind spots that only become apparent after devices are fabricated and assembled.

In addition, ensuring known-good thermal behavior, not just known-good die, affects binning and yield. Identical chiplets can still differ in thermal and electrical characteristics. Identifying a die with sub-optimal thermal response early in the process can prevent mismatches that reduce system performance. The key is not only to measure temperatures at a few fixed points, but to dynamically correlate them with electrical test results.

“Thermal conductivity is a key factor in thermal management solutions in ATE test equipment,” said Abazarnia. “High thermal conductivity for the interface to the DUT is crucial, and using thermal interface materials in the contact areas helps ensure uniform contact and reduce hotspots.”

Incorporating photonics and interposers

The rise of chiplet-based systems increasingly includes photonics for high-speed I/O, and managing the thermal environment for these optical components presents new challenges for test. Some companies are integrating optical functionality within interposers, taking advantage of CMOS-based manufacturing to add layers of optical and electrical interconnects with built-in thermal solutions.

“We build integrated heat spreaders and things of that like in the interposer, so that when lasers are mounted on the interposer, there are spreaders built in that enable thermal flow,” said Suresh Venkatesan, chairman and CEO at POET Technologies. “We’ve inherently addressed crosstalk and thermal management by design. With photonics in the interposer, we can ensure that optical and electrical signals co-exist without degrading each other’s performance.”

By incorporating photonic devices at wafer scale in a platform that also supports advanced thermal management, test engineers gain more predictable conditions and reduce complexity. This approach aligns with the broader industry trend of leveraging advanced substrates, interposers, and 3D integration to optimize thermal, electrical, and now optical paths.

Substrates and structures

Exploration of new substrate materials and interconnect schemes offers opportunities to improve thermal management. Silicon interposers, glass substrates, and fan-out wafer-level packaging can influence how heat is spread and extracted. Each substrate choice and interconnect pitch affects the thermal environment that test engineers must maintain.

“IC packages with multiple die could exhibit hot spots in multiple locations,” said Abazarnia. “These devices would benefit from technologies enabling independent control of hotspots — multi-zone thermal control — or socket designs and materials that can spread heat and integrate forced-convection cooling. We’re developing kits to make forced-convection integration a simple add-on.”
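Independent control of hotspots can be pictured as one small control loop per zone. The sketch below uses a plain proportional rule with a clamped output. The zone names, gain, and command scale are hypothetical, and a real thermal head would likely need integral action, rate limits, and safety interlocks that are omitted here.

```python
def zone_commands(setpoints_c, measured_c, gain, max_cmd=1.0):
    """Proportional command per zone, clamped to [-max_cmd, max_cmd].

    Positive values request cooling, negative values request heating.
    """
    commands = {}
    for zone, target in setpoints_c.items():
        error = measured_c[zone] - target
        commands[zone] = max(-max_cmd, min(max_cmd, gain * error))
    return commands


# Hypothetical three-zone thermal head over a multi-die package.
setpoints = {"zone_a": 85.0, "zone_b": 85.0, "zone_c": 85.0}
measured = {"zone_a": 97.0, "zone_b": 85.0, "zone_c": 83.0}

print(zone_commands(setpoints, measured, gain=0.1))
```

Each zone reacts only to its own error, so a hot die under one zone gets full cooling while a neighboring zone that is slightly cold gets a small heating request.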

At finer pitches and with advanced bonding techniques, designers have more ways to influence thermal behavior. Vertical stacking can improve performance, but it also complicates cooling. This is why there is renewed activity around microfluidics and thermal interface materials that must be tested and validated.

As wafer-to-wafer bonding and other advanced steps move earlier in the fabrication cycle, thermal considerations once confined to back-end packaging steps become relevant at the front end. By the time a device reaches final test, thermal constraints and solutions already may have been shaped by choices made in front-end processing.

Put simply, thermal management is no longer isolated. Designers, foundries, OSATs, and test equipment suppliers must cooperate more closely. And EDA vendors and test engineers are working together to create simulation, modeling, and DFT solutions that consider temperature from the start.

“We’re talking millions and millions of bumps and complex routing structures, and we have to have auto routers, more automated ways to do extractions and run thermal simulation,” said Nikoukary. “All this complexity is adding challenges to the EDA side, pushing us to develop new tools and methodologies that can handle these advanced packaging and thermal requirements.”

Scaling up solutions

Adopting advanced thermal management techniques at volume poses a non-trivial challenge. While high-performance computing (HPC) parts can justify the expense of integrated cooling systems, specialized thermal chucks, or liquid-cooled test heads, other device segments require cost-effective solutions that still ensure temperature stability and repeatability. As test floors host dozens or even hundreds of test cells, maintaining consistent thermal profiles and data correlation across different sites and shifts is critical.

“The test conditions must be stable and repeatable across multiple test cells, shifts, and factories,” said Hurtarte. “If different floors use slightly different cooling strategies, the results won’t align, complicating yield analysis and binning. Standardization in thermal management, materials and sensor calibration is essential.”
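Checking that cells agree with one another can start with something as simple as comparing each cell's average reading against the rest of the fleet. The sketch below assumes every cell logs the temperature of the same reference device at the same setpoint. The cell names, readings, and tolerance are invented, and the median is used as the fleet reference so that a single bad cell does not drag the baseline.

```python
from statistics import mean, median


def cell_offsets(readings_by_cell):
    """Offset of each cell's mean reading from the fleet median, in C."""
    cell_means = {cell: mean(vals) for cell, vals in readings_by_cell.items()}
    fleet_reference = median(cell_means.values())
    return {cell: m - fleet_reference for cell, m in cell_means.items()}


def out_of_family(readings_by_cell, tolerance_c):
    """Cells whose offset magnitude exceeds tolerance_c, sorted by name."""
    offsets = cell_offsets(readings_by_cell)
    return sorted(cell for cell, off in offsets.items() if abs(off) > tolerance_c)


# Hypothetical reference-device readings (degrees C) from three cells.
readings = {
    "cell_01": [85.1, 84.9, 85.0],
    "cell_02": [85.2, 85.0, 84.8],
    "cell_03": [87.9, 88.1, 88.0],
}

print(out_of_family(readings, tolerance_c=1.5))
```

A cell flagged this way would be a candidate for sensor recalibration or a check of its cooling setup before its results are mixed into yield analysis.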

This standardization supports the consistent implementation of advanced cooling methods, embedded sensors, and machine learning-driven analytics. Establishing best practices for thermal head design, TIMs, and fluid properties can minimize variability, ensuring that devices are tested under comparable thermal conditions worldwide.

“There seems to be a need to test not only structural test but also limited burn-in and functionality (SLT) with some of the latest packaging architectures,” said Abazarnia. “These new requests are driving not only changes to the test content and load board but also a convergence in the test thermal performance across back-end test and SLT in one socket. The lines between ATE test, SLT and in some cases burn-in are being blurred and may all happen in one socketing.”

With industry-wide guidelines, robust supply chains for cooling materials, and proper training, advanced thermal management solutions can become accessible to a broader range of devices. And by treating thermal strategies as a coordinated and standardized effort, rather than a one-off engineering feat, the industry can maintain quality, improve yield, and control costs even as devices grow more complex and thermally demanding.

Conclusion

As scaling strategies shift from planar shrinking toward volumetric integration, thermal management is taking on a central role in enabling next-generation performance. Novel cooling solutions, more accurate models, adaptive test protocols, and machine learning insights all point the way toward a more thermally aware future.

“With proper data infrastructure and machine learning algorithms, we can anticipate hotspots and proactively adjust test conditions,” added Hutner. “As devices become more complex, this proactive stance will be key to managing thermal conditions and ensuring reliable performance.”

Thermal issues, once peripheral, now intersect with core design, manufacturing, and testing decisions. By proactively addressing these challenges, the industry can continue delivering higher performance, better efficiency, and more reliable devices as integration scales upward. A holistic approach, rooted in collaboration and early planning, will be key to managing the thermal landscape of advanced semiconductor testing.
