Toward a Software-Defined Hardware World

Software-defined hardware may be the ultimate shift-left approach. Chip design is growing closer than ever to true co-design, with potential capacity baked into the hardware and greater functionality delivered over the air or via software updates. This marks another advance in the quest for lower power, and one so revolutionary that it is upending traditional ideas about model-based systems engineering (MBSE).

“Software-defined hardware constitutes a halfway point between ASIC and FPGA design. It is a particularly attractive solution for scenarios where the compute needs to be reconfigured very often,” said Guillaume Boillet, senior director of product management & strategic marketing at Arteris. “Its use is particularly attractive in areas where the algorithms are quickly evolving. Today, it is also very relevant for defense, IoT, and AI applications.”

Traditionally, software and hardware were developed separately and then integrated. Software-defined hardware tackles that integration from the outset of the design process. The software defines a system’s required functionality, and the silicon must be malleable enough for design teams to optimize the hardware for that functionality.

“With modern software-defined products, the algorithm is defining almost every aspect of the electronics as well as the physical systems,” said Neil Hand, director of marketing at Siemens EDA. “In cyber-physical systems, the software informs the hardware choices, informs the electronics choices, informs everything. From the very early system architecture, EDA helps quantify the decomposition of the algorithm into physical and then electronic systems. Then, as you get into the decomposition of the electronics part, you look at synthetic workloads to understand the choices to be made.”

This opens the door to much more customization and optimization in chip architectures, which has become especially necessary for leading-edge designs that are increasingly domain-specific and heterogeneous. “You can do things now in a software-defined context that never would have been possible before,” said Rob Knoth, product management director at Cadence. “You can react to new situations and add capabilities that weren’t originally planned for, and that’s tremendously powerful.”

Shifting left, shifting mindsets

In this new world, it isn’t just design planning that needs to shift. Expectations about product lifespans are changing, too. According to Consumer Reports, the average life expectancy of a car is now up to 200,000 miles.

“The extended consumer products upgrade cycles and the decade-long automotive electronics lifespan have definitely driven the adoption of the SDH approach,” said Arteris’ Boillet.

Indeed, the industry is changing from a mindset in which both software and hardware have short lifecycles to something closer to a space/military mindset, where hardware upgrades are hugely expensive and not to be taken lightly. The average consumer is unlikely to buy a new car just because the manufacturer is pushing an ADAS upgrade.

“If necessary, you can replace a card, which will give the device a new interface without necessarily changing the network stack,” said Hand. “In a car, for instance, maybe you update one of the ECUs to give it more power. In fact, you even see that with some of the Tesla cars that are out there already, where the full self-driving hardware requires a hardware update, but it’s still a software-defined system.”

But how many times will consumers go to the dealership for hardware upgrades? That could be a moot point, because there is also evidence that increasing electrification could shorten vehicle lifecycles relative to the six-figure mileage projections. This is where the advantages of a software-defined architecture become evident.

“Instead of having to buy a whole new car, you can just pay a subscription fee where new features are unlocked,” said Knoth. “Think about what that does for the overall cost of manufacturing. It can deliver a significant amount of profit if a manufacturer doesn’t have to maintain SKUs in warehouses, as well as a much better environmental footprint, because the consumer is not throwing away the old device and it can work better. As algorithms get smarter based on more training data from more cars on the road, safety goes up beyond what it was when the car was purchased.”

Another issue, particularly for security, is achieving broad and immediate adoption of updates. It’s one thing to worry about thousands of consumers who do not upgrade their browsers ahead of zero-day exploits. It’s quite another when thousands of car owners have not bothered with a software/hardware maintenance check.

“If your device is software-definable, that involves very intimate access to everything that’s going to operate the device, including allowing an over-the-air update to reprogram it,” Knoth said. “You have to think about security every step of the way, because you’ve just opened a tremendous backdoor.”
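One basic safeguard against that backdoor is for the device to refuse any update image it cannot authenticate. The sketch below is only an illustration of the idea: it uses a shared-key HMAC-SHA256 from Python’s standard library so that it stays self-contained, whereas production over-the-air systems typically rely on asymmetric signatures with keys anchored in a hardware root of trust. The key, the function names, and the version-based rollback check are hypothetical.

```python
import hashlib
import hmac

# Hypothetical device-provisioned key; a real device would keep it in secure storage.
DEVICE_KEY = b"example-provisioned-key"


def sign_image(image: bytes, version: int, key: bytes = DEVICE_KEY) -> bytes:
    """Tag a (hypothetical) update server computes over the version number plus image."""
    msg = version.to_bytes(4, "big") + image
    return hmac.new(key, msg, hashlib.sha256).digest()


def accept_update(image: bytes, version: int, tag: bytes,
                  installed_version: int, key: bytes = DEVICE_KEY) -> bool:
    """Accept only authentic images that are newer than what is already installed."""
    expected = sign_image(image, version, key)
    if not hmac.compare_digest(expected, tag):
        return False  # tampered image, wrong key, or mislabeled version
    if version <= installed_version:
        return False  # refuse rollback to an older, possibly vulnerable, image
    return True
```

Binding the version number into the authenticated message means an attacker cannot replay an old, validly tagged image under a newer version number.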

Still, the downside for security in software-defined hardware, especially software-defined vehicles, could be matched by an upside for safety. “You can react to new capabilities, which weren’t originally planned for. As algorithms get smarter based on more training data coming from more cars on the road, safety systems can go beyond what they were when the consumer bought the car,” Knoth said.

Design decisions throughout an ecosystem

From a development point of view, design choices must go beyond merely balancing hardware/software tradeoffs to also consider mechanical issues. “For example, you’ve got a car with emergency braking or pedestrian-avoidance braking,” Hand said. “If you build a digital twin of the design, and you’re testing it and realize you’re going to miss the braking distance by a meter, and the car is now parked on top of the crash test dummy instead of stopping, you have many choices. You could include bigger brakes, stronger hydraulics, faster actuators, or even better sensors with faster compute to save those few milliseconds. It becomes a tradeoff, and the whole ecosystem of that design environment needs to work together to discover the most effective resolution, because it may turn out it could be best solved mechanically, not through software or hardware.”
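Hand’s braking example can be made concrete with the common stopping-distance approximation d = v·t_r + v²/(2a), where t_r is the end-to-end latency (sensing, compute, actuation) and a is the braking deceleration. The sketch below is illustrative only: the 50 km/h speed, 0.3 s latency, and 7 m/s² deceleration are assumed values, not figures from any real vehicle. It asks how much latency faster compute would have to remove, or how much extra deceleration bigger brakes would have to deliver, to recover one meter.

```python
"""Compare two ways to recover a 1 m stopping-distance shortfall.

All numbers are illustrative assumptions, not data from any real vehicle.
"""


def stopping_distance(speed_mps: float, latency_s: float, decel_mps2: float) -> float:
    """Distance covered during the latency plus distance while braking at constant decel."""
    return speed_mps * latency_s + speed_mps ** 2 / (2.0 * decel_mps2)


def latency_saving_needed(speed_mps: float, shortfall_m: float) -> float:
    """Latency reduction (s) that removes `shortfall_m` of distance at this speed."""
    return shortfall_m / speed_mps


def decel_needed(speed_mps: float, latency_s: float, target_m: float) -> float:
    """Deceleration (m/s^2) required to stop within `target_m` for a fixed latency."""
    braking_budget = target_m - speed_mps * latency_s
    if braking_budget <= 0:
        raise ValueError("target distance is used up before braking even starts")
    return speed_mps ** 2 / (2.0 * braking_budget)


if __name__ == "__main__":
    v = 50 / 3.6      # assumed 50 km/h, converted to m/s
    t_r = 0.30        # assumed end-to-end latency in seconds
    a = 7.0           # assumed braking deceleration in m/s^2
    shortfall = 1.0   # the "one meter" miss from the example

    d = stopping_distance(v, t_r, a)
    print(f"baseline stopping distance: {d:.2f} m")
    print(f"compute option: cut latency by {latency_saving_needed(v, shortfall) * 1000:.0f} ms")
    print(f"brake option: raise decel to {decel_needed(v, t_r, d - shortfall):.2f} m/s^2")
```

At 50 km/h, one meter corresponds to about 72 ms of end-to-end latency, which gives a feel for why faster compute and bigger brakes can be weighed against each other as interchangeable levers.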

This approach isn’t unusual in the semiconductor industry, but it may be viewed very differently in other industries. “A lot of mechanical systems companies are just starting to learn more of these techniques that we in semiconductors take for granted,” said Knoth. “We produce systems that are absurdly complex in their interactions, and we’re able to simulate all that before manufacturing. If you’re a maker of a physical system, and you want to start shifting left, it requires you to have a lot more available earlier in your design process, and more use of aids, such as CFD modeling and digital twinning. There is a lot more that has to be mature earlier than these industries are traditionally used to, and that can make things extremely challenging. You’re going to be adding a lot of cost, complexity, and risk into a system that never needed it before.”

It also means re-thinking reliability, which may require more self-monitoring to detect problems in devices whose useful lives can be extended with software updates. That leverages some of the technologies developed for fault-tolerant automotive hardware, as well as using AI for predictive maintenance.

“The longer you hold on to an item, the more reliability is a concern,” said Knoth. “Also, the more safety-critical that item is, the more reliability becomes an even more important concern. And so, as electronics get more pervasive, whether it’s moving into more safety-critical applications like self-driving cars or industrial automation, or if you’re concerned about e-waste and you’re trying to get people to hold on to their devices for longer, the more you have to think about reliability. While it may seem the software-defined trend will make reliability worse, it can actually help, because you’re now building in the assumption that the product is going to be in use longer. The software on the device can be harvesting and leveraging data to give you early warnings for when a device might be wearing out, and giving you proactive information about maintenance or shutting off some features if it looks like the hardware they’re running on is starting to wear out. The consumer may not get as many bells and whistles, but the core device is still able to work. We’ve worked with some partners in this space, where you’re leveraging on-device sensors and lightweight, smart on-product diagnostics, and AI to help sense patterns in the data. All of that helps protect against reliability problems as much as possible.”
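A minimal sketch of the on-device idea Knoth describes, assuming a single health metric that rises as the hardware ages (for example, a hypothetical leakage-current or path-delay reading), is an exponentially weighted moving average compared against two thresholds: one that triggers an early maintenance warning and one that sheds optional features while keeping the core device running. The metric, smoothing factor, and thresholds are assumptions; real on-product diagnostics would use richer models.

```python
from dataclasses import dataclass, field


@dataclass
class WearMonitor:
    """Flags gradual drift in a health metric using an exponentially weighted moving average.

    `baseline`, `alpha`, and both thresholds are hypothetical tuning values.
    """
    baseline: float
    alpha: float = 0.1            # EWMA smoothing factor
    warn_ratio: float = 1.10      # 10% above baseline -> early maintenance warning
    degrade_ratio: float = 1.25   # 25% above baseline -> shed optional features
    ewma: float = field(init=False, default=0.0)

    def __post_init__(self) -> None:
        # Start the average at the healthy baseline.
        self.ewma = self.baseline

    def update(self, sample: float) -> str:
        """Fold in one sensor sample and return the recommended device state."""
        self.ewma = self.alpha * sample + (1 - self.alpha) * self.ewma
        ratio = self.ewma / self.baseline
        if ratio >= self.degrade_ratio:
            return "degrade"   # keep core functions, disable optional features
        if ratio >= self.warn_ratio:
            return "warn"      # schedule proactive maintenance
        return "ok"
```

Smoothing keeps a single noisy reading from switching features off, at the cost of reacting a few samples later to a genuine trend.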

Reliability and extensibility require a non-trivial design calculation of what constitutes useful extras versus what is just wasteful over-design. Digital twins can help here, because they allow for interactive modeling and updating. So can chiplets, by providing modularity and flexibility. Today, chiplet-based reference flows and designs allow for more modular system architecture exploration, including the creation of software digital twins, so that more simulations, software development, software testing, and other techniques can be leveraged.

Take jet engines, for example. “Digital twins mean that every specific engine with a specific serial number has a model running in the main office,” explained Marc Swinnen, product marketing director at Ansys. “Every time that plane takes off and lands, all that information is sent back and fed into the model, so they can predict when that engine is running into trouble because of how often and in what conditions it’s being flown.”
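Swinnen’s example amounts to one model per serial number, updated after every flight. A toy version, assuming a simple linear damage-accumulation rule in which harsher operating conditions consume more of a fixed life budget, might look like the following. The severity factors, life budget, condition labels, and serial numbers are all hypothetical; real engine models are far more detailed.

```python
from __future__ import annotations

# Hypothetical severity multipliers: harsher conditions consume more life per cycle.
SEVERITY = {"normal": 1.0, "hot": 1.5, "sandy": 2.0}
LIFE_BUDGET = 10_000.0   # hypothetical damage units before maintenance is due


class FleetTwin:
    """Keeps one accumulated-damage model per engine serial number."""

    def __init__(self) -> None:
        self.damage: dict[str, float] = {}

    def record_flight(self, serial: str, condition: str, cycles: int = 1) -> None:
        """Fold one flight's telemetry (reduced here to condition + cycles) into the twin."""
        self.damage[serial] = self.damage.get(serial, 0.0) + SEVERITY[condition] * cycles

    def remaining_fraction(self, serial: str) -> float:
        """Fraction of the life budget left for this engine (1.0 if never seen)."""
        return max(0.0, 1.0 - self.damage.get(serial, 0.0) / LIFE_BUDGET)

    def due_for_maintenance(self, threshold: float = 0.1) -> list[str]:
        """Serial numbers whose remaining life has fallen below `threshold`."""
        return sorted(s for s in self.damage if self.remaining_fraction(s) < threshold)
```

Linear accumulation is the simplest possible choice; it ignores interactions between operating conditions, which is where a richer physics-based twin earns its keep.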

In a software-defined hardware environment, that information could then be used to create an over-the-air update.

This is one reason why software-defined hardware has been so prominent in automotive design. “At the 2024 Automotive Computing Conference, Mercedes illustrated the concept of an ‘Autonomous Drive Unit’ (ADU) as an approach away from the classic assembly of computing blocks to fully leveraging heterogeneous architectures,” said Frank Schirrmeister, executive director for strategic programs, system solutions, at Synopsys.

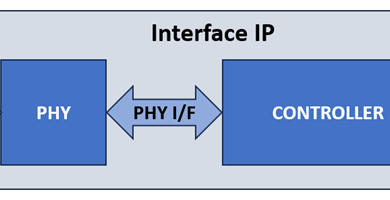

Software-defined hardware also has increased the attention paid to embedded FPGA (eFPGA) technologies. “At the recent Chiplet Summit, there were discussions centered around keeping some of the protocol layers, network-on-chip (NoC) adaptors and some of the actual functionality in eFPGA regions of a chiplet,” said Schirrmeister. “One of the reasons cited was future-proofing in the context of a rapidly evolving landscape of chiplet interfaces, leaving the final configuration to software. Time will tell.”

“If the vision of a thriving multi-die ecosystem becomes a reality, one can expect the emergence of mainstream chiplets acting as software-defined hardware building blocks with the associated software reconfigurability solution,” said Boillet. “This could accelerate the standardization of leading solutions.”

Re-thinking MBSE

The move to software-defined hardware is also leading to the re-thinking of traditional MBSE.

“Model-based systems engineering, as it’s defined today, doesn’t cut it because of the rise of the software component,” said Siemens’ Hand. “We refer to it as ‘model-based cybertronic systems engineering,’ because the cybertronic concepts are at the top of the stack. It’s not a decomposition anymore. It’s a constant co-design across all of the different systems because any change can impact any other system, and you can’t ignore them. If you wait to do the traditional decompose, implement, integrate, and test, it’s too late.”

Fig. 1: Rethinking cybertronic systems engineering. Source: Siemens EDA

In a model-based system, a designer creating a car knows what the components, parameters, and other hardware/performance requirements need to be, all of which would be tracked through system modeling software. Given those standard elements, along with specific requests, such as the emissions rules for a state or country, the designer can decide on necessary components and make black-box assumptions about their capabilities and how they’ll fit into the whole system of systems.

“Traditionally, in system design, you make sure you’ve met your requirements, and as long as you have, the world is a happy place,” Hand said. “But in the new reality of software-defined hardware, that process starts to break down when there are a lot of software components. Now you’re integrating your software with your hardware very late in the process, and only then can you recognize a problem. But if you only realize it when the plane can’t do its control surfaces correctly, the abstraction just doesn’t work. With software-defined systems, the requirements themselves drive a set of system management requirements, which is spread across the whole device. That, in turn, might give additional system management requirements. For example, a car has battery management, which has the control electronics for that battery, which then impacts the batteries themselves, which impacts the packaging, which impacts the chassis design. You could have a minor software change in your battery management that has a direct impact on your chassis design, so you can’t abstract a lower-level block anymore because a change in a lower-level block could change the top-level design requirements.”
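Hand’s battery-management chain is essentially a dependency graph: a change in one block can propagate up to requirements that were assumed fixed. A breadth-first traversal over a hypothetical “X impacts Y” graph shows every block a single change can reach. The node names and edges below simply mirror the chain in the quote; they are illustrative, not a real vehicle model.

```python
from __future__ import annotations

from collections import deque

# Hypothetical "X impacts Y" edges mirroring the chain in the quote.
IMPACTS = {
    "bms_software": ["battery_control_electronics"],
    "battery_control_electronics": ["battery_cells"],
    "battery_cells": ["battery_packaging"],
    "battery_packaging": ["chassis"],
    "chassis": [],
}


def impacted_by(change: str, graph: dict[str, list[str]]) -> list[str]:
    """Return every block reachable from `change`, in breadth-first order."""
    seen = {change}
    order: list[str] = []
    queue = deque([change])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order
```

If “chassis” appears in the result for what started as a software change, the lower-level block cannot be treated as a black box, which is the breakdown of abstraction Hand describes.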

To resolve this new challenge, cybertronic engineering has to link all of these domains simultaneously. “You can’t serialize these,” said Hand. “They’re all working in parallel, but you have to bring them back together. When you start to get into these model-based cybertronic systems, you’ve got a decomposition from the platform to the compute to the 3D-IC, and you need a method to push down requirements all the way through, while simultaneously making sure items are still behaving as one expects. You’ve got an architecture model that is your golden model, which is then going into a set of requirements and parameters, in what we refer to as verification capture points. And then, as you push down, they stay consistent. But what you’re checking is hierarchical level-specific. Therefore, you can start to get into this idea of, ‘No matter what I’m testing, it plugs into this overall modeling environment that allows us to understand where we are at any given point and how the whole system comes together.’ Cybertronic systems is the idea that you’re being driven by the coming together of software, hardware, electrical systems, mechanical systems, and multi-domain physics, and you’ve got to start looking at them holistically. You do that by building a digital twin. And once you’ve got the digital twin, you need to have verification traceability that goes across multiple domains.”
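One way to picture requirements pushed down from a golden model is a tree in which each level carries its own level-specific check, and any failing check can be traced back to the top-level requirement it serves. The class, field, and requirement names below are hypothetical, and the “capture points” are modeled loosely as per-node pass/fail results; this is a sketch of the traceability idea, not a description of any vendor’s tooling.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Requirement:
    """A node in a hypothetical requirement hierarchy (platform -> compute -> 3D-IC)."""
    name: str
    level: str
    passed: bool | None = None   # result at this level's capture point; None = not yet checked
    children: list[Requirement] = field(default_factory=list)

    def status(self) -> bool | None:
        """Pass only if this check and all children pass; None if anything is unchecked."""
        results = [self.passed] + [c.status() for c in self.children]
        if any(r is False for r in results):
            return False
        if any(r is None for r in results):
            return None
        return True

    def failing_paths(self, prefix: tuple[str, ...] = ()) -> list[tuple[str, ...]]:
        """Trace each failing check back up to the top-level requirement."""
        path = prefix + (self.name,)
        found = [path] if self.passed is False else []
        for child in self.children:
            found.extend(child.failing_paths(path))
        return found
```

Because every check hangs off the same tree, a failure found at the 3D-IC level is immediately visible as a threat to the platform-level requirement above it.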

Data management and other impacts

What holds all of these areas together is data, which leads to the world of data management. “If I’m going to verify 2,000 requirements, there are some requirements I can verify in simulation that I can’t verify in hardware tests, because I can’t probe at every single point in a chip in the hardware,” said Chris Mueth, new opportunities business manager at Keysight. “It’s expensive. There are some things that it makes a lot more sense to verify in hardware test land because it would take forever to simulate. As such, most verification programs have both these elements, and they take pieces of it and try to shove the data together and make sense of it. What they also want to do is push everything to the left and do more in design verification, because it’s expensive once you go to fabricate something. Based on a survey we conducted, about 95% of the customers that responded said they want to correlate the design and hardware test results together, but now they’re dealing with an exponential set of data. When you digitize this process and move between the virtual world and the hardware world, you’re flowing down requirements, plans, processes, and IP in a digital state through the workflow. When you come back, you’re flowing back lots of results data from simulations and tests, and you’re doing your analytics and models. Those are the things that flow back to the virtual state to update those systems.”

What can people do with the data downstream? “Part of it is updating those models,” Mueth said. “Part of it is correlating and making sure that my design and my test results match. If they don’t match, I have a big problem. Either I didn’t model this correctly, or something’s wrong in the design and I’d better figure it out. We’ve had a number of customers who have said that if they have a missed correlation, they’ll put a stop on the whole process until they figure out what’s going on. So it’s really important that this happens. Then, tying these things together, you do it through engineering lifecycle management, and work across different layers. At a mission level, I might be dealing with functional requirements of a mission, ‘What if my call drops for a given communication scenario?’ Whereas down here in subsystem land, where a lot of EDA companies play, they’re mostly saying, ‘Give me the electrical or mechanical requirements, and I’ll simulate that.’ There’s a hierarchy stack, and it works across different domains. For data analytics, in a system-of-systems, you are dealing with multiple disciplines, including mechanical, software, and electrical. All the data that’s produced and collected there needs to be analyzed.”
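The correlation step Mueth describes, comparing simulated predictions against hardware measurements and halting when they disagree, can be sketched as a per-parameter tolerance check. The parameter names, the 5% relative tolerance, and the choice to treat a parameter missing from either data set as a mismatch are all assumptions made for illustration.

```python
from __future__ import annotations

import math


def correlate(simulated: dict[str, float], measured: dict[str, float],
              rel_tol: float = 0.05) -> dict[str, tuple[float, float]]:
    """Return parameters whose measured value deviates from simulation beyond rel_tol.

    A parameter present in only one data set is reported with NaN for the missing side,
    because an unmatched result is itself a correlation gap.
    """
    mismatches: dict[str, tuple[float, float]] = {}
    for name in sorted(simulated.keys() | measured.keys()):
        sim = simulated.get(name, math.nan)
        meas = measured.get(name, math.nan)
        if math.isnan(sim) or math.isnan(meas) or not math.isclose(sim, meas, rel_tol=rel_tol):
            mismatches[name] = (sim, meas)
    return mismatches


def gate(mismatches: dict[str, tuple[float, float]]) -> None:
    """Stop the flow on any miscorrelation until someone explains it."""
    if mismatches:
        raise RuntimeError(f"design/test miscorrelation: {sorted(mismatches)}")
```

For example, with a simulated gain of 20.0 dB against a measured 20.4 dB, the gain passes at 5% tolerance, while a noise figure simulated at 3.0 dB but measured at 3.6 dB would be flagged and stop the flow.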

There are other issues to consider, as well. “Historically, there have been multiple proprietary initiatives to do hardware/software co-design,” said Boillet. “Today, with the advent of symmetric multi-processing and configurable instruction set extensions, like with RISC-V, more mainstream flows are emerging to adapt hardware to the software workload. DARPA, in the context of the Electronics Resurgence Initiative (ERI), also contributes to formalizing how software-defined hardware should be achieved. In general, the resulting designs are gigantic fabrics of processors and those require very configurable interconnects.”

There is another important factor that cannot be overlooked, as well. “Software-defined configurability of the hardware also impacts the software that executes on the re-defined hardware,” said Schirrmeister. “At one point, both items need to be stable and work with each other. As a result, more efficient updates and management of the required hardware abstraction layers will become an important topic.”
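Once hardware can be reconfigured, software has to confirm that it is talking to a hardware configuration it was built for. A minimal sketch, assuming the reconfigurable block reports a configuration version and a set of capability flags (both hypothetical), is a hardware abstraction layer that checks compatibility before binding a driver and falls back to a software path when a capability is missing. The versioning rule used here (major must match, minor must be at least the required one) is an assumption, not a standard.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class HwConfig:
    """What a (hypothetical) reconfigurable block reports about its current configuration."""
    major: int
    minor: int
    capabilities: frozenset[str]


@dataclass(frozen=True)
class DriverRequirement:
    major: int             # must match exactly: assume a major change breaks the register map
    min_minor: int         # assume minor revisions are backward compatible
    needs: frozenset[str]  # capabilities required for the accelerated path


def select_path(hw: HwConfig, req: DriverRequirement) -> str:
    """Pick the accelerated path, a software fallback, or refuse to bind."""
    if hw.major != req.major or hw.minor < req.min_minor:
        return "incompatible"
    if req.needs <= hw.capabilities:   # subset test: every needed capability is present
        return "accelerated"
    return "software_fallback"
```

Making the compatibility decision explicit in one place is one way to keep both sides stable as either the hardware configuration or the software evolves.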

Conclusion

The challenges of software-defined hardware are best addressed with a mix of semiconductor and domain knowledge, drawing on lessons from the approaches of a wide range of manufacturers.

“We’re all motivated to solve this problem. If you work for an automotive OEM, you don’t have to figure it out by yourself. You can look to other industries,” said Knoth. “It’s not trivial but it’s a fascinating area for a design challenge. It’s a beautiful problem, because the compute system, the wiring harnesses, the sensors, all of that can ripple throughout the application. And it takes a wickedly good crystal ball to know where you’re future-proofing versus where you’re just raising the cost of the vehicle.”
