Google Joins The Homegrown Arm Server CPU Club – The Next Platform

If you are wondering why Intel chief executive officer Pat Gelsinger has been working so hard to get the company’s foundry business not only back on track but utterly transformed into a merchant foundry that, by 2030 or so, can take away some business from archrival Taiwan Semiconductor Manufacturing Co, the reason is simple. The handful of companies that have grown to represent more than half of server CPU shipments and nearly half of revenues are all going to make their own compute engines.

If you can’t sell ’em, fab ’em.

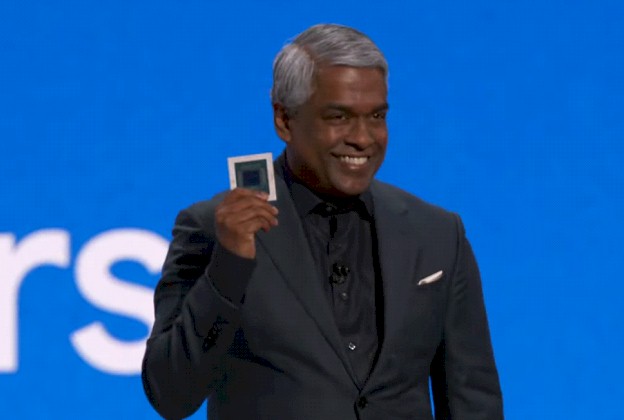

At the Google Cloud Next 2024 show in Las Vegas – Alphabet chief executive officer Sundar Pichai called it a “show” in his recorded keynote address, not a conference, and the label is accurate considering the lack of details in many of its announcements – Google just entered the Arm server CPU fray with its own Neoverse V2 design, which will be talked about and rented under the brand name Axion.

We say talked about and rented rather than sold because, like the other hyperscalers and cloud builders who have designed their own CPUs, DPUs, and AI accelerators – what we commonly call XPUs these days – Google does not intend to sell these Axion server CPUs to anyone else. The chips have one and only one real customer, and Google has sufficient volumes and a sufficient need to control its own supply chain and destiny that it can now save money by designing its own CPUs and having them fabbed.

The wonder, when you think about it, is that Google didn’t create its own server CPUs sooner. The truth is that for years Google could get special SKUs and special deals from AMD or Intel, so it wasn’t worth the trouble. It wasn’t until the advertising business got squeezed and GenAI started putting pressure on search that Google had a rethink.

Rival Amazon Web Services launched its first Graviton Arm server CPU more than five years ago and is getting ready to ramp its fourth generation Graviton4 chips. The Graviton4 was launched last November and sports 96 of the “Demeter” V2 cores created by Arm Ltd for its Neoverse server platforms. Nvidia also uses the V2 core, putting 72 of them in its “Grace” CG100 server CPU. Also last November, Microsoft announced its homegrown 128-core Cobalt 100 Arm server CPU, which is based on the earlier “Perseus” N2 cores from Arm and which has two 64-core chiplets in the package. The Grace CPU is a monolithic design, while the Graviton3 and Graviton4 are based on chiplets, with their memory and I/O controllers broken free of their monolithic core tiles. There are other Arm server CPUs, of course.

The fact that Google is using the Demeter V2 cores, and not the “Poseidon” V3 or “Hermes” N3 cores that Arm revealed in February of this year, shows you that the search engine and cloud behemoth has been working on the Axion chips for a while.

Rumors surfaced back in February 2023, in fact, that Google was working on two different Arm server CPUs. One, codenamed “Maple,” is apparently based on technology from Marvell and presumably based on the ill-fated “Triton” ThunderX3 and its successor ThunderX4. The second, codenamed “Cypress,” was being designed by a Google team in Israel. Maple was a stopgap in case Cypress didn’t work out, so the story goes. Both Maple and Cypress were shepherded into existence by Uri Frank, who joined Google from Intel in March 2021 to become vice president of server chip design. Frank spent more than two and a half decades at Intel in engineering and management roles, eventually being put in charge of many Core chip designs for PCs.

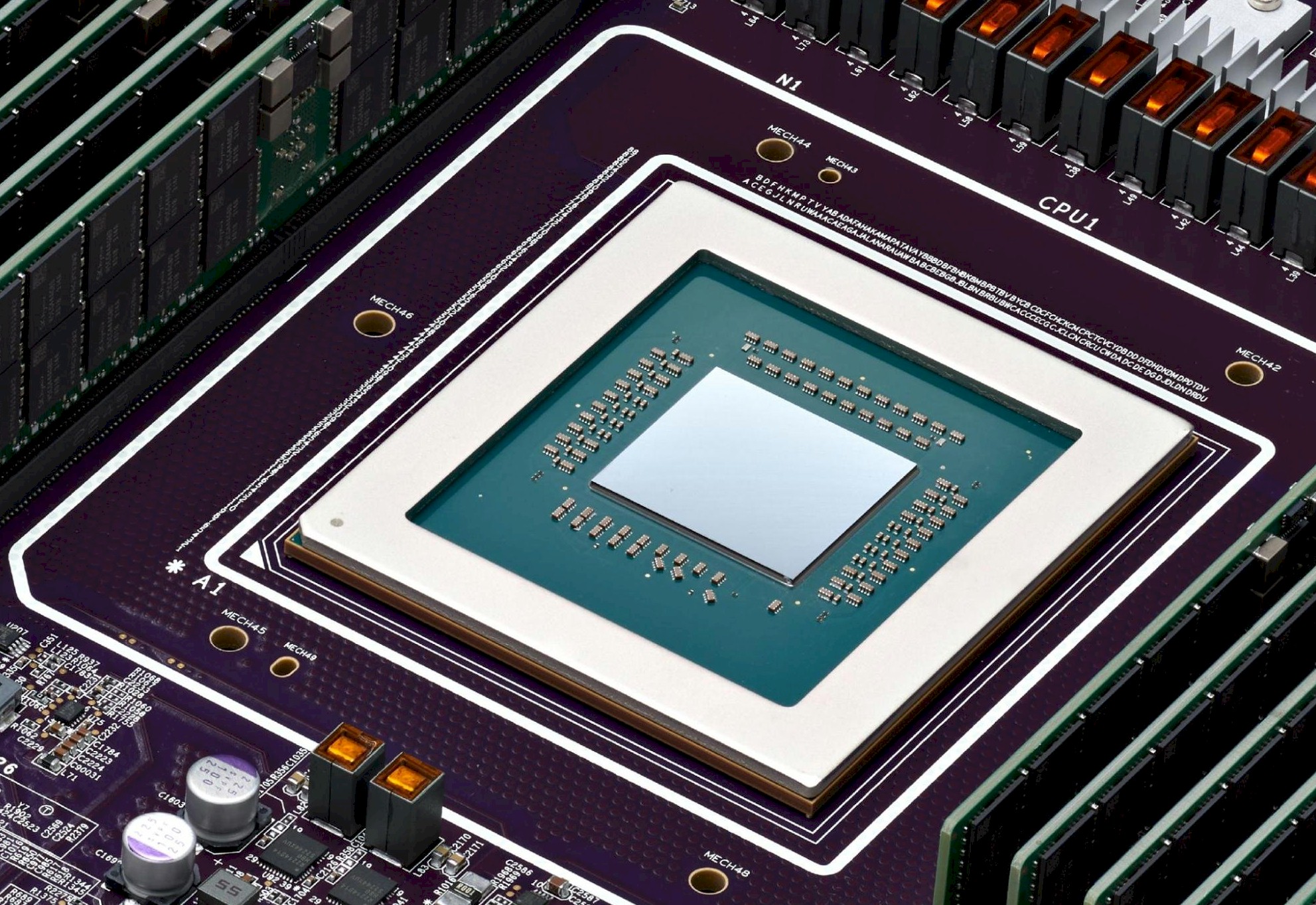

We don’t know for sure, but Axion is almost certainly the Cypress design if it uses Neoverse V2 cores, and we strongly suspect from the image that it is a monolithic chip etched using 5 nanometer manufacturing processes from Taiwan Semiconductor Manufacturing Co.

Thomas Kurian, chief executive officer of Google Cloud, did make some performance claims about the Axion Arm CPU, which he tossed out when he pulled the chip from his sportcoat pocket for a cameo appearance, as shown in the feature image at the top of this story. Kurian said that the Axion chips are already in production – presumably limited – inside of Google, supporting BigTable, Spanner, BigQuery, Blobstore, Pub/Sub, Google Earth Engine, and the YouTube Ads platform, and added that later this year instances would be available for customers to rent raw on Google Cloud.

In a blog posting that echoed his part of the keynote address, Amin Vahdat, general manager of machine learning, systems, and cloud AI at Google, had this to say: “Axion processors combine Google’s silicon expertise with Arm’s highest performing CPU cores to deliver instances with up to 30 percent better performance than the fastest general-purpose Arm-based instances available in the cloud today, up to 50 percent better performance and up to 60 percent better energy efficiency than comparable current-generation X86-based instances.”

There was no quantifying or even qualifying these statements. Presumably we will get more insight into the Axion design and its performance and price/performance benefits when it is rolled out to public consumption later this year. (It is in preview now to select customers.)

Google is no stranger to messing around with CPUs to put pressure on Intel and AMD. Google was one of the founding members of the OpenPower Consortium started by IBM back in 2013, alongside Big Blue, Nvidia, Mellanox, and Tyan. It was designing its own system boards based on Power8 in 2015 and followed that up with more robust Power9 designs in 2016, which were deployed in reasonable volumes for several years. As part of the effort, Google helped IBM rewrite and open up its Power firmware and port the KVM hypervisor to Power.

In July 2022, Google rolled out instances based on Ampere Computing’s Altra line of Arm server CPUs, which had already been put into production by Microsoft, Alibaba, Baidu, Tencent, and Oracle. Four of those five companies were working on their own Arm server designs at the time. For Google, Ampere Computing may turn out to be a second source, or it may have been a testbed. Time will tell.


“People who are really serious about software should make their own hardware.”

― Alan Kay

Seeing how ARM is commonly less interoperable than x86 in terms of standards (esp. wrt the address space for peripherals, and related discovery by the bootloader and OS), I wonder if we could see this enthusiasm for in-house ARM as part of a moat-digging (or moat-deepening) effort by Google and other hyperscalers? ( https://www.nextplatform.com/2023/05/04/missing-the-moat-with-ai/ )

I do like ARM (proven performant since Fugaku and Apple M1), but wonder how straightforward it might be to migrate ARM-workloads from one hyperscaler or cloud to another, as compared to x86 (maybe it’s a non-issue given the proprietary nature of workload management software, and accelerator hardware?).

I think that is an excellent question for Jon Masters, formerly of Red Hat and Nuvia and now of Google. Jon?

Great choice! … looove his hair products: https://johnmastersorganics.fr/ 8^b

Jon Masters with hair products…. now that is funny.

I’m not sure I agree with your assessment of ARM being in any way less interoperable than x86. ARM-architecture chips have been deployed in many, many different markets by many different vendors and work with peripherals from pretty much everyone.

In addition, since cloud vendors actually like to limit the variability between their machines for efficiency and controllability, I don’t think the point is relevant, even if it were true.

“It wasn’t until the advertising business got squeezed and that GenAI started putting pressure on search that Google had a rethink.”

That’s simply incorrect. With the lead time for an SoC being years, you can’t possibly think that the market changes of 2023 were the instigators of such a project.

The advertising business squeeze happened five years ago with the surge in mobile and the rise of AWS as an advertiser. GenAI is just one layer of many changes. I think the changes started happening a long time ago, in datacenter terms, but were realized more recently as this all has come to a head.


The Next Platform is published by Stackhouse Publishing Inc in partnership with the UK’s top technology publication, The Register.

It offers in-depth coverage of high-end computing at large enterprises, supercomputing centers, hyperscale data centers, and public clouds.

All Content Copyright The Next Platform