Chip Aging Becoming Key Factor In Data Center Economics

Chip aging is becoming a much bigger concern inside of data centers, where it can impact server uptime, utilization rates, and the amount of energy needed to drive signals and cool entire server racks.

Aging in chips is the result of both higher logic utilization and increasing transistor density. This is problematic for data centers, in general, but especially for AI chips where digital logic is expected to run at maximum speed. That generates more heat, which becomes harder to dissipate as the number of specialized and general-purpose processing elements per square millimeter of silicon continues to rise. Heat typically gets trapped between the fins of finFETs and gate-all-around FETs, accelerating electromigration and reducing the time it takes for dielectrics to break down. It also can cause warpage, which can rupture the bonds and contacts between different components in an advanced package or on a PCB.

For data centers, that creates a number of challenges:

- Thermal management: This requires a deep understanding of workloads and the resulting transient thermal gradients as processing is load-balanced on-chip, between chips or chiplets, and between servers;

- More data: Data from sensors everywhere, along with larger training sets, all need to be processed faster than in the past to keep up with the flood of data, but all of that needs to happen in the same or smaller footprint without overheating any part of a device, and

- In-circuit monitoring: Sensors can be added into chips to detect variations in heat and data speeds in different paths, but it’s much more difficult to keep track of tens of thousands of these monitors as they collect data from heterogeneous processing elements, each of which can age at different rates depending on process variation, defectivity, varying workloads, and ambient thermal conditions.

“Servers are much more capable today than they were 10 years ago, and the issue is that power hasn’t scaled like it used to,” said Steven Woo, Rambus fellow and distinguished inventor. “Now, if you want to do lots more work in your server, you have to burn more power to do it. Twenty years ago, a server might dissipate a couple hundred watts. But with the latest servers that NVIDIA just announced around Grace Blackwell, the whole rack is 120 kilowatts, and the individual servers are many kilowatts. Just delivering power into those racks is causing changes in the infrastructure in the industry. Now that you have to bring in and dissipate more power in a small space, you get all kinds of interesting things that could happen over time. The heat that’s being dissipated can have effects on the chip, and you have to worry sometimes about thermal cycling where, as the chip is doing a lot of work, maybe part of the chip stops and then it does more work. You get these rapid cycles of dissipating a lot of power, then not, then dissipating a lot of power, then not. That cycling causes local heating and cooling, leading to thermal stresses, and this impacts all chips, including memory.”

As a result, everyone from the data center manager to the chip architect now has to understand how a chip behaves in the field, and how increasingly customized chip and system architectures will function over time. Downtime is costly for a data center, but under-utilization and reduced performance also carry a high price tag. That, in turn, affects how much margin is considered essential, such as extra data paths if some of them are fully or partially closed off by electromigration, and how that margin will impact performance, power, and area/cost over a chip’s projected lifetime, especially in a heterogeneous design with specialized compute elements.

“When it comes to the hyper-scalers and high powered, highly customized, heterogeneous chips for various different workloads, these chips are on 24/7, so consistent uptime is critical,” said Dan Lee, product management director at Cadence. “Since all of these chips are done at the really advanced nodes, with the smaller device sizes, more developers are looking to do aging analysis, and derive the wear and tear so they can see if the chip is going to last a year or five years. At the same time, an important consideration is also thermal — especially when we’re talking about these heterogeneous integrations, and you don’t really get the thermal conductivity that you would in a straightforward, monolithic design. There’s a bit more thought or planning that needs to be a part of this because aging and heating are related. All things being equal, if you’re operating in a very hot environment, you’re going to expect a lower lifespan.”

Still, determining how much shorter that lifespan will be isn’t always a precise calculation. “Data center SoCs that execute mission-critical workloads need to provide scalable visibility, predict problems before they occur, provide deep-dive analyses into problems, and be optimized to increase longevity of investment,” said Padmakumar Karthik, senior technology manager at Arm. “Data center diagnostic patterns are often deployed to measure the health of an SoC post-manufacturing to prevent silent data corruption (SDC) issues. But on-chip sensors provide an additional layer of insights, detecting droops or aging or thermal events on-chip, all of which can cause SDC incidents. For this reason, scalable, customizable sensor frameworks that can monitor and adapt throughout the useful life of the device, enabling continuous design optimization and preventive maintenance, will be increasingly important.”

There are multiple ways to achieve this, but each data center can be very different. In some cases, chips are designed by systems companies for internal use. And in most cases, there is a mix of different hardware and software, not all of which is state-of-the-art. “Many data centers have legacy infrastructure that may not be inherently designed for optimal power efficiency,” noted Noam Brousard, vice president of systems at proteanTecs, in a recent blog. “Upgrading or retrofitting such infrastructure poses challenges in achieving comprehensive power optimization.”

Even within a single rack, stresses can vary greatly from one server to the next, and from one chip to the next even in the same server. “You can imagine when you have a very big chip, toward the edges of the chip it will expand more than in a small chip, and that can add stress,” said Rambus’ Woo. “You have to really be careful about how you cool things, and memory is no different. You have very specific things you worry about with memory, like the ability to retain data, depending on how hot the chip is.”

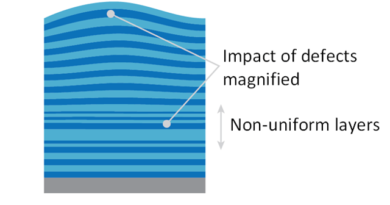

In addition, as chips age, parameters drift. Marc Swinnen, director of product marketing in Ansys’ semiconductor division, said the traditional approach has been to use a library that’s characterized as a brand new chip. “The library is characterized at 1 year, 5 years, 10 years, 15 years, and you can run all your analysis multiple times with these different aged libraries. That sounds good on paper, and that’s what a lot of people do, but the problem is that not all parts of the chip age at the same rate. This is why aging is often associated with activity and temperature. Some parts of the chip are more active and hotter than other parts of the chip, so the aging time runs differently for different parts. This means you want to apply some of the old library to some parts of the chip, and the younger library to other parts of the chip, because if signals run between them you have setup and hold issues. If everything slows down at the same time — or one slows down and the other one doesn’t — you’re going to get mismatches, and that’s the difficulty. At the bottom level, it’s easy. Every gate is assigned its right age. That’s simple. You do an analysis with every gate. But how do you assign the age to every gate? Where do you get that information from? You need a lot of realistic activity, and then predict that over the lifespan and with temperature. That’s the problem. How do you actually construct this aging map? Once you have it, the analysis is not that hard.”

Aging maps are application- and workload-specific. Every chip will age differently depending on the functions it performs.
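To make the setup-and-hold mismatch concrete, here is a minimal sketch of how an aging map might feed a timing check. The power-law slowdown in `aged_delay_ps`, its constants `k` and `n`, the path delays, and the block names are all hypothetical values chosen for illustration. They do not come from any foundry model or from the tools discussed here.

```python
def aged_delay_ps(fresh_delay_ps, age_years, k=0.03, n=0.2):
    """Hypothetical power-law slowdown: delay grows by k * age**n."""
    return fresh_delay_ps * (1.0 + k * age_years ** n)


def setup_slack_ps(period_ps, launch_clk_ps, data_ps, capture_clk_ps, setup_ps=30.0):
    """Data must arrive before the next capture edge minus the setup time."""
    return period_ps + capture_clk_ps - launch_clk_ps - data_ps - setup_ps


def hold_slack_ps(launch_clk_ps, data_ps, capture_clk_ps, hold_ps=20.0):
    """Data must not change until the hold time after the same capture edge."""
    return launch_clk_ps + data_ps - capture_clk_ps - hold_ps


def path_slacks(launch_age, capture_age, period_ps=1000.0):
    """Slacks for one path whose launch side and capture side age separately."""
    launch_clk = aged_delay_ps(200.0, launch_age)    # launch clock branch
    data = aged_delay_ps(700.0, launch_age)          # logic in the launch block
    capture_clk = aged_delay_ps(200.0, capture_age)  # capture clock branch
    return (
        setup_slack_ps(period_ps, launch_clk, data, capture_clk),
        hold_slack_ps(launch_clk, data, capture_clk),
    )


# Hypothetical aging map: effective age in years per block after deployment.
aging_map = {"hot_core": 12.0, "cool_io": 2.0}

uniform_setup, _ = path_slacks(12.0, 12.0)
mixed_setup, _ = path_slacks(aging_map["hot_core"], aging_map["cool_io"])
print(f"setup slack, uniform 12-year library: {uniform_setup:.1f} ps")
print(f"setup slack, mixed ages from the map: {mixed_setup:.1f} ps")
```

In this toy model the mixed-age case has less setup slack than the uniformly aged case, because the capture clock in the cooler block has not slowed down enough to compensate for the slower launch side. Swapping the ages squeezes hold slack instead.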

But aging is just one of many factors that affect data center uptime. “When we look at data center, we look at the whole application first, then whittle it down to what that means for chips and packages,” said Kelly Morgan, senior principal application engineer at Ansys. “From the mechanical reliability lens of the data center operation, we go through thermal cycling, obviously. We’re in a controlled environment. But what does that influence? How does that influence the integrity of the chips as you go through thermal cycles? Typically, we’ll look at things like solder fatigue and other effects.”

Another factor to consider is shipping and handling, which can affect the aging of a chip, package, and board.

“Even before the device is put in place, there are opportunities for vibration,” Morgan said. “You might hit something, which is a bit of a shock. We have customers who are looking at things like drop, shock, and vibration, and they have goals they need to test to. Typically, the standard process is to do a lot of physical testing. Now as you can imagine, that can be pretty challenging. You have to be pretty far along in the design process before you really start to go and test, and if there’s an issue, then you’ve got to go back and retest. Early simulation helps here, especially for those larger-scale events, and that comes down to the chassis, the board, to all the components, including the ICs.”

Fig. 1: Components of complete electronic system analysis. Source: Ansys

Quality control remains a big challenge when it comes to mechanical stresses that can affect aging. Adam Cron, distinguished architect at Synopsys, pointed to a recent Intel white paper, which noted that at the current acceptable defectivity rates, one core fails every two days. To account for this, Cron noted that certain commercial tools support in-system delay testing in a BiST mode. By adding specific IP, any ATPG patterns could be added to that. (Intel’s paper said its solution only applies to stuck-at testing.)

“In very large, millions-of-cores data center-type environments, the implication is that you’d better be ready,” Cron said. “One of the things they were talking about in this paper was in-system scan. Intel was bringing a database of test patterns in, and then applying it in-system after isolating a core. And then, upon a failure, they’d quarantine and move on. But the data centers are apparently running out of that opportunistic time slot to do any of this. We’ve heard some interesting conversations about the fact that people do run a lot of things during certain times. However, other times are cheaper, so all the holes are just getting filled in terms of runtime. Monitors are certainly something to look at, but monitors are looking at systemic degradation. That’s known, if you will. And so as things degrade, Vmin will change, maybe frequency will change. And they’ll be on a pace. They can figure out when to do that. That’s easy enough to figure out. However, if there’s a marginality or some broken component in there, it is not up to the tool to find that. And frankly, the in-system scan wasn’t addressing all components on the die. It was only up to like 80% of stuck-at coverage, which isn’t that much, especially when you’re not looking at all of the pieces inside the die. The point is, there are still opportunities to do better.”

Cron noted that one big systems company suggested a dual-core lockstep mechanism, starting out the data center in dual-core lockstep mode for X number of months. “When it looks like you’ve squeezed the major part of the curve out, in terms of finding these defective components, then unlock them, double your capacity, run like that for a while, and periodically hook some back up again. That means everything is utilized, at least. Of course, some are working at half capacity here and there, but it’s not the whole die. And there are some implications there from a design standpoint, at least for the hardware, but also possibly the operating system, depending on who decides what physical core is used versus what virtual core is used.”
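The lockstep idea can be sketched in a few lines. This is a software-only illustration under stated assumptions: the "cores" are plain Python functions, and the stuck-bit defect in `marginal_core` is invented for the example. Real lockstep comparison happens in hardware, cycle by cycle.

```python
def run_lockstep(core_a, core_b, inputs):
    """Feed identical inputs to both cores and return those where they disagree."""
    return [x for x in inputs if core_a(x) != core_b(x)]


def healthy_core(x):
    """Stand-in workload: any deterministic computation would do."""
    return (x * x + 1) & 0xFFFF


def marginal_core(x):
    """Hypothetical defect: bit 3 of every result is stuck at 1."""
    return healthy_core(x) | 0x8


def triage(pairs, inputs):
    """Split core pairs into those safe to unlock and those to quarantine."""
    unlocked, quarantined = [], []
    for name, (core_a, core_b) in pairs.items():
        target = quarantined if run_lockstep(core_a, core_b, inputs) else unlocked
        target.append(name)
    return unlocked, quarantined


pairs = {
    "pair0": (healthy_core, healthy_core),
    "pair1": (healthy_core, marginal_core),
}
unlocked, quarantined = triage(pairs, range(256))
print("unlock for full capacity:", unlocked)
print("quarantine for in-system test:", quarantined)
```

A mismatch only shows that one of the two cores is wrong, not which one, so in this sketch the whole pair is quarantined for further testing.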

Approaches to measuring aging

Any discussion around aging circuits really boils down to extending the life of the machines in the data center, and not getting caught by surprise when failures occur.

“How do you do that? You have to measure the aging of those machines,” said Neil Hand, director of marketing, IC segment at Siemens EDA. “Right now, if you speak to the CIOs of these big companies with big data centers, they say, ‘We’ve got to get rid of the machines after three years because we can’t risk it going down.’ If you look at embedded analytics capabilities, you can start to embed aging monitors in those devices, you can start to monitor those in real time. It doesn’t look that different than what it does from an automotive perspective. It’s all the same technologies, effectively, but you’re monitoring them. And then you can say, ‘We’re now at 90% of our life for this server.’ We can then just replace that server.”
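As a rough sketch of what "90% of our life" could mean in practice, the example below turns a ring-oscillator-style monitor reading into a consumed-life fraction. The linear mapping from frequency loss to life consumed, the `AgingMonitor` class, and all frequencies are assumptions made for illustration. Commercial embedded analytics use their own models.

```python
from dataclasses import dataclass


@dataclass
class AgingMonitor:
    fresh_mhz: float        # monitor frequency measured at deployment
    end_of_life_mhz: float  # frequency at which margin is assumed exhausted

    def life_consumed(self, measured_mhz: float) -> float:
        """Fraction of the degradation budget used, clamped to [0, 1]."""
        budget = self.fresh_mhz - self.end_of_life_mhz
        used = self.fresh_mhz - measured_mhz
        return min(max(used / budget, 0.0), 1.0)


def servers_to_replace(readings, monitor, threshold=0.9):
    """Names of servers whose monitors report at least `threshold` life consumed."""
    return [
        name
        for name, measured_mhz in readings.items()
        if monitor.life_consumed(measured_mhz) >= threshold
    ]


monitor = AgingMonitor(fresh_mhz=1000.0, end_of_life_mhz=950.0)
readings = {"rack3-srv01": 998.0, "rack3-srv02": 954.0}  # hypothetical telemetry
print(servers_to_replace(readings, monitor))
```

The point of the sketch is the policy, not the model: replacement is triggered by measured wear on each server rather than by a fixed three-year schedule.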

This feeds into corporate goals around sustainability, as well. “It comes down to building the best thing to begin with, then building it with design for manufacturing in mind so that you don’t get waste during manufacturing, achieve better yields, and finally extend the life of products and build them in environmentally-sustainable ways,” Hand said. “If you can extend the data center lifecycle from three years to five years, that’s big. And especially if you start going to these high-performance, application-specific type of clusters, you may not need to change them as often, because if the underlying capabilities aren’t changing, that might drive the cycling of it. In the case of a biological computer, if there’s no new change to the underlying protein folding mechanisms, you might say, ‘We don’t need a new compute platform. This is really good.’”

The longer the product life can be extended, the better. Design for aging is a matter of, first, performing the aging analysis with the foundry models. “Run the simulations and observe the effects,” said Cadence’s Lee. “When you’re doing the simulation, you want to have the right mission profiles, so you come up with an accurate prediction of how your device is going to behave after a certain number of years in deployment. You may want to combine that with thermal analysis, for example, because how that aging is going to behave will depend on what temperature this design is going to be working at. You may think it’s 22 degrees Celsius, but maybe through some thermal analysis you realize it’s actually going to be operating at 35 or 40 degrees most of the time. That may change the outcome of your aging analysis.”
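The sensitivity to the temperature assumption can be illustrated with the Arrhenius relation, a standard first-order model for thermally activated wear-out. This is a sketch only: the 0.7 eV activation energy and the mission profile below are illustrative assumptions, not foundry data, and real aging models include voltage and activity terms as well.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def acceleration_factor(temp_c, assumed_temp_c=22.0, activation_energy_ev=0.7):
    """Arrhenius acceleration at temp_c relative to the assumed temperature."""
    t_actual = temp_c + 273.15
    t_assumed = assumed_temp_c + 273.15
    exponent = (activation_energy_ev / BOLTZMANN_EV_PER_K) * (
        1.0 / t_assumed - 1.0 / t_actual
    )
    return math.exp(exponent)


def equivalent_age_years(deployed_years, mission_profile, **model):
    """Age at the assumed temperature that matches the stress of the profile.

    mission_profile is a list of (fraction_of_time, temperature_c) pairs
    whose fractions must sum to 1.
    """
    total = sum(fraction for fraction, _ in mission_profile)
    if abs(total - 1.0) > 1e-9:
        raise ValueError("mission profile fractions must sum to 1")
    weighted = sum(
        fraction * acceleration_factor(temp_c, **model)
        for fraction, temp_c in mission_profile
    )
    return deployed_years * weighted


assumed_profile = [(1.0, 22.0)]                                # what was planned
realistic_profile = [(0.2, 22.0), (0.5, 35.0), (0.3, 40.0)]    # hypothetical
print(equivalent_age_years(5.0, assumed_profile))
print(equivalent_age_years(5.0, realistic_profile))
```

Under these assumed parameters, five years at the realistic profile stresses the device like a considerably longer period at 22 degrees, which is why the mission profile has to be settled before the aging simulation is run.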

In terms of the associated thermal analysis, this can extend beyond a single device. “It’s also how that heat is moving,” Lee said. “Let’s say you have this integrated design, where you have some power devices alongside some logic, or some other functionality that is lower power. What you may want to understand is, if those bandgaps or power circuits are generating a lot of heat, that may be shifting over into other parts of your design. So when you run your aging analysis, you may assume that you’re running at 25 degrees, whereas the power devices are at 40 or 45 degrees. They’re on the same chip, they’re very close to each other, and you have to understand how much of that heat is moving over to your logic and what that’s going to bring the temperature up to. You want to know that so you can perform the aging analysis based on that higher temperature.”

Another consideration is combining aging analysis and interconnect parasitics, which is especially relevant for advanced nodes due to the parasitics in the interconnect. “They’re dominant when it comes to performance and functionality,” Lee added. “So when thinking about aging, you also have to think about it being an aged device that has to push the electrons through this interconnect. That’s a pretty heavy load. When you’re doing the aging analysis, you probably will have to be doing it with extracted parasitics. You just can’t do it on a pure schematic design. It doesn’t give you enough detail about what’s really happening physically. This may be included in the aging analysis tool. When most people talk about aging, they may not think about the parasitic aspect to it.”

Combating aging, thermal in memory

While standards don’t work in custom silicon, they do work for some standard components in those devices, such as memory. Over the past 10 to 15 years, memory standards have started to address the impact of heat.

“If you start to exceed certain temperature limits, you’ve got to refresh the device more frequently because the charge can leak off the cells more quickly,” said Rambus’ Woo. “So there are temperature-dependent refresh rates. There are other things that can be exacerbated, like the capacitors are getting smaller, they’re holding fewer electrons because there are so many more of them on a chip now, so we’ve seen memories adopt on-die error correction. This on-die error correction is something that is hidden from the outside world. In many cases, you don’t even know an error has occurred and been corrected on the chip. Those kinds of technologies become even more important now because the temperatures can be higher.”
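A minimal sketch of temperature-dependent refresh follows. The numbers are values commonly cited for DDR4-class DRAM (an average refresh interval of 7.8 µs up to 85°C, halved to 3.9 µs in the extended range up to 95°C, and a refresh cycle time of about 350 ns for an 8Gb device). Treat them as assumptions and check the relevant datasheet, since other memory types and densities differ.

```python
NORMAL_TREFI_US = 7.8    # assumed average refresh interval at or below 85 C
EXTENDED_TREFI_US = 3.9  # assumed interval in the extended range, 85 C to 95 C


def refresh_interval_us(temp_c):
    """Average interval between refresh commands for a given case temperature."""
    if temp_c > 95.0:
        raise ValueError("temperature is above the assumed operating range")
    return EXTENDED_TREFI_US if temp_c > 85.0 else NORMAL_TREFI_US


def refresh_overhead(temp_c, trfc_ns=350.0):
    """Fraction of time the device is busy refreshing rather than serving data."""
    return trfc_ns / (refresh_interval_us(temp_c) * 1000.0)


for temp_c in (60.0, 90.0):
    print(f"{temp_c:.0f} C: refresh every {refresh_interval_us(temp_c)} us, "
          f"overhead {refresh_overhead(temp_c):.1%}")
```

In this model, crossing the temperature threshold doubles the share of time spent on refresh, which is bandwidth the server no longer has for useful work.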

There also is growing demand for more telemetry to provide monitoring information. “You just want to know if anything is overheating,” said Woo. “Does something seem like it’s malfunctioning? The data center manager will get regular updates about the status of the major components of the system. A lot of boards now in servers have baseboard management controllers (BMCs), which are little chips that sit on each board and are responsible for, among other things, reporting back the health of that board when a server might have five or six boards. We’re frequently seeing more of these BMC chips.”

Design for aging

While the goal is to be able to guarantee a certain lifetime for the chips in a data center, the challenges for achieving that are expanding. “There’s a growing list of things that can be harmful to devices over their lifetime,” Woo said. “It’s a balance between not adding too much cost, even though you have to increase the reliability and maybe add new features, and all of these things are in play with each other.”

Whether it is liquid cooling or higher levels of RAS ECC in the system, there is no single best answer for every application. In general, the industry is moving toward higher reliability and increasing resilience, but there are many ways to get there and challenges with each of them.

“Just as 15 years ago we didn’t necessarily always think we had to talk about power, now we have to talk about it all the time,” Woo said. “The same thing is going to be true for resilience and reliability. It’s going to be required to become part of the way people think about architectures, and part of that is how the memory system improves its reliability. You can’t really do anything unless you can compute on some data, and you have to make sure that data is reliable. It will touch how memory is stored in a DRAM. It will touch how memory is communicated across links. And it even will touch how processors manipulate data once they get a hold of it in their caches, and in the compute pipelines. Also, one of the key things people will worry about is how much of that susceptibility is brought about by age-related issues, like heating cycles, etc.”

Finally, there are even issues around the quality of the power that comes into a system. “The servers get noise on the power rails, and it’s a balance between how much money you’re willing to pay for the power delivery versus the quality of power,” said Woo. “You have to be tolerant of those kinds of things, too. Power management becomes more challenging, as well as the amount of power that these systems are using today. NVIDIA systems bring 48-volt power into the racks, and there is talk about even higher voltage levels. Those changes in infrastructure can all impact heat, and can age components differently.”