Improving Verification Performance

Without methodology improvements, verification teams would not be able to keep up with the growing complexity and breadth of the tasks assigned to them. Tools alone will not provide the answer.

The magnitude of the verification task continues to outpace the tools, forcing design teams to seek better ways to combine and use the tools that are available. But as verification teams take on more skill sets to fully solve the tasks assigned to them, the list of tools they use continues to grow. And because few companies can afford dedicated verification specialists, verification engineers must keep learning just to stay in place, which makes finding enough skilled workers increasingly difficult.

Problems of this scope require a plethora of approaches, which can be bundled in various manners. Bryan Ramirez, director of solutions management within the Digital Verification Technologies Division at Siemens EDA, defines three such bundles. “First, how do we help them scale their verification? That includes the performance of the engines, but also how we provide more automation so that they can do more with less. Second, is data-driven verification. How can they take advantage of all the data they have and apply things like AI to be smarter in how they address that? The third is about connected verification. How do we tie formal more closely to simulation? How do we tie that more closely to emulation or prototyping? How do we tie that more closely to other adjacencies, like test and implementation?”

The bundling is done slightly differently at Synopsys. Frank Schirrmeister, executive director for strategic programs and systems solutions, groups them in this manner: “There is the base speed of the engines, and that is increasing. The second thing we refer to as ‘smarter verification.’ That’s where you have methodology, and you have the right scope. Solving the right problems at the IP level, that may not transpose to the SoC level or the system level. The third is automation and AI. It is a three-legged stool — making things faster, smarter verification, which is methodology, and then there’s automation on top of that.”

A changing problem scope

In the past, verification teams only had to worry about the functionality of the chip being implemented. Today, that scope has broadened in several ways. First, the chip can no longer be considered in isolation; it has become a chip, package, board, and system problem. Second, other aspects of the chip, such as power, thermal, and reliability, have become increasingly important. And third, system-level considerations, such as safety, security, test, and software, have become relevant in more product sectors.

This is forcing an increasing number of companies to look at the entire problem. “If we think about thermal issues, it involves the IC level, it involves the board level, it involves the chassis,” says Matt Graham, product management group director at Cadence. “It goes well beyond just a verification problem. It is a whole-system problem. That’s why many companies are looking toward digital twins. The industry needs to figure out where the limits are, what it is that we’re designing and ultimately going to build. It needs to be within a defined envelope for power, thermal, cost, and provide the maximum sustainable performance. From a verification point of view, how much can we reliably — in a performant way, and in a feasible way — drag this into the pre-silicon phase so that we know more before we build.”

Many silos still need to be broken down. “The challenges are not just because of the increase in design complexity, but also about the scope of verification that’s being asked of these designs,” says Siemens’ Ramirez. “You look at it from either the increasing needs to verify beyond functionality for things like safety, security and reliability, or the emerging set of needs around DFT verification, constraint verification, and much more. Having design be more power-aware is expanding beyond consumer-grade devices. And then you have software-defined everything that’s forcing customers to think in a completely different way, where software comes first rather than after the fact.”

Verification teams need to evolve. “Verification methodologies have grown up but need to go further to mimic what’s going on in the design process,” says Synopsys’ Schirrmeister. “This includes advances such as chiplets. That is why automation of test generation is the next level. In the functional space, with portable stimulus (PSS), you have a constraint solver that gives you all the variations of tests you could generate. For power you have experts who basically look at the activity, and based on the activity allow you to figure out where your hot spots are, where you need to focus, and where you may have a power bug. All of these are different experts, so it has branched out into different expertise fields and their needs for verification.”
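To make the constraint-solver idea concrete, here is a minimal Python sketch. It is not PSS and not any vendor's solver: it brute-forces every combination of a few hypothetical test parameters (`burst_len`, `mode`, `power_state`) and keeps only those that satisfy a set of hypothetical constraints. A production solver explores the legal space far more efficiently, but its output is the same kind of thing: a set of distinct, legal test variations.

```python
from itertools import product

# Hypothetical test parameters. In a PSS model these would be rand attributes.
DOMAINS = {
    "burst_len": [1, 4, 8, 16],
    "mode": ["read", "write"],
    "power_state": ["on", "retention", "off"],
}

# Hypothetical constraints, each a predicate over one candidate test.
CONSTRAINTS = [
    # The block must be powered to accept traffic.
    lambda t: t["power_state"] != "off",
    # Writes are not allowed while the block is in retention.
    lambda t: not (t["power_state"] == "retention" and t["mode"] == "write"),
    # Long bursts are only legal for reads.
    lambda t: t["mode"] == "read" or t["burst_len"] <= 8,
]


def legal_tests(domains, constraints):
    """Yield every parameter assignment that satisfies all constraints.

    This is exhaustive enumeration, not a real constraint solver; it is only
    practical for very small parameter spaces.
    """
    names = list(domains)
    for values in product(*(domains[n] for n in names)):
        test = dict(zip(names, values))
        if all(check(test) for check in constraints):
            yield test


if __name__ == "__main__":
    tests = list(legal_tests(DOMAINS, CONSTRAINTS))
    print(len(tests))  # prints 11
    for t in tests[:3]:
        print(t)
```

With these assumed domains and constraints, 11 of the 24 raw combinations are legal. Enumeration like this collapses as soon as the parameter space grows, which is why real tools rely on constraint solvers instead.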

As more problems become interconnected, so must design and verification. “Companies have found the need to control the system end-to-end,” says Cadence’s Graham. “This is required to have any kind of repeatability in terms of thermal and power, based on software workloads. They need to own the software. They need to know if the power grid will fail under realistic workloads. Can the software even get into that corner? Can software be written that is performant, and still gives the user all the features they need, but avoids that corner?”

This requires a lot of thought about what ‘maximum’ means. “What is the maximum power scenario, even ignoring the impacts of thermal?” asks Bernie Delay, senior engineering director at Synopsys. “You need automation that can find the maximum stress scenarios, because you’re not going to come up with that by yourself. It’s the verification engineer who ultimately has to do the analysis of what the max power is — which is different than the real scenario power, where you plug in the software load that you also have to calculate.”
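As a toy illustration of automated max-power search, the sketch below uses dynamic programming to find the operation sequence that maximizes total energy over a short window. The power model (`OP_POWER`, `SWITCH_POWER`) and the legality rule are hypothetical; real flows derive power from simulated or emulated activity, and the search space is vastly larger. The point is only that the worst case is found by search rather than by intuition.

```python
# Hypothetical per-cycle power of each operation, in arbitrary units.
OP_POWER = {"idle": 1.0, "mac": 6.0, "load": 4.0, "store": 3.5}

# Hypothetical extra switching power whenever consecutive operations differ.
SWITCH_POWER = 2.5


def legal(prev, nxt):
    """Hypothetical legality rule: no two stores back to back."""
    return not (prev == "store" and nxt == "store")


def max_power_sequence(length):
    """Return (energy, sequence) for the highest-energy legal sequence.

    Dynamic programming over cycles: best[op] holds the best (energy, sequence)
    of the current length that ends in op. Energy is the sum of per-cycle
    power plus an extra switching term for every change of operation.
    """
    best = {op: (p, [op]) for op, p in OP_POWER.items()}
    for _ in range(length - 1):
        nxt = {}
        for op, p in OP_POWER.items():
            candidates = [
                (energy + p + (SWITCH_POWER if prev != op else 0.0), seq + [op])
                for prev, (energy, seq) in best.items()
                if legal(prev, op)
            ]
            nxt[op] = max(candidates, key=lambda c: c[0])
        best = nxt
    return max(best.values(), key=lambda c: c[0])


if __name__ == "__main__":
    energy, seq = max_power_sequence(4)
    print(energy, seq)
```

For a four-cycle window the search reports 27.5 units, reached by alternating `mac` and `load`. A directed test that simply repeated the highest-power operation (`mac`) would score only 24, which is the kind of gap automation is meant to close.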

Thermal compounds the problem. “We are seeing power and thermal problems that only show up after 30 minutes of real-time execution,” says Ramirez. “Running that in emulation would probably take days or weeks. The industry is aware that we need better types of workloads, which can more accurately stress the devices so that we can start exposing those types of problems. This problem is far from solved. On the power side, we have good foundational solutions to be able to measure and analyze power across different engines. That’s a good starting point. But how do we get the right set of stimuli to leverage those foundational technologies? That’s probably where the next step of innovation is.”

Tools available today

EDA companies spend a lot of time and effort attempting to improve the foundational engines, but those engines already are fairly well optimized and are unlikely to see the major gains that other algorithms have achieved by porting to massively parallel architectures. “We cannot rely on CPUs getting faster,” says Graham. “The algorithms and the simulator are optimized, and we’re not going to get 2X, 3X, or 4X just by making them more optimal. The biggest problem for functional verification is we don’t have the same kind of algorithms used in thermodynamic simulation or CFD, where those go on the GPU and see orders of magnitude or more improvement. We just can’t do that. We have a lot of big matrices that are sparse, and that’s the problem. When they’re big and they’re sparse, they respond a little bit to GPUs, but we are not going to see the big numbers like in other areas.”

Emulation is, in fact, a massively parallel engine designed specifically for functional verification, but it is just one tool in the arsenal. “There’s a very good set of engines spanning the spectrum of verification today, starting with virtual prototyping, moving into static, formal, simulation, and then all the different phases of hardware assistance,” says Ramirez. “The key is, how do you use each of those tools? You don’t want to start by jumping into an FPGA prototype, because the turnaround times to debug in order to get the RTL stable would be too great. We are figuring out better ways to move between these different domains of verification, and more broadly semiconductor development, because it’s moving beyond just functional verification.”

There are several examples of this tool interaction. “Formal is being shifted left and used before the test bench has been developed,” says Graham. “Because of that, we see the concept of the formal regression coming to the fore. Emulators are getting faster and certainly seeing capacity expansion, but also an applicability expansion. We recently added four-state emulation, and real number modeling in the box, which is allowing problems that have run out of steam in the simulation space to be moved into emulation.”

EDA companies continually look for ways to improve all of the engines. “You start by bifurcating your engines between the scalable engines, which have lower performance, and smaller engines, where performance is optimized, but can’t go to the full scale,” says Schirrmeister. “This has impacted the product portfolio, where high-end solutions can scale to 30 billion gates. That’s the workhorse for the very big designs. But for designs in the few-billion gate range, the medium-sized designs, we have other solutions that are performance-optimized. It gets faster speed, but it now doesn’t scale to 30 billion gates at that speed.”

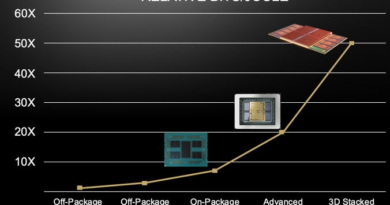

And verification can mimic the design to get speed improvements. “As designs migrate to 3D-ICs, we can use different technologies to do a simulation of that,” says Bradley Geden, director of product marketing at Synopsys. “We can take each chiplet and create a different executable. Each of those chiplets can run on a different machine, and provided you have low latency protocol-level communication, you can get excellent scaling in performance. You run the UCIe over an actual physical interface. You run it over PCIe to connect the distributed engines.”
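The distributed-chiplet idea can be sketched as two partitions that advance in lockstep and exchange one message per cycle. In this hypothetical Python sketch, threads stand in for separate machines and `queue.Queue` objects stand in for the UCIe or PCIe transport; it is not how any commercial simulator is built. Because every cycle requires a round trip over the link, the cost of each exchange is paid once per cycle, which is why Geden stresses low-latency communication.

```python
import queue
import threading


def run_chiplet(name, send_q, recv_q, cycles, results):
    """Simulate one chiplet partition in lockstep with its peer.

    Each cycle the partition first publishes what it drives onto the link,
    then blocks until the peer's value for the same cycle arrives. Sending
    before receiving means neither side can deadlock waiting on the other.
    """
    outbox = 0  # value this chiplet drives onto the die-to-die link
    received = []
    for cycle in range(cycles):
        send_q.put((cycle, outbox))
        peer_cycle, value = recv_q.get()
        if peer_cycle != cycle:
            raise RuntimeError(f"{name}: partitions fell out of lockstep")
        received.append(value)
        outbox = value + 1  # hypothetical behavior: reply with peer value + 1
    results[name] = received


def simulate(cycles):
    """Run two hypothetical chiplet partitions concurrently.

    Threads stand in for separate machines; the two queues stand in for the
    low-latency transport between them.
    """
    a_to_b, b_to_a = queue.Queue(), queue.Queue()
    results = {}
    threads = [
        threading.Thread(target=run_chiplet, args=("A", a_to_b, b_to_a, cycles, results)),
        threading.Thread(target=run_chiplet, args=("B", b_to_a, a_to_b, cycles, results)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    print(simulate(5))
```

`simulate(5)` returns `[0, 1, 2, 3, 4]` for both partitions, since each one replies with its peer's last value plus one.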

But there are physical limits. “From the chiplet, or 3D-IC, we have a fundamental issue in terms of scale,” says Graham. “Systems require terabytes of RAM just to boot the simulation. We need to come up with methodologies and solutions that are going to address that problem. This is not a case of is it fast enough, or is it thorough enough? It’s a case of, ‘We can’t do it at all.’ If we don’t do something, we’re going completely blind into this area where we’re not even sure that these two chiplets can talk to each other, beyond just verifying that the two IPs at the interface are going to talk to each other.”

This leads to two levels of problems. The first is getting the right scenarios. “The ideal stimulus is always a workload that matches what you’re going to actually have,” says Ramirez. “Then you know you’re only testing what actually is going to happen in real life. The problem is, how hard is it to get that real world stimulus, those real workloads? Will you know that before your project, or your chip, is finished? This problem of understanding real workloads becomes more difficult, and it’s different as we start moving to the system level. Most of these companies aren’t just chip companies anymore. They’re system-level companies. That gives them some context of what their workload is within that system, but it also increases the complexity of that workload.”

The second part of the problem is transforming and partitioning those workloads for things other than full system verification. “We are lacking the ability to abstract down or refine a higher-level workload, a system-level workload, into something that’s applicable at the block level,” adds Ramirez. “You don’t want to be running this really big thing if you’re just doing block-level verification. The whole point of block level is to do it fast, efficiently, and to be able to cover the context of how this IP would be used. And sometimes you don’t know the context you have to verify for the different configurations.”
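One naive piece of that refinement can be sketched: filtering a system-level transaction trace down to the traffic that hits one block's address window, then rebasing addresses and timestamps so it can drive a block-level testbench. The trace format (`Txn`), the function name, and the address window are all hypothetical. As Ramirez notes, the hard part is preserving the context around those transactions, which a simple filter like this does not capture.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Txn:
    """One transaction in a hypothetical system-level trace."""
    time_ns: int
    initiator: str
    addr: int
    kind: str  # "rd" or "wr"


def project_to_block(trace, base, size):
    """Keep only the transactions that hit a block's address window.

    Addresses are rebased to block-local offsets and timestamps are shifted so
    the earliest kept transaction starts at 0. Everything outside the window,
    and any context it provided, is simply dropped.
    """
    hits = [t for t in trace if base <= t.addr < base + size]
    if not hits:
        return []
    t0 = min(t.time_ns for t in hits)
    return [Txn(t.time_ns - t0, t.initiator, t.addr - base, t.kind) for t in hits]


if __name__ == "__main__":
    system_trace = [
        Txn(100, "cpu0", 0x4000_0010, "wr"),
        Txn(120, "dma", 0x8000_0000, "rd"),
        Txn(150, "cpu1", 0x4000_0020, "rd"),
    ]
    for txn in project_to_block(system_trace, base=0x4000_0000, size=0x1000):
        print(txn)
```

On the three-transaction example, only the two CPU accesses survive, at block offsets `0x10` and `0x20`, 50 ns apart; the DMA access to another part of the system is discarded.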

Conclusion

Verification engines are slowly improving, but the problem space is expanding faster and into multidisciplinary areas. New verification engines are highly unlikely to appear, which means better use of the existing ones is the only path forward. Progress is being made in migrating problems that were once confined to a specific engine onto higher-performing engines. But while it may be a cliché, the problem will not be solved by working harder, but by working smarter. That will be addressed in a subsequent story.

Related Reading

Verification Tools Straining To Keep Up

Widening gap in the verification flow will require improvements in tools. AI may play a bigger role.