HW and SW Architecture Approaches for Running AI Models

How best to run AI inference models is a topic of much debate as a broad range of systems companies look to add AI to a variety of systems, spurring both hardware innovation and the need to revamp models.

Hardware developers are making progress with AI accelerators and SoCs. But on the model side, questions abound about whether the answer might come from revisiting older, less complicated models, setting up a competition between transformers and recurrent architectures, such as recurrent neural networks (RNNs) and state space models.

“Reducing model complexity can lead to significant gains in performance and energy efficiency. Simplified models require less computation, which naturally reduces power consumption and speeds up processing times,” said Tony Chan Carusone, CTO of Alphawave Semi. “However, optimizing hardware provides another dimension of improvement that shouldn’t be overlooked. Custom hardware tailored to specific models can unlock performance gains and energy savings that generic hardware cannot achieve. With advancements in chiplet technology, the non-recurring engineering (NRE) costs and development time for custom silicon are decreasing. This means we now can design hardware that is optimized for specific applications without prohibitive costs or delays. In essence, both reducing model complexity and tuning hardware are crucial. They are complementary approaches that, when combined, can lead to the most significant overall impact on performance, power efficiency, and cost.”

Making this all work requires a tight integration of hardware and software. “Software developers constantly tune their models to suit the target hardware,” said Carusone. “In collaborative environments, hardware designers work closely with software and machine learning teams to ensure that models are optimized for the target hardware. If a particular model poses challenges in terms of cost or energy efficiency, hardware designers can provide valuable feedback. By highlighting the tradeoffs associated with different models, designers can influence models to better align with hardware capabilities. This collaborative approach can lead to more efficient systems overall, balancing performance with cost and energy consumption.”

In enterprise deployment, both kinds of optimization may be required, depending on the application. “Reducing the model size improves inference efficiency and hence reduces cost, but inference efficiency depends a lot on the hardware/kernel-level optimizations,” observed Akash Srivastava, chief architect for InstructLab and principal research scientist at MIT-IBM. “In fact, going a step deeper, we are seeing model architectures being inspired by the hardware design, like flash attention, for example.”

Traditional thermal models

While those working with AI are trying to reduce model sizes, more classical uses, such as thermal modeling, are also striving for balance between size and accuracy. “In an SoC design, there are many components that make up the whole, and some of these circuits are highly temperature-sensitive,” said Lee Wang, principal product manager at Siemens EDA. “Hence, it is important to model these with higher accuracy to properly capture the electrical impact caused by the temperature and its gradient during circuit operation. Examples of this would be analog circuits, clocking circuits, power management modules, and integrated photonics circuits. Modeling high accuracy everywhere can lead to performance issues for these large designs, so it is necessary to have a solution where the modeling resolution can be adapted to what’s needed in critical regions while keeping a coarser grid elsewhere for performance.”

Sometimes an AI can help find the balance between size and accuracy in a classical model. “For thermal simulation, you need to build a finite element mesh, which basically chops the whole design into tiny little triangles. The problem is that a fine mesh gives you good accuracy, but it takes a lot longer to process because there are more triangles,” said Marc Swinnen, director of product marketing for the Semiconductor Division of Ansys. “A coarser mesh with fewer triangles is much faster to process, but doesn’t give you as good accuracy because you’re glossing over some of the details within each triangle. You want to find the optimal mesh size. In fact, the best way is a variable mesh. You want to have fine accuracy modeling where there’s thermal hotspots, where there are a lot of thermal gradients. For example, out on the edges of the chip, where the temperature stays much the same, you don’t need a fine mesh. There’s not a lot of detail that needs to be captured there, so a coarse mesh would be fine. The problem is where to put the fine mesh. The obvious answer is on the hotspots, but the whole point of the analysis is to find hotspots. If you knew, you could optimally place the fine mesh. That’s where AI comes in. There is now trained AI that understands the silicon, chips, structures, and the conductivity elements that can be used to quickly estimate where the hotspots are.”
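The variable-mesh idea can be illustrated with a minimal one-dimensional sketch. It is not how any commercial thermal solver works: `estimated_temperature` is a hypothetical stand-in for the fast hotspot predictor Swinnen describes, real meshes are built from 2D or 3D elements, and the refinement test (temperature change across a cell) is a deliberately crude assumption.

```python
def estimated_temperature(x):
    """Hypothetical stand-in for a fast hotspot predictor: one hot spot near x = 0.3."""
    return 40.0 + 60.0 / (1.0 + ((x - 0.3) / 0.05) ** 2)


def adaptive_mesh(start, end, coarse_cells, max_delta, max_depth=6):
    """Start from a coarse grid and split any cell whose estimated
    temperature change from one end to the other exceeds max_delta."""

    def refine(lo, hi, depth):
        delta = abs(estimated_temperature(hi) - estimated_temperature(lo))
        if depth >= max_depth or delta <= max_delta:
            return [(lo, hi)]  # gradient is small enough: keep the cell as-is
        mid = (lo + hi) / 2.0
        return refine(lo, mid, depth + 1) + refine(mid, hi, depth + 1)

    width = (end - start) / coarse_cells
    cells = []
    for i in range(coarse_cells):
        cells += refine(start + i * width, start + (i + 1) * width, 0)
    return cells


# Illustrative values: a unit-length strip, 10 coarse cells, 5-degree tolerance.
mesh = adaptive_mesh(0.0, 1.0, coarse_cells=10, max_delta=5.0)
cell_widths = [hi - lo for lo, hi in mesh]
```

In this sketch the cells next to the assumed hotspot end up several times narrower than the untouched cells near the edge, which is the tradeoff the quote describes: fine resolution only where the gradients are steep.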

Neural networks and power reduction

AI research has been happening far longer than the 1980s work on Hopfield Networks that recently was awarded the Nobel Prize. In fact, it can be traced as far back as the beginnings of the computer science and neuroscience fields, as both disciplines tried to understand how the computations behind thoughts and actions were performed.

The direct antecedent of the models in use today is the late Frank Rosenblatt’s 1958 design of the Perceptron[1], one of the first artificial neural networks (ANNs). It’s a simple, one-layer feed-forward structure of weighted inputs, a summation of those inputs and weights, and then an output. Multiplied across many layers and billions of weighted connections, that basic structure still underlies today’s generative AI models, with the once easy-to-interpret “summation” step now an intricate complex of “hidden layers” of connected nodes (artificial neurons) that perform computations that are no longer fully understood.
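The weighted-inputs, summation, output structure is small enough to write out. The following is a minimal sketch of a single-layer perceptron with the classic error-driven update rule; the function names, learning rate, and the choice of logical AND as training data are illustrative assumptions, not details from Rosenblatt's design.

```python
def perceptron_output(inputs, weights, bias):
    """Weighted sum of the inputs followed by a hard threshold."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=10, lr=1.0):
    """Perceptron learning rule: shift each weight by the output error
    times the corresponding input."""
    n_inputs = len(samples[0][0])
    weights, bias = [0.0] * n_inputs, 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - perceptron_output(inputs, weights, bias)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Logical AND is linearly separable, so one layer is enough to learn it.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_SAMPLES)
predictions = [perceptron_output(x, weights, bias) for x, _ in AND_SAMPLES]
```

With these settings the weights stop changing after a handful of passes and `predictions` matches the AND truth table.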

Development in ANNs is often spurred by new models trying to solve the problems presented by previous models. The oldest models were feed-forward networks, which could not remember previous inputs and thus could not process time-series data. In response, researchers developed recurrent neural networks (RNNs). As IBM describes them, “While traditional deep learning networks assume that inputs and outputs are independent of each other, the output of recurrent neural networks depends on the prior elements within the sequence.” In other words, RNNs are networks with loops in them, allowing information to persist.
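A minimal sketch makes the difference concrete. It uses a single scalar unit with made-up weights (`w_in`, `w_rec` are illustrative assumptions): the feed-forward version sees only the current input, while the recurrent version feeds its previous state back in.

```python
import math


def feed_forward(sequence, w_in=0.5):
    """Each output depends only on the current input."""
    return [math.tanh(w_in * x) for x in sequence]


def recurrent(sequence, w_in=0.5, w_rec=0.9):
    """The hidden state h loops back into the next step, so earlier
    inputs keep influencing later outputs."""
    h, outputs = 0.0, []
    for x in sequence:
        h = math.tanh(w_in * x + w_rec * h)
        outputs.append(h)
    return outputs


# Two sequences that differ only in their first element.
seq_a = [1.0, 0.0, 0.0]
seq_b = [0.0, 0.0, 0.0]

ff_last_a, ff_last_b = feed_forward(seq_a)[-1], feed_forward(seq_b)[-1]
rnn_last_a, rnn_last_b = recurrent(seq_a)[-1], recurrent(seq_b)[-1]
```

The feed-forward outputs at the last step are identical for both sequences, while the recurrent outputs differ because the first input is still present in the state.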

But RNNs in turn had their own issue, the “vanishing gradient problem,” which hampers an RNN’s ability to learn long-term dependencies. In 1997, researchers Hochreiter and Schmidhuber addressed the problem by developing long short-term memory networks (LSTMs), which could learn long-term dependencies and are now the predominant form of RNNs.
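The vanishing gradient effect can be seen in a minimal sketch of a scalar RNN with no inputs, assuming an illustrative recurrent weight of 0.9. The sensitivity of the final state to the initial state is a product of one factor per time step, so it shrinks exponentially as the sequence gets longer.

```python
import math


def gradient_through_time(steps, w_rec=0.9, h0=0.0):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w_rec * h_{t-1})."""
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w_rec * h)
        # Chain rule: d tanh(z)/dz = 1 - tanh(z)^2, times the recurrent weight.
        grad *= w_rec * (1.0 - h * h)
    return grad


short_range = gradient_through_time(5)    # still a usable learning signal
long_range = gradient_through_time(50)    # almost nothing left to learn from
```

With a zero starting state each step contributes a factor of exactly 0.9, so the gradient after 50 steps is 0.9 to the 50th power, several orders of magnitude smaller than after 5 steps.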

One of the biggest breakthroughs for the commercialization of AI came in 1986, when new Nobel Laureate Geoffrey Hinton, along with late colleagues David Rumelhart and Ronald J. Williams, published on back-propagation and how it could help neural networks by introducing “hidden layers” in the architecture. Despite reports to the contrary, Hinton did not invent back-propagation, and won his recent prize for precursor AI work on Boltzmann machines.

In essence, the 1986 paper showed how neural nets could learn from and correct their mistakes. As the authors described their work, “The procedure repeatedly adjusts the weights of the connections in the network to minimize a measure of the difference between the actual output vector of the net and the desired output vector. As a result of the weight adjustments, internal ‘hidden’ units, which are not part of the input or output, come to represent important features of the task domain, and the regularities in the task are captured by the interactions of these units.”
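The procedure in that quote can be sketched on the smallest possible network: one input, one hidden tanh unit, and one linear output. The training data, starting weights, and learning rate below are arbitrary assumptions chosen for illustration, and this is not the setup used in the 1986 paper.

```python
import math


def forward(x, w1, w2):
    """One hidden tanh unit followed by a linear output."""
    h = math.tanh(w1 * x)
    return h, w2 * h


def total_loss(samples, w1, w2):
    """Half the squared difference between actual and desired outputs."""
    return sum(0.5 * (forward(x, w1, w2)[1] - y) ** 2 for x, y in samples)


def train(samples, w1=0.1, w2=0.1, lr=0.1, epochs=200):
    """Repeatedly adjust both weights to reduce the output error."""
    for _ in range(epochs):
        for x, y in samples:
            h, out = forward(x, w1, w2)
            d_out = out - y                          # error at the output
            grad_w2 = d_out * h                      # gradient for the output weight
            d_hidden = d_out * w2 * (1.0 - h * h)    # error propagated back to the hidden unit
            grad_w1 = d_hidden * x                   # gradient for the input weight
            w1 -= lr * grad_w1
            w2 -= lr * grad_w2
    return w1, w2


SAMPLES = [(0.5, 0.2), (-1.0, -0.6), (1.5, 0.7)]
loss_before = total_loss(SAMPLES, 0.1, 0.1)
w1, w2 = train(SAMPLES)
loss_after = total_loss(SAMPLES, w1, w2)
```

The key step is `d_hidden`: the output error is passed backward through the output weight and the hidden unit's activation, which is what lets a weight that never touches the output directly still be corrected.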

While RNNs are ideal for working with sequential data, on a separate track of development, in 1989, Yann LeCun and his colleagues created Convolutional Neural Networks (CNNs) to better process images. CNNs underlie much of the current work in computer vision and ADAS image detection. NVIDIA’s blog explains how they can work in complementary ways with RNNs.

A 2017 breakthrough, the now famous “Attention Is All You Need” paper introduced transformer models, with their promise of “a new simple network architecture…based solely on attention mechanisms, dispensing with recurrence and convolutions entirely.” While the paper kicked off the flood of research into large language models (LLMs), its promise of simpler architecture has been belied by the power it takes to run models with trillions of parameters.

That left another opening for a new approach, or in this case, a return to an older approach, the state space model (SSM), which is similar in structure and function to RNNs. The “Attention Is All You Need” moment for SSMs was the December 2023 publication of the Mamba state space model (now at v2) by Carnegie Mellon’s Albert Gu and Princeton’s Tri Dao.

State space models

“SSMs essentially take control theory and apply deep learning to it so that you hold state like in an RNN, that feeds back results,” said Steve Brightfield, chief marketing officer of BrainChip. “Power has always been an issue with AI models, but it really accelerated with LLMs and transformers. Transformers have a squared computational complexity. When you have a longer context window, the computations square. It’s a [quadratic] power increase, and that’s what everybody’s been challenged by. It’s a scaling problem. What now you’re seeing is, even at the data center level, they’re looking at modifying a transformer to be a hybrid model. It will use a transformer for some pieces of it, but it will replace other portions of the transformer with either a state space model or some other type of model approach.”

The advantage of state space models is that they can maintain state, as RNNs do, and scale linearly with the sequence length, solving basic problems with transformers. State space models already are showing up in the marketplace. Besides BrainChip’s own offerings based on SSMs, Gu’s startup Cartesia is now offering what it describes as an “ultra-realistic” generative voice API.
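The scaling difference can be made concrete with a rough sketch. The counting functions below ignore constants, model width, and every implementation detail, and `ssm_scan` is a plain time-invariant scalar recurrence with made-up coefficients, far simpler than Mamba's selective scan. It only illustrates why one approach grows with the square of the sequence length and the other grows linearly.

```python
def attention_pair_count(seq_len):
    """Self-attention scores every token against every token."""
    return seq_len * seq_len


def ssm_step_count(seq_len):
    """A recurrent state update runs once per token."""
    return seq_len


def ssm_scan(inputs, a=0.9, b=0.1, c=1.0):
    """Scalar linear state space recurrence:
    state_t = a * state_{t-1} + b * u_t,  y_t = c * state_t."""
    state, outputs = 0.0, []
    for u in inputs:
        state = a * state + b * u   # fixed-size state carries the history
        outputs.append(c * state)
    return outputs


# Doubling the context window quadruples the attention work
# but only doubles the number of recurrent steps.
attention_growth = attention_pair_count(2048) / attention_pair_count(1024)
ssm_growth = ssm_step_count(2048) / ssm_step_count(1024)
impulse_response = ssm_scan([1.0, 0.0, 0.0])
```

The fixed-size `state` is also the source of the weakness critics point to: everything the model remembers about earlier tokens has to fit into it.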

But just as state space models became popular, a backlash from skeptics began. An NYU Center for Data Science (CDS) paper reported experiments showing that Mamba-style SSMs indeed struggle with state tracking. Researchers from Harvard’s Kempner Institute stated that SSMs “struggle to copy any sequence that does not fit in their [fixed-size] memory…and require 100X more data to learn copying long strings.”

Meanwhile, Dao and colleagues proposed a way to combine transformers and linear RNN architectures into a hybrid model that could perform more efficient inferencing.

“At the moment, it is hard to say how much mainstream adoption we’ll see for SSMs/RNNs against transformers,” said MIT-IBM’s Srivastava. “While some of these architectures are managing to get performance parity on some use cases against transformers, their adoption is limited. This is because, unlike transformers, the developer ecosystem around these new architectures is tiny. LLMs are complex systems and require several components ranging from hardware libraries to software libraries. All these libraries have been developed over the years for only transformers, and until that happens for one of these architectures, their adoption will be limited. Edge, however, is a different domain where transformers have struggled due to their outsized power and resource requirements. I expect to see more energy-efficient architectures there.”

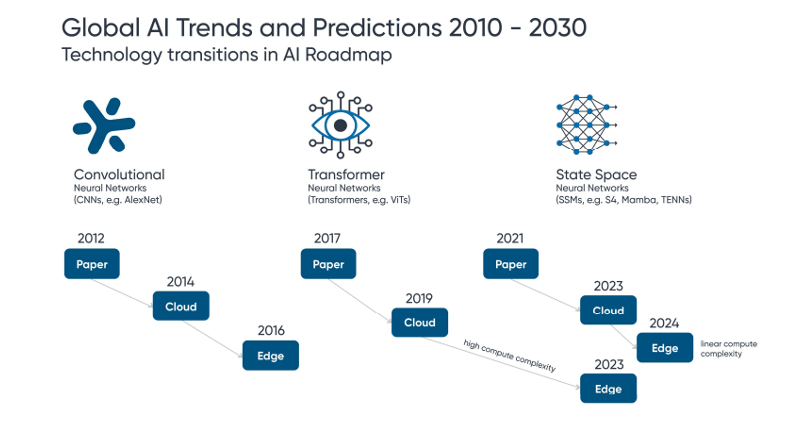

Fig. 1: The trajectory of models to the edge, as projected by BrainChip. Source: BrainChip

Conclusion

Ultimately, whatever approach is chosen, reducing model sizes should benefit all designs. As Prem Theivendran, director of software engineering at Expedera noted, “The NN model is the source. Optimizing it can save significant numbers of operations, cutting down latency and power. Traditionally, data scientists have designed models to achieve high accuracy without much consideration for the underlying hardware. While some NPU architectures perform better than others, reducing model complexity will benefit everyone.”

Even so, the type of hardware used in the future remains up in the air. “There are different ways of constructing accelerators, and some of them are more tuned to different models than others,” said Paul Martin, global field engineering director at Sondrel. “As we’re getting into more complex models, many of the existing accelerators are becoming useless. We’re having to look for alternatives. It comes down to the underlying structure of those accelerators and what kind of models they’re built to support. For example, with simple CNNs, we’re really just talking about an array of MAC units. More sophisticated models, such as generative AI, require a completely different approach. There’s not necessarily a one-size-fits-all accelerator that addresses that problem.”
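Martin's point about CNNs and MAC units can be seen in a minimal sketch of a one-dimensional convolution written as explicit multiply-accumulate (MAC) operations. The function name and the tiny signal and kernel are illustrative assumptions; as in most CNN frameworks, the kernel is applied without flipping.

```python
def conv1d_mac(signal, kernel):
    """Slide the kernel over the signal; every inner-loop step is one MAC."""
    outputs, mac_ops = [], 0
    for i in range(len(signal) - len(kernel) + 1):
        acc = 0.0
        for j, k in enumerate(kernel):
            acc += signal[i + j] * k   # multiply, then accumulate
            mac_ops += 1
        outputs.append(acc)
    return outputs, mac_ops


outputs, mac_ops = conv1d_mac([1.0, 2.0, 3.0, 4.0], [1.0, 0.0, -1.0])
```

Every output position repeats the same small, regular loop over the same kernel, which is why this workload maps well onto a fixed array of MAC units.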

None of this will make designers’ lives easier, at least for now. “A lot of chip designers are being forced to see how many models they can support to provide flexibility, because they don’t really know what models their customers are going to use,” said Brightfield. “The problem is they can’t implement them all optimally, and they don’t necessarily easily move from a server to a single chip, because there’s basic memory I/O and computational problems to doing that. Users will be looking at more model-specific solutions that are much more power efficient to move to the edge. Their fear is that they’re going to lose all that flexibility.”

As to whether “Swiss Army Knife” chips that can run nearly any type of model or custom hardware will predominate, that’s still up for debate. “As much as the world would love to have a Swiss Army Knife NPU,” said Theivendran, “I don’t see a future where a single chip or NPU can run all types of models efficiently. For example, LLM needs are completely different from CNN models regarding memory requirements and data movement within attention blocks. An architecture designed to work specifically well for CNNs would perform poorly for LLMs, if not impossible to deploy. I see a future where general-purpose NPUs are deployed for generic application requirements, supported by highly specialized chips/chiplets/NPUs that cater to specific models/model types.”

Alphawave’s Carusone believes the future likely holds a blend of both approaches. “General-purpose chips, the ‘Swiss Army Knife’ type, will continue to play a role, especially in applications requiring flexibility and rapid deployment. However, as the demand for performance and energy efficiency grows, there will be an increasing need for custom chips optimized for specific models or tasks. Advancements in chiplet technology allow for a middle ground: semi-custom chips that combine standardized modules with specialized components. This modularity enables us to create hardware tailored to specific needs without the full cost and time investment of entirely custom designs.”

Reference:

[1] Sejnowski, Terrence J. The Deep Learning Revolution. Cambridge, Massachusetts: The MIT Press, 2018.
