Are Alphabet’s Days Numbered? – Brownstone Research

Dear Reader,

It’s hard to believe that trillions of dollars of value emerged from the nondescript 1950s building shown below.

This was the headquarters of Fairchild Semiconductor in Palo Alto, CA. Founded in 1957, Fairchild was one of the earliest semiconductor companies. It quickly found a market for its transistors and became a leader in the industry.

That kind of first-mover advantage often leads to dominant market conditions. But that’s not what happened with Fairchild.

At its apex, Fairchild only reached a valuation of about $3.4 billion back in 2001, and it was eventually acquired for $2.4 billion in 2016. It’s amazing it remained independent for that long. But that’s not why the company is so famous.

Fairchild’s place in history isn’t due to its own success. It’s due to the companies that emerged from Fairchild’s executive and engineering ranks during its early decades. The list is remarkable: Intel, AMD, Intersil, Atmel, Cirrus Logic, PMC-Sierra, Synaptics, Xilinx, and many others emerged from Fairchild. They all became multi-billion-dollar companies and went on to achieve much greater success than Fairchild ever did.

Even venture capital firms Kleiner Perkins Caufield & Byers and Sequoia Capital emerged from Fairchild. In total, more than $2 trillion of value was created by those offshoots from Fairchild Semi.

The reality was that the semiconductor industry was simply moving too fast for one company to manage and contain it all. The growth was exponential, and there were too many applications for the new technology that needed to be built quickly.

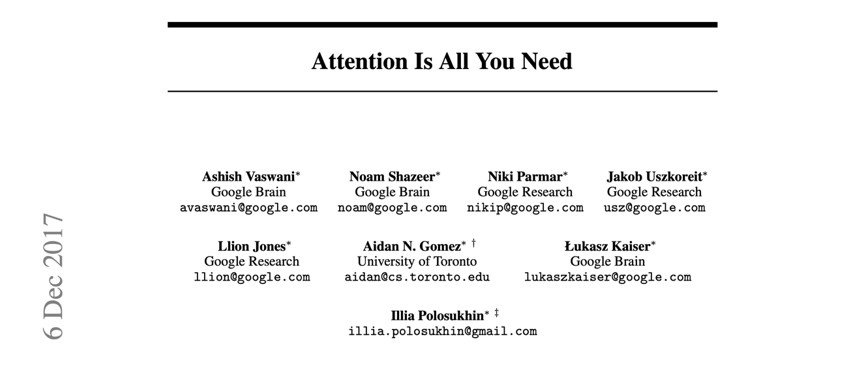

I can’t help but be reminded of Fairchild as we watch remarkable developments in artificial intelligence (AI) unfold at a far faster pace. In particular, it brings to mind a 2017 Google research paper on artificial intelligence titled “Attention Is All You Need.”

This seminal paper laid the foundation for the generative AI we’re seeing today. And just like Fairchild’s alumni, the paper’s authors have quickly moved on to build things faster than Google was able to itself. Here’s what has already happened:

Ashish Vaswani and Niki Parmar left to found Adept AI, which is now worth $1 billion.

Noam Shazeer left to found Character AI, which is now worth $850 million.

Jakob Uszkoreit left Google to found Inceptive Nucleics, a hybrid AI/biotech company now worth $90 million.

Aidan Gomez left to found Cohere AI, which has already raised $370 million and is worth at least $1 billion (my estimate).

Lukasz Kaiser left to be a key contributor to OpenAI, which we now know to be worth about $29 billion.

Illia Polosukhin was one of the earliest to leave; he founded NEAR Protocol, a promising blockchain project that currently has a market cap of around $1.7 billion.

The innovation that sprang from the team behind a single Google research paper is incredible. Just seven individuals are directly tied to tens of billions of dollars of value creation. And Google failed to keep them in-house.

There simply wasn’t room for them to move fast enough to build what they knew needed to be built. And there was no way for them to monetize what they believed they could create inside Google.

This is always the challenge of large, dominant corporations. And their failure to manage and incentivize those capable of this kind of innovation is why Fairchild never became an Intel, an AMD, or an NVIDIA.

And it raises the question… are Alphabet’s (Google’s) days numbered?

A new development in artificial intelligence from the last few days already has the industry buzzing. It’s called Auto-GPT.

Auto-GPT is a new piece of software that plugs into OpenAI’s large language models, GPT-3.5 and GPT-4. That gives it the ability to do everything that these incredible generative AIs can do.

As a reminder, GPT-3.5 is used in ChatGPT. And GPT-4 is simply the next iteration of that large language model.

Both of these AIs can produce content and write software code on demand. And they can hold intelligent conversations with us humans.

Auto-GPT is an AI that can be given instructions and then complete tasks on its own by calling OpenAI’s models, the same ones behind ChatGPT. Its ability to interact with other systems, however, isn’t limited to OpenAI’s models. Auto-GPT can also interact with other online software systems, and it can even use voice synthesizers to make phone calls.

The end result is that Auto-GPT can carry out a series of tasks autonomously… just as the name implies.

Whenever we interface with a generative AI like ChatGPT, we must “prompt” it to do what we want it to. And as we’ve discussed before, the better we structure our prompts, the better the output from the AI will be.

As such, we often have to refine our prompts a few times to figure out how to get the optimum result. There’s still a fair amount of manual work there. And if our work with a generative AI requires a series of tasks, we need to provide a series of individual prompts to get the work done.

Auto-GPT cuts out much of this manual effort. We can give it specific instructions about what we want to achieve, along with a defined outcome, and the AI will figure out how to make it happen using a variety of software tools and technology.
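The goal-driven loop described above can be sketched roughly as follows. This is a minimal Python illustration, not Auto-GPT’s actual code: the `Agent` class, the tool names, and `scripted_planner` are hypothetical, and the planner is a hard-coded stand-in for the calls Auto-GPT makes to GPT-3.5 or GPT-4 to decide its next step.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional, Tuple

# The planner stands in for the language model: given the goal and the history
# of observations so far, it returns (tool_name, argument), or None when done.
Planner = Callable[[str, list], Optional[Tuple[str, str]]]


@dataclass
class Agent:
    goal: str
    tools: dict          # hypothetical tool name -> function(str) -> str
    planner: Planner
    max_steps: int = 10  # hard cap so a confused planner cannot loop forever
    history: list = field(default_factory=list)

    def run(self) -> list:
        for _ in range(self.max_steps):
            step = self.planner(self.goal, self.history)
            if step is None:  # the planner judges the goal complete
                break
            tool_name, argument = step
            result = self.tools[tool_name](argument)
            # Record the observation so the next planning call can build on it.
            self.history.append(f"{tool_name}({argument}) -> {result}")
        return self.history


def scripted_planner(goal: str, history: list) -> Optional[Tuple[str, str]]:
    # Hypothetical fixed plan; a real agent would ask the model what to do next.
    plan = [("search", "waterproof shoes"), ("summarize", "top results")]
    return plan[len(history)] if len(history) < len(plan) else None


agent = Agent(
    goal="Recommend a pair of waterproof shoes",
    tools={
        "search": lambda query: f"3 results for {query}",
        "summarize": lambda text: f"summary of {text}",
    },
    planner=scripted_planner,
)
for line in agent.run():
    print(line)
```

The key design point is the feedback: each tool result goes into `history`, so the next planning call sees what has already happened. The `max_steps` cap is an assumption added here to keep a runaway loop bounded.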

For example, early adopters are already putting Auto-GPT to work on market research.

Users have instructed Auto-GPT to research golf balls, waterproof shoes, headphones, and various other consumer products. They simply tell the AI what they’re looking for and ask it to provide a list of suitable products.

Auto-GPT then does all the research and compiles its own reports on the pros and cons of the best products out there. And it can even make specific recommendations for people.

Other users are having Auto-GPT help them with technical support.

The AI is capable of quickly scanning the files on our computer to determine if we are missing anything critical to certain applications. Then it can go out and download any software we may need to perform whatever task we’re interested in.

And Auto-GPT can also serve as a personal digital assistant for us.

Suppose we need to set up a business dinner at a restaurant that accommodates our customer’s personal preferences. We could tell Auto-GPT to research the top restaurants in our city offering specific menu items. Then the AI could rank each restaurant according to our criteria.

From there, Auto-GPT could call the top restaurant on its list to see if they have availability for the day and time of our meeting. If there is availability, the AI could book our reservation on the spot for us. And if the restaurant can’t accommodate us for our meeting, Auto-GPT could simply move on to the next restaurant on its list… until it has our reservation in place.
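The restaurant example boils down to a rank-then-retry loop. Here is a minimal sketch under stated assumptions: the `Restaurant` fields, the scoring weights, and the `try_booking` callback (standing in for the phone call) are all hypothetical, not anything Auto-GPT exposes.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Restaurant:
    name: str
    rating: float     # hypothetical average review score out of 5
    has_dish: bool    # serves the menu item our customer prefers
    price_level: int  # hypothetical scale: 1 (cheap) to 4 (expensive)


def score(r: Restaurant) -> float:
    # Hypothetical criteria: favor higher ratings, lightly penalize price.
    return r.rating - 0.25 * r.price_level


def book_first_available(
    restaurants: list[Restaurant],
    try_booking: Callable[[Restaurant], bool],
) -> Optional[Restaurant]:
    # Drop places that don't serve the dish, then rank best-first.
    ranked = sorted((r for r in restaurants if r.has_dish), key=score, reverse=True)
    for r in ranked:
        if try_booking(r):  # stand-in for calling and asking for a table
            return r
    return None  # nobody on the list could take the reservation


options = [
    Restaurant("Harbor Grill", 4.6, True, 4),
    Restaurant("Sakura", 4.4, True, 2),
    Restaurant("Corner Diner", 4.8, False, 1),
]
fully_booked = {"Sakura"}  # pretend the top-ranked choice has no tables left
booked = book_first_available(options, lambda r: r.name not in fully_booked)
print(booked.name if booked else "no reservation")  # prints "Harbor Grill"
```

Sakura scores highest (4.4 minus 0.5) but is full, so the loop moves down the list to Harbor Grill, just as the example describes. Corner Diner never gets a call because it lacks the dish.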

So Auto-GPT represents the next step with AI. It’s all about autonomy.

Unlike ChatGPT, this new technology doesn’t need to be prompted every step of the way. Instead, we can give it instructions and a desired outcome, and the AI will figure out how to get it done for us.

This puts us one step closer to something I’ve been predicting will arrive before the end of this year: personalized AI assistants that can automate menial tasks for us. Auto-GPT is the bridge to bringing that technology to life.

I suspect we’re no more than four or five months away from a product like that being announced to the general public.

With all the accelerated development in generative AI and AI software in general, it’s about time Amazon had some big news to announce.

Amazon Web Services (AWS) announced a new initiative called Amazon Bedrock, which will offer access to several popular generative AIs. The list includes Anthropic’s Claude, Stability AI’s Stable Diffusion, and, you guessed it, Amazon’s very own suite of generative AI, which Amazon calls Titan.

As a reminder, AWS is the largest player in the cloud infrastructure space. It competes directly with Google Cloud, Microsoft Azure, Oracle, and other smaller players.

And as we know, both Google and Microsoft have made generative AI available through their cloud service offerings. Google hosts its own generative AI, Bard, and makes its cloud services available to other AI companies. And Microsoft hosts OpenAI’s GPT-3.5, GPT-4, and ChatGPT.

So it’s no surprise that Amazon is anxious to catch up.

And what’s especially interesting is that Amazon will allow its customers to customize Titan to optimize it for their own use cases. This is a really smart move.

When we talk about these large AI language models, most of the development cost comes from the initial training. The larger models have billions of parameters, which must be trained on enormous datasets. This requires intensive computing power, which means millions or tens of millions of dollars of cost.

But once the initial training is done, it’s not nearly as expensive to update the AI by training it on smaller, more focused datasets. And that’s exactly what Amazon is proposing here.
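To put rough numbers on the gap between initial training and later customization, a widely used rule of thumb estimates the compute needed to train a dense transformer at about 6 × (parameters) × (training tokens) floating-point operations. The sketch below applies it to a hypothetical 10-billion-parameter model. The model size and both dataset sizes are illustrative assumptions, not figures for Titan or any other real model, and the sketch assumes every parameter is updated during fine-tuning.

```python
def training_flops(params: float, tokens: float) -> float:
    # Rule of thumb for dense transformers: ~6 FLOPs per parameter per token
    # (roughly 2 for the forward pass and 4 for the backward pass).
    return 6 * params * tokens


PARAMS = 10e9            # hypothetical 10-billion-parameter model
PRETRAIN_TOKENS = 300e9  # hypothetical initial training corpus (tokens)
FINETUNE_TOKENS = 100e6  # hypothetical customer-specific dataset (tokens)

pretrain = training_flops(PARAMS, PRETRAIN_TOKENS)
finetune = training_flops(PARAMS, FINETUNE_TOKENS)

print(f"initial training: {pretrain:.1e} FLOPs")  # 1.8e+22
print(f"fine-tuning:      {finetune:.1e} FLOPs")  # 6.0e+18
print(f"ratio: about {pretrain / finetune:,.0f} to 1")  # about 3,000 to 1
```

Because the parameter count cancels out, the ratio is simply the ratio of token counts. That is the intuition behind why customizing an already-trained model on a small, focused dataset costs so much less than training one from scratch.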

Anyone using AWS can take Titan and train it further on a custom dataset. This can be done relatively cheaply, and it will result in a generative AI that’s highly targeted for specific applications. It will also generate additional revenue for Amazon Web Services, which is why the offering is so smart.

I can’t emphasize enough how big this will be. In a world where these highly functional AI models are rapidly becoming pervasive, the ability to customize one’s own AI will become more and more important.

And that’s only going to make AWS even more “sticky.” Once an entity is running its own custom AI based on Amazon’s Titan, the chances of it moving to Google Cloud or Microsoft Azure are slim. So this is a great competitive move by Amazon.

We had a look at Stability AI’s new large language model yesterday. But that’s not the only thing the AI startup had cooking.

Stability AI just released its next generation of Stable Diffusion. It’s called Stable Diffusion XL (SDXL).

If we remember, Stable Diffusion is the text-to-image AI that put Stability AI on the map. It has about 900 million parameters.

Well, Stable Diffusion XL is much more sophisticated and capable of ridiculous levels of photorealism. It has 2.3 billion parameters. And the improvement is very clear. Check this out:

Source: Stable Diffusion

Here we can see a woman standing out in the front yard with a house in the background. Some of the lights in the windows are on… some aren’t.

And it must be a little windy outside: look at how strands of her hair are waving slightly.

It looks like a professional photograph. I doubt many would ever guess that it was created entirely by an AI, or that the person doesn’t even exist.

Stability AI is making the new and improved version of Stable Diffusion available through a software platform called DreamStudio. It’s designed to help people generate art and images using Stable Diffusion XL.

I highly recommend giving this a try through DreamStudio. It’s in beta right now, which means it’s going to get even better in the coming weeks. It’s a lot of fun to experiment with the tech. I’ve used it to create some really interesting images myself.

Regards,

Jeff Brown

Editor, The Bleeding Edge

The Bleeding Edge is the only free newsletter that delivers daily insights and information from the high-tech world as well as topics and trends relevant to investments.

Protected by copyright laws of the United States and international treaties. This website may only be used pursuant to the subscription agreement and any reproduction, copying, or redistribution (electronic or otherwise, including on the World Wide Web), in whole or in part, is strictly prohibited without the express written permission of Brownstone Research.