AI Is Reshaping Chip Design. But Where Will It End? – Forbes

Using AI reduces design costs, improves yields and performance, and shortens time-to-market for better chips. Synopsys, Cadence Design Systems, and many hyperscal chip designers are now adopting generative AI capabilities to facilitate chip design. Think of this as the second generation of AI for EDA. Where might this lead?

Every chip is designed with a slew of design and test applications, usually from EDA vendors like Synopsys and Cadence Designs. A couple of years ago, Synopsys took the lead in applying AI with a bold move called DSO.ai to help chip design teams evaluate “floorplans”, evaluating some 1090,000 potential design alternatives, each of which could produce chips of distinct power, performance and costs. DSO.ai and its primary competitor, Cadence Cerebrus, can save a lot of engineering time, taking the back-end process from months to weeks, from a full team to just one or two talented engineers. And each company says hundreds of clients use their (reinforcement learning) AI with quantified benefits. But wait! Now there is more!

Recently, the EDA market began to evolve to take advantage of generative AI, applying the next wave of AI to improve the workflow. The industry has already engaged GOFAI (good old-fashioned AI) in everything from physical layout to design verification to test and manufacturing. Many vendors call this generative AI, and it is “generative,” but it is not based on Large Language Models, which opens new avenues to assist engineering teams. LLM’s will not replace existing EDA AI.

Now, as ChatGPT taught the world over the last 12 months, a new kid in town called LLMs can sing and dance like no software before. So, how can large language models and chat interfaces help chip designers?

A great example is the new CoPilot from Synopsys. Launched last month, Synopsis.ai Copilot is the industry’s first GenAI capability for chip design. The product of indepth collaboration with Microsoft Azure, Synopsys.ai Copilot brings the OpenAI generative AI services on Azure to the design process for semiconductors. Synopsys.ai cloud services are hosted exclusively on Azure, and we would not be surprised to learn that the two companies collaborated on the recent Arm Cobalt and Maia AI accelerator silicon products.

The new Synopsys Copilot results from a strategic collaboration with Microsoft to integrate Azure OpenAI Service that brings the power of GenAI into one of the most complex engineering challenges – the design process for semiconductors.

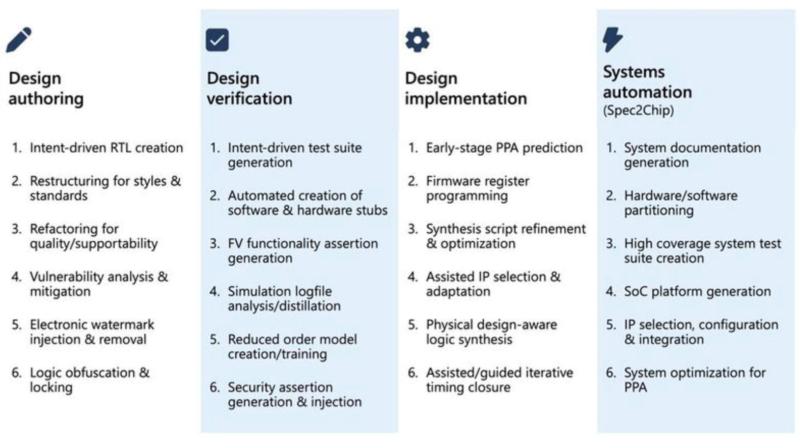

Synopsys is applying generative AI across its stack of EDA tools, accessible on Microsoft Azure on … [+]

“Our history with Synopsys is built on a shared vision for accelerating semiconductor innovation through Cloud and AI,” said Corey Sanders, corporate vice president Microsoft. “Microsoft’s engineering teams worked closely with Synopsys to bring the transformational power of Generative AI to EDA, which will empower semiconductor design engineers using the Synopsys.ai Copilot with the best AI infrastructure, models, and toolchain built on Microsoft Azure.”

The span of AI enabled collaborative projects underwy at Microsoft, supported by Synopsys.

From the Synopsys website, we learn that this far-reaching collaboration is designed to deliver:

Cadence has been building out and expanding it’s generative AI portfolio over the last year and recently announced it has been collaborating with Renasas to build generative AI to improve semiconductor quality and design team productivity. “Ensuring alignment between specification and design is critical, and the cost of verification has increased with the complexity of design functions,” said Shinichi Yoshioka, Senior Vice President and CTO, Renesas. “Renesas and Cadence have collaborated to develop a novel approach to address this challenge by leveraging generative AI’s LLM capabilities, which significantly reduce the time from specification to final design by efficiently controlling design quality.” Renesas is also an early adopter of Cadence Cerebrus for chip implementation, Verisium AI-driven verification, and the Cadence Joint Data and AI (JedAI) Platform which underpins the entire design process with a common database of designs and rules.

The Cadence portfolio of generative AI applications.

Some innovations for applying AI to the design process come from hyper-scalers and hardware designers, like Google and Nvidia. While tools from EDA vendors such as Synopsys and Cadence are great, they do not fully embody the experiences and expertise a chip team has developed. Nvidia has built a proprietary LLM called ChipNeMo, from emails, problem tickets, and other data to help engineers without having to call or email more senior and experienced engineers for help. “It turns out our senior designers spend a lot of time answering questions from junior designers,” said Bill Dally, Nvidia’s head of research. “If the first thing the junior designer can do is to go to ChipNeMo and say, ‘What does this signal coming out of the memory unit do?’—and if they get a possible answer that saves the senior designer’s time, the tool is well worth it.”

Nvidia and AWS recently announced they are building a new supercomputer, Project Ceiba, made with 16,384 GH200 Arm-GPU Superchips. Ceiba will be the world’s fastest GPU-powered AI supercomputer and will be used exclusively by Nvidia engineering teams to create new models and to design and test new GPUs. Clearly, Nvidia believes AI and its GPUs are instrumental in accelerating the Nvidia chip roadmap, which the company announced earlier this year.

We believe the end-state of this progression of various AI models for EDA will produce faster, lower-cost chips in only a few months after the logic design is complete. That latter part of the workflow will also compress, as copilot services help leverage previous innovations and expertise. This revolution will dramatically change and reduce the chip design workforce, which is already short some 10,000 engineers globally. Jobs will increasingly focus on the imaginative part of the work, creating new design logic at higher salaries and far less on tedious, labor-intensive tasks.

We are already seeing this evolution materialize. Nvidia has recently announced it will double the pace at which it will develop and release new silicon, much to the dismay of its competitors. This doesn’t come by doubling the R&D spend, it comes from relying on AI to shorten the design cycle. We note that the company has built in-house supercomputers, now with AWS hosting services, not for customer use but for internal engineering. The system such as Selene and Helios give NVIDIA a competitive edge and are applied to both LLM research and chip design.

And Cadence Design and Synopsys are more than happy to help.

Disclosures: This article expresses the opinions of the author and is not to be taken as advice to purchase from or invest in the companies mentioned. My firm, Cambrian-AI Research, is fortunate to have many semiconductor firms as our clients, including BrainChip, Cadence, Cerebras Systems, Esperanto, IBM, Intel, NVIDIA, Qualcomm, Graphcore, SImA.ai, Synopsys, Tenstorrent, Ventana Microsystems, and scores of investors. We have no investment positions in any of the companies mentioned in this article. For more information, please visit our website at https://cambrian-AI.com.

One Community. Many Voices. Create a free account to share your thoughts.

Our community is about connecting people through open and thoughtful conversations. We want our readers to share their views and exchange ideas and facts in a safe space.

In order to do so, please follow the posting rules in our site’s Terms of Service. We’ve summarized some of those key rules below. Simply put, keep it civil.

Your post will be rejected if we notice that it seems to contain:

User accounts will be blocked if we notice or believe that users are engaged in:

So, how can you be a power user?

Thanks for reading our community guidelines. Please read the full list of posting rules found in our site’s Terms of Service.