Nvidia R&D Chief on How AI is Improving Chip Design – HPCwire

Since 1987 – Covering the Fastest Computers in the World and the People Who Run Them

April 18, 2022

Getting a glimpse into Nvidia’s R&D has become a regular feature of the spring GTC conference, with Bill Dally, chief scientist and senior vice president of research, providing an overview of Nvidia’s R&D organization and a few details on current priorities. This year, Dally focused mostly on AI tools that Nvidia is both developing and using in-house to improve its own products – a neat reverse sales pitch, if you will. Nvidia has, for example, begun using AI to improve and speed up GPU design.

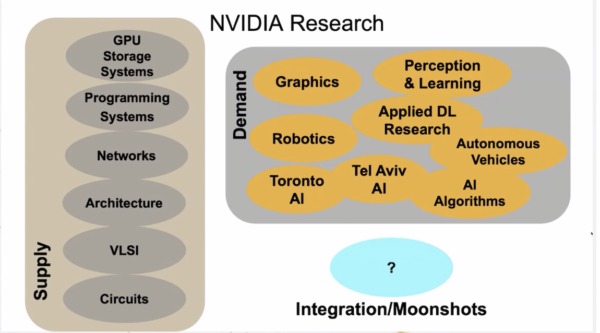

“We’re a group of about 300 people that tries to look ahead of where we are with products at Nvidia,” described Dally in his talk this year. “We’re sort of the high beams trying to illuminate things in the far distance. We’re loosely organized into two halves. The supply half delivers technology that supplies GPUs. It makes GPUs themselves better, ranging from circuits, to VLSI design methodologies, architecture, networks, programming systems, and storage systems that go into GPUs and GPU systems.”

“The demand side of Nvidia research tries to drive demand for Nvidia products by developing software systems and techniques that need GPUs to run well. We have three different graphics research groups, because we’re constantly pushing the state of the art in computer graphics. We have five different AI groups, because using GPUs to run AI is currently a huge thing and getting bigger. We also have groups doing robotics and autonomous vehicles. And we have a number of geographically oriented labs like our Toronto and Tel Aviv AI labs,” he said.

Occasionally, Nvidia launches a Moonshot effort pulling from several groups – one of these, for example, produced Nvidia’s real-time ray tracing technology.

As always, there was overlap with Dally’s prior-year talk – but there was also new information. The size of the group has certainly grown from around 175 in 2019. Not surprisingly, efforts supporting autonomous driving systems and robotics have intensified. Roughly a year ago, Nvidia recruited Marco Pavone from Stanford University to lead its new autonomous vehicle research group, said Dally. He didn’t say much about CPU design efforts, which are no doubt also intensifying.

Presented here are small portions of Dally’s comments (lightly edited) on Nvidia’s growing use of AI in designing chips, along with a few supporting slides.

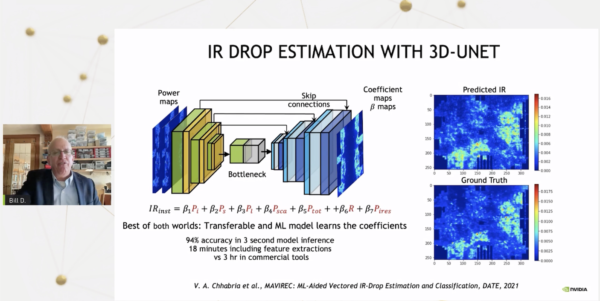

1 Mapping Voltage Drop

“It’s natural as an expert in AI that we would want to take that AI and use it to design better chips. We do this in a couple of different ways. The first and most obvious way is we can take existing computer-aided design tools that we have [and incorporate AI]. For example, we have one that takes a map of where power is used in our GPUs, and predicts how far the voltage grid drops – what’s called IR drop for current times resistance drop. Running this on a conventional CAD tool takes three hours,” noted Dally.
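To make "current times resistance" concrete, here is a minimal sketch of IR drop along a single power rail. It assumes a hypothetical one-dimensional rail fed from one end, with equal resistance per segment and one current tap per node. Real power grids are two-dimensional meshes solved as much larger systems, and the function name and all numbers below are illustrative, not taken from Dally's talk.

```python
def ir_drop_along_rail(vdd, segment_resistance, tap_currents):
    """Return the voltage at each tap of a rail fed from one end.

    Segment i carries the current of every tap at or beyond position i,
    so the drop across it is (sum of those currents) * segment_resistance.
    """
    voltages = []
    v = vdd
    for i in range(len(tap_currents)):
        downstream_current = sum(tap_currents[i:])
        v -= downstream_current * segment_resistance  # V = I * R per segment
        voltages.append(v)
    return voltages


# Hypothetical numbers: 1.0 V supply, 0.01 ohm per segment, four taps (amps).
voltages = ir_drop_along_rail(1.0, 0.01, [2.0, 1.0, 3.0, 2.0])
worst_drop = 1.0 - min(voltages)
print(voltages, worst_drop)
```

The tap farthest from the supply sees the largest drop, which is the kind of hotspot a power-grid analysis is meant to find.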

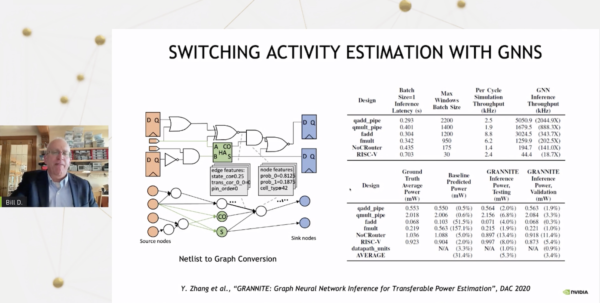

“Because it’s an iterative process, that becomes very problematic for us. What we’d like to do instead is train an AI model to take the same data; we do this over a bunch of designs, and then we can basically feed in the power map. The [resulting] inference time is just three seconds. Of course, it’s 18 minutes if you include the time for feature extraction. And we can get very quick results. A similar thing: in this case, rather than using a convolutional neural network, we use a graph neural network, and we do this to estimate how often different nodes in the circuit switch, and this actually drives the power input to the previous example. And again, we’re able to get very accurate power estimations in a tiny fraction of the time conventional tools take,” said Dally.
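The figures Dally quotes imply two quite different speedups, depending on whether feature extraction is counted. The short calculation below works them out, reading the 18 minutes as the total time including feature extraction, as the quote suggests.

```python
conventional_s = 3 * 60 * 60   # three hours on the conventional CAD tool
inference_s = 3                # model inference alone
with_features_s = 18 * 60      # inference plus feature extraction

speedup_inference = conventional_s / inference_s
speedup_end_to_end = conventional_s / with_features_s

print(f"inference only: {speedup_inference:.0f}x")            # inference only: 3600x
print(f"with feature extraction: {speedup_end_to_end:.0f}x")  # with feature extraction: 10x
```

Even the more conservative 10x figure matters in an iterative flow, since every design iteration pays the analysis cost again.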

2 Predicting Parasitics

“One that I particularly like – having spent a fair amount of time a number of years ago as a circuit designer – is predicting parasitics with graph neural networks. It used to be that circuit design was a very iterative process where you would draw a schematic, much like this picture on the left here with the two transistors. But you wouldn’t know how it would perform until after a layout designer took that schematic and did the layout, extracted the parasitics, and only then could you run the circuit simulations and find out you’re not meeting some specifications,” noted Dally.

“You’d go back and modify your schematic [and go through] the layout designer again, a very long, iterative, and human-labor-intensive process. Now what we can do is train neural networks to predict what the parasitics are going to be without having to do layout. So, the circuit designer can iterate very quickly without having that manual step of the layout in the loop. And the plot here shows we get very accurate predictions of these parasitics compared to the ground truth.”
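For readers unfamiliar with graph neural networks, the sketch below shows one round of mean-aggregation message passing, the basic building block of such a model, on a tiny schematic graph. It is not Nvidia's model: the graph, the feature choices, and the readout weights are all hypothetical, and the weights are untrained placeholders, so the resulting number only illustrates the data flow.

```python
def mean_aggregate(features, neighbors):
    """One message-passing round: each node averages its own feature
    vector with the feature vectors of its neighbors."""
    updated = {}
    for node, own in features.items():
        group = [own] + [features[n] for n in neighbors[node]]
        updated[node] = [sum(column) / len(group) for column in zip(*group)]
    return updated


def readout(embedding, weights, bias):
    """Linear readout; in a trained model these weights would be learned."""
    return sum(w * x for w, x in zip(weights, embedding)) + bias


# Hypothetical schematic graph: two transistors (m1, m2) sharing a net (out).
# Feature vector per node: [device width in um, number of connections].
features = {"m1": [1.0, 1.0], "m2": [2.0, 1.0], "out": [0.0, 2.0]}
neighbors = {"m1": ["out"], "m2": ["out"], "out": ["m1", "m2"]}

embeddings = mean_aggregate(features, neighbors)
# Placeholder, untrained weights: the value below is illustrative only.
predicted_cap = readout(embeddings["out"], weights=[0.5, 0.25], bias=0.1)
print(embeddings["out"], predicted_cap)
```

After the round, the net's embedding reflects the devices attached to it, which is why a graph model can estimate a net's parasitics from the schematic alone, before any layout exists.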

3 Place and Routing Challenges

“We can also predict routing congestion; this is critical in the layout of our chips. The normal process is we would have to take a net list, run through the place and route process, which can be quite time consuming often taking days. And only then we would get the actual congestion, finding out that our initial placement is not adequate. We need to refactor it and place the macros differently to avoid these red areas (slide below), which is where there’s too many wires trying to go through a given area, sort of a traffic jam for bits. What we can do instead now is without having to run the place and route, we can take these net lists and using a graph neural network basically predict where the congestion is going to be and get fairly accurate. It’s not perfect, but it shows the areas where there are concerns, we can then act on that and do these iterations very quickly without the need to do a full place and route,” he said.
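The "traffic jam for bits" can be illustrated without any machine learning. The sketch below is a simple bounding-box wire-density heuristic, swapped in here for the graph neural network Dally describes: it spreads each net's estimated wire demand over the grid cells its pins span and flags cells whose demand exceeds a capacity. The grid, nets, and capacity are hypothetical.

```python
def congestion_map(nets, grid_w, grid_h):
    """Spread each net's wire demand uniformly over its pin bounding box."""
    demand = [[0.0] * grid_w for _ in range(grid_h)]
    for pins in nets:
        xs = [x for x, _ in pins]
        ys = [y for _, y in pins]
        x0, x1, y0, y1 = min(xs), max(xs), min(ys), max(ys)
        cells = (x1 - x0 + 1) * (y1 - y0 + 1)
        # Half-perimeter wirelength approximates the wire the net needs.
        wire = (x1 - x0) + (y1 - y0)
        for y in range(y0, y1 + 1):
            for x in range(x0, x1 + 1):
                demand[y][x] += wire / cells
    return demand


def hotspots(demand, capacity):
    """Return (x, y) cells whose demand exceeds the routing capacity."""
    return [(x, y)
            for y, row in enumerate(demand)
            for x, d in enumerate(row)
            if d > capacity]


# Hypothetical 4x4 grid; each net is a list of (x, y) pin locations.
nets = [
    [(0, 0), (3, 3)],
    [(1, 1), (2, 2)],
    [(1, 1), (2, 1)],
    [(1, 2), (2, 2)],
]
demand = congestion_map(nets, grid_w=4, grid_h=4)
red_cells = hotspots(demand, capacity=1.0)
print(red_cells)  # [(1, 1), (2, 1), (1, 2), (2, 2)]
```

Here three nets overlap in the center of the grid, so those four cells are flagged, the equivalent of the red areas on the slide. A learned predictor aims to give this kind of map with much better accuracy, still without running a full place and route.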

4 Automating Standard Cell Migration

“Now those [approaches] are all sort of using AI to critique a design that’s been done by humans. What’s even more exciting is using AI to actually do the design. I’ll give you two examples of that. The first is a system we have called NVCell, which uses a combination of simulated annealing and reinforcement learning to basically design our standard cell library. So each time we get a new technology, say we’re moving from a seven nanometer technology to a five nanometer technology, we have a library of cells. A cell is something like an AND gate, an OR gate, a full adder. We’ve got actually many thousands of these cells that have to be redesigned in the new technology with a very complex set of design rules,” said Dally.

“We basically do this using reinforcement learning to place the transistors. But then more importantly, after they’re placed, there are usually a bunch of design rule errors, and it goes through almost like a video game. In fact, this is what reinforcement learning is good at. One of the great examples is using reinforcement learning for Atari video games. So this is like an Atari video game, but it’s a video game for fixing design rule errors in a standard cell. By going through and fixing these design rule errors with reinforcement learning, we’re able to basically complete the design of our standard cells. What you see (slide) is that 92 percent of the cell library was able to be done by this tool with no design rule or electrical rule errors. And 12 percent of them are smaller than the human-designed cells, and in general, over the cell complexity, [this tool] does as well or better than the human-designed cells,” he said.

“This does two things for us. One is it’s a huge labor savings. A group on the order of 10 people will take the better part of a year to port a new technology library. Now we can do it with a couple of GPUs running for a few days. Then the humans can work on those 8 percent of the cells that didn’t get done automatically. And in many cases, we wind up with a better design as well. So it’s labor savings and better than human design.”
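Dally names simulated annealing as one of NVCell's two ingredients. The sketch below shows that technique on a toy problem: ordering devices in a single row to minimize the total distance between connected pairs. It is a generic annealer under stated assumptions, not NVCell itself; the cost function, cooling schedule, device names, and netlist are hypothetical, and the reinforcement learning step that fixes design rule errors is not modeled.

```python
import math
import random


def wirelength(order, nets):
    """Total distance between connected devices in a one-row placement."""
    position = {name: i for i, name in enumerate(order)}
    return sum(abs(position[a] - position[b]) for a, b in nets)


def anneal(order, nets, steps=2000, t_start=2.0, t_end=0.01, seed=0):
    """Swap-based simulated annealing; returns the best order seen and its cost."""
    rng = random.Random(seed)
    current = list(order)
    cost = wirelength(current, nets)
    best, best_cost = list(current), cost
    for step in range(steps):
        # Geometric cooling from t_start down to t_end.
        t = t_start * (t_end / t_start) ** (step / (steps - 1))
        i, j = rng.sample(range(len(current)), 2)
        current[i], current[j] = current[j], current[i]
        new_cost = wirelength(current, nets)
        delta = new_cost - cost
        # Always accept improvements; accept worse moves with
        # probability exp(-delta / t), which shrinks as t cools.
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            cost = new_cost
            if cost < best_cost:
                best, best_cost = list(current), cost
        else:
            current[i], current[j] = current[j], current[i]  # undo the swap
    return best, best_cost


# Hypothetical devices and two-pin connections between them.
devices = ["a", "b", "c", "d", "e", "f"]
nets = [("a", "d"), ("d", "b"), ("b", "e"), ("e", "c"), ("c", "f")]

initial_cost = wirelength(devices, nets)
best_order, best_cost = anneal(devices, nets)
print(initial_cost, best_order, best_cost)
```

Accepting some worse moves early on lets the search escape poor local arrangements, which is the reason to prefer annealing over a purely greedy swap search for placement problems.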

There was a good deal more to Dally’s talk, all of it a kind of high-speed dash through a variety of Nvidia’s R&D efforts. If you’re interested, here is HPCwire’s coverage of two previous Dally R&D talks – 2019, 2021 – for a rear-view mirror into work that may begin appearing in products. As a rule, Nvidia’s R&D is very product-focused rather than basic science. You’ll note his description of the R&D mission and organization hasn’t changed much but the topics are different.

