Reducing Design Margins with Silicon Model Calibration

By Guy Cortez and Mark Laird

It’s no secret that chip design gets harder every year. Two major trends drive these ever-increasing challenges. The first is the continual scaling to smaller process nodes. Although the pace of new node introduction has slowed somewhat in recent years, the impact of each new geometry and process is more dramatic than ever. Accelerated device scaling introduces new complexities in layout, timing, power, design integrity, and more. The second trend is the growth in overall design size and complexity; billions of transistors on a single silicon die are now commonplace.

Successful design requires highly accurate models of silicon behavior throughout the development process. This enables accurate prediction of timing and power, with the primary goal that when the fabricated chips arrive back from the foundry, they operate in accordance with the product specification. Silicon foundries work hard to develop the best possible libraries for their customers, based on both calculations from the underlying physics and measurements from test chips during new process development.

However, as transistor geometries shrink, the miscorrelation between predicted and actual silicon behavior is growing significantly. Because of process variation, results from small test-chip runs may not fully correlate with volume production testing of complex system-on-chip (SoC) designs. If the devices run slower than predicted, they may not meet the product specifications, and a re-spin may be required to fix this, increasing project cost and lengthening time-to-market (TTM).

Chips that run faster than predicted reveal designs that were over-margined because of library inaccuracy. Over-margined designs increase die size unnecessarily and consume more power. Conversely, chips that run significantly slower than predicted are susceptible to Vmin and other performance-related failures. The only way to resolve this situation is to refine the libraries based on the behavior observed when testing fabricated chips. This is known as silicon model calibration, an advanced method to improve consistency between the behavior predicted by the design models and the actual performance of the chips.

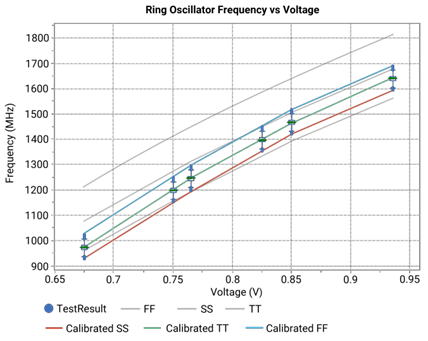

Silicon model calibration allows designers to better understand the manufacturing variation of advanced process nodes and helps validate how accurately pre-silicon models predict post-silicon results. Figure 1 shows a real-world example of this method, with measurements from a 7nm, 300K-gate design. The measurements were taken from a ring oscillator (RO), a structure commonly used to gauge silicon performance, across a group of dies to capture the effect of process variation.

Fig. 1: Ring oscillator frequency versus voltage.
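Each box in a plot like Figure 1 summarizes the RO frequencies of many dies at one supply voltage. The sketch below shows that reduction to a five-number summary; the frequency values and the `box_stats` helper are hypothetical, not data or code from the measured 7nm design.

```python
import statistics

def box_stats(freqs_mhz):
    """Five-number summary (min, Q1, median, Q3, max) of RO frequencies at one voltage."""
    q1, median, q3 = statistics.quantiles(freqs_mhz, n=4, method="inclusive")
    return {
        "min": min(freqs_mhz),
        "q1": q1,
        "median": median,
        "q3": q3,
        "max": max(freqs_mhz),
    }

# Hypothetical RO frequencies in MHz, one value per die, keyed by supply voltage (V).
ro_data = {
    0.675: [410.0, 425.0, 432.0, 440.0, 455.0],
    0.750: [520.0, 531.0, 540.0, 548.0, 560.0],
}

for vdd, freqs in sorted(ro_data.items()):
    s = box_stats(freqs)
    print(f"{vdd:.3f} V: median {s['median']:.1f} MHz, "
          f"IQR {s['q1']:.1f}-{s['q3']:.1f}, range {s['min']:.1f}-{s['max']:.1f}")
```

With real production data there would be far more dies per voltage point, but the summary is computed the same way.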

Compared with the foundry targets reflected in the supplied libraries (the gray lines in Figure 1), the measured silicon (shown as box plots) was slower than the Slow-Slow (SS) process corner for some dies at low voltage (0.675V). As voltage increases, the silicon approaches the nominal Typical-Typical (TT) target and eventually runs slightly faster. The gap between the Fast-Fast (FF) foundry target and the measured frequencies shows that the design is over-margined for hold-violation prevention. In other words, the design tools produce a larger design than necessary to guard against fast silicon that never actually occurs.
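The two observations above, dies falling below the SS target and a gap between the FF target and the fastest silicon, can be quantified per voltage point. This is a minimal sketch under assumed inputs: the target values, the measurements, and the `corner_check` helper are hypothetical and only illustrate the comparison.

```python
def corner_check(freqs_mhz, ss_target_mhz, ff_target_mhz):
    """Compare measured RO frequencies at one voltage against foundry corner targets.

    Returns the fraction of dies slower than the SS target and the percentage by
    which the FF target exceeds the fastest measured die (positive means the FF
    corner guards against silicon that was never observed).
    """
    below_ss = sum(1 for f in freqs_mhz if f < ss_target_mhz)
    fraction_below_ss = below_ss / len(freqs_mhz)
    fastest = max(freqs_mhz)
    ff_gap_pct = 100.0 * (ff_target_mhz - fastest) / fastest
    return fraction_below_ss, ff_gap_pct

# Hypothetical low-voltage measurements and foundry targets (MHz).
freqs = [410.0, 425.0, 432.0, 440.0, 455.0]
frac, gap = corner_check(freqs, ss_target_mhz=420.0, ff_target_mhz=500.0)
print(f"{frac:.0%} of dies below SS; FF target {gap:.1f}% above fastest die")
```

A nonzero fraction below SS points to Vmin risk, while a large positive FF gap points to hold over-margining.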

After the library is calibrated with silicon measurements from production test (the blue FF, green TT, and red SS lines in Figure 1), the new TT target aligns with the median of the silicon population. The FF corner sits at the maximum frequency of the measured chips and the SS corner at the minimum. The design tools therefore account for actual silicon performance, reducing Vmin fallout and avoiding over-margining for hold violations. The result is a 6% correlation improvement between the models and the silicon, enabling a 3.5% reduction in design margins.
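The statistical targeting described above (TT at the median, FF at the maximum, SS at the minimum) can be sketched in a few lines. This is only an illustration of that mapping for RO frequencies; the actual flow calibrates library cell timing, and the helper name, data, and foundry TT values here are hypothetical.

```python
import statistics

def calibrate_corners(ro_data, foundry_tt_mhz):
    """Derive silicon-based corner targets per voltage: SS = min, TT = median, FF = max.

    Also reports how far the new TT target moved from the foundry TT target (%).
    """
    calibrated = {}
    for vdd, freqs in ro_data.items():
        tt = statistics.median(freqs)
        calibrated[vdd] = {
            "SS": min(freqs),
            "TT": tt,
            "FF": max(freqs),
            "tt_shift_pct": 100.0 * (tt - foundry_tt_mhz[vdd]) / foundry_tt_mhz[vdd],
        }
    return calibrated

# Hypothetical measurements (MHz per die) and foundry TT targets (MHz).
ro_data = {0.675: [410.0, 425.0, 432.0, 440.0, 455.0]}
foundry_tt = {0.675: 450.0}

for vdd, c in calibrate_corners(ro_data, foundry_tt).items():
    print(f"{vdd} V: SS {c['SS']}, TT {c['TT']}, FF {c['FF']}, TT shift {c['tt_shift_pct']:+.1f}%")
```

Raw min and max are sensitive to outlier dies; with large populations, one might substitute low and high percentiles, although the article describes the min/max mapping.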

Silicon model calibration is an important application of silicon lifecycle management (SLM), a methodology that improves silicon health and operational metrics at every phase of the device lifecycle. The Synopsys SLM solution is built on a foundation of enriched in-chip observability, analytics, and integrated automation. Monitors enable deep insights from silicon to system, gathering meaningful data at every opportunity for continuous analysis and actionable feedback.

Figure 2 shows how this solution calibrates silicon models to produce designs better correlated with actual silicon results. The ROs are provided by the designers and are supplemented by Synopsys SLM IP monitors, including Process Detectors (PDs) and SLM Path Margin Monitors (PMMs). All three types of structures are inserted into the SoC early in the design phase.

Fig. 2: Synopsys silicon model calibration tool flow.

Once early test chips are fabricated, silicon data from the SLM monitors is gathered on the test floor and then analyzed by Synopsys Silicon.da data analytics. The resulting data is fed back to Synopsys PrimeShield, where SPICE models and both foundry and augmented library files are combined to create a Compact Timing Power Model (CTPM). This model is a database of calibrated timing information for the library cells, including the sensitivity of library timing to voltage and process shifts.
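The article does not describe the CTPM's internal format, so the following is only a hypothetical sketch of what "sensitivity information for voltage and process shifts" can mean for a single cell. It assumes a first-order (linear) model, a normalized process index where TT = 0 and SS = 1, and two-point finite differences; the record layout, `fit_sensitivity`, and the cell name `INV_X1` are all illustrative inventions.

```python
from dataclasses import dataclass

@dataclass
class CellSensitivity:
    """Hypothetical CTPM-like entry: nominal delay plus first-order sensitivities."""
    name: str
    v_nom: float            # nominal supply voltage (V)
    delay_nom_ps: float     # delay at v_nom, TT process
    d_delay_d_vdd: float    # ps per volt (negative: higher VDD -> faster cell)
    d_delay_d_proc: float   # ps per unit of a normalized process index (TT = 0, SS = 1)

    def delay_at(self, vdd, proc):
        """First-order delay estimate at supply vdd and process index proc."""
        return (self.delay_nom_ps
                + self.d_delay_d_vdd * (vdd - self.v_nom)
                + self.d_delay_d_proc * proc)

def fit_sensitivity(name, v_nom, delay_tt_nom, v_alt, delay_tt_alt, delay_ss_nom):
    """Finite-difference sensitivities from three characterized points."""
    d_vdd = (delay_tt_alt - delay_tt_nom) / (v_alt - v_nom)
    d_proc = delay_ss_nom - delay_tt_nom  # SS sits at process index 1 by definition
    return CellSensitivity(name, v_nom, delay_tt_nom, d_vdd, d_proc)

# Hypothetical characterization of one inverter cell.
inv = fit_sensitivity("INV_X1", v_nom=0.75, delay_tt_nom=20.0,
                      v_alt=0.70, delay_tt_alt=24.0, delay_ss_nom=23.0)
print(f"{inv.name}: {inv.d_delay_d_vdd:.1f} ps/V, {inv.d_delay_d_proc:.1f} ps/process unit")
print(f"estimated delay at 0.725 V, process 0.5: {inv.delay_at(0.725, 0.5):.2f} ps")
```

Storing slopes rather than full re-characterized tables is what keeps such a model compact; a production model would likely capture nonlinearity and per-arc, per-load dependence that this sketch ignores.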

The design implementation tools use the CTPM to estimate the change in functional path timing due to voltage and process sensitivity, so pre-silicon timing correlates more accurately with post-silicon results. For next-generation chips on the same process, the calibration yields designs with better power, performance, and area (PPA). The original design can benefit as well: if the measured chip needs a re-spin because it does not meet product performance specifications, for example, the designers may choose to use the CTPM for that re-spin.
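To show how per-cell sensitivities roll up into a path-level timing change, here is a minimal sketch assuming a purely linear model in which each cell contributes its nominal delay plus voltage and process terms. The path, clock period, and shift values are hypothetical, and this is not how PrimeShield or any implementation tool computes timing internally.

```python
def path_delay_ps(cells, dv, dp):
    """First-order path delay: sum of each cell's nominal delay plus sensitivity terms.

    cells: list of (nominal_delay_ps, ps_per_volt, ps_per_process_unit) tuples
    dv:    supply-voltage shift from nominal (V)
    dp:    process shift on a normalized index (0 = TT, positive = slower)
    """
    return sum(nom + s_v * dv + s_p * dp for nom, s_v, s_p in cells)

def path_slack_ps(cells, clock_period_ps, dv, dp):
    """Setup-style slack: positive means the path meets the clock period."""
    return clock_period_ps - path_delay_ps(cells, dv, dp)

# Hypothetical three-cell functional path.
path = [(20.0, -80.0, 3.0), (35.0, -120.0, 5.0), (15.0, -60.0, 2.0)]

nominal = path_slack_ps(path, clock_period_ps=80.0, dv=0.0, dp=0.0)
# Hypothetical calibrated shift: slower-than-TT process and a 25 mV lower supply.
calibrated = path_slack_ps(path, clock_period_ps=80.0, dv=-0.025, dp=0.4)
print(f"nominal slack {nominal:.1f} ps, calibrated slack {calibrated:.1f} ps")
```

In this made-up case, a path with 10 ps of nominal slack becomes slightly negative once the silicon-derived shifts are applied, which is the kind of discrepancy calibration is meant to expose before tapeout.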

Silicon model calibration produces better designs with lower margins by leveraging real-world measurements from production silicon. These results can also be provided to the foundry to help improve the supplied libraries. All parties benefit from this advanced method, from process engineers to end users who now have better chips in their electronic products.

For more information on silicon model calibration, please visit https://www.synopsys.com/ai/ai-powered-eda/silicon-da.html

Mark Laird is a principal applications engineer at Synopsys.

Guy Cortez

Guy Cortez is a product management manager, senior staff, at Synopsys. His marketing career spans more than 25 years. He has held various marketing positions, including technical, product/solution, channel/field, integrated/corporate, alliance and business development, for companies such as Synopsys, Cadence, VMware and Optimal+. Prior to that, Cortez spent 12 years as a test engineer at Hughes Aircraft Company (now Boeing), and later at Sunrise Test Systems (which became Viewlogic, and later Synopsys). At Hughes, he was responsible for generating all of the manufacturing test programs for the ASICs developed in the Missile Systems Group Division. At Sunrise, Cortez was a pre- and post-sales applications engineer and also doubled as the company’s instructor.